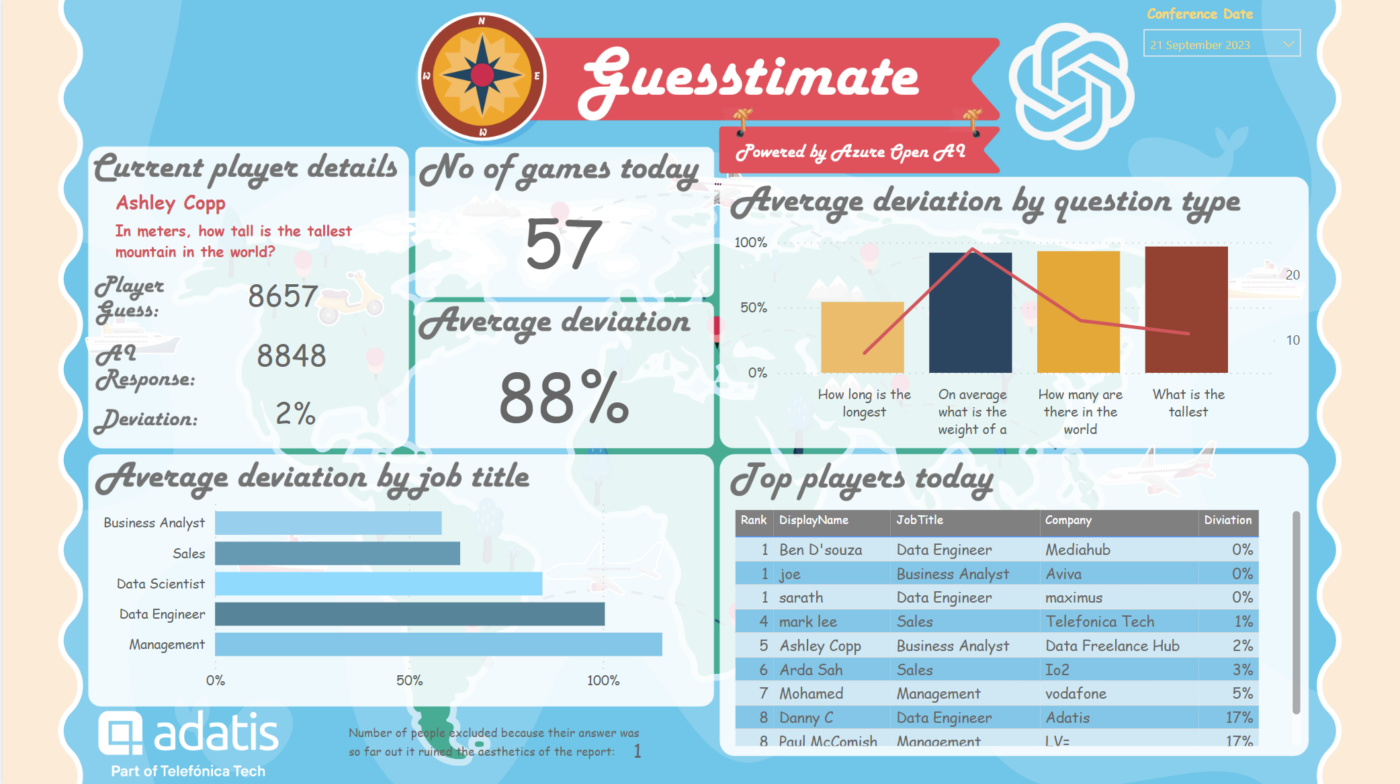

Artificial intelligence tools such as ChatGPT are all the buzz right now, and rightfully so: they enable you to accomplish a diverse array of tasks with remarkable efficiency! As part of the Big Data London conference, Adatis built a game that uses one of these artificial intelligence tools, Azure OpenAI. The rules of the game are as follows:

- Participants are presented with a question, which requires a numeric response.

- Participants then provide their best guess as to the answer to the question.

- The game then queries Azure OpenAI, and returns an answer.

- Here, we assume the answer provided by Azure OpenAI is 100% correct.

- Participants are then scored based on the proximity of their answer to the answer provided by Azure OpenAI.
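The exact scoring rule is not described here, so the following is only a sketch of one plausible approach: score by relative error, so that a guess within a few percent of the reference answer earns most of the points. The function name `score_guess` and the `max_points` parameter are hypothetical.

```python
def score_guess(guess: float, reference: float, max_points: int = 100) -> int:
    """Score a guess by its relative distance from the reference answer.

    Assumption: the real game may use a different rule; this is illustrative.
    """
    if reference == 0:
        # Relative error is undefined for a zero reference, so fall back
        # to the absolute error instead.
        error = abs(guess)
    else:
        error = abs(guess - reference) / abs(reference)
    # An exact guess gets max_points; a guess off by 100% or more gets 0.
    return round(max_points * max(0.0, 1.0 - error))
```

With this rule, a guess that is half the reference value earns half the points, and overshooting by more than double earns none.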

To make this possible, we wrote a Python function that queries Azure OpenAI. This article explains the process behind it.

Set-up

The first thing you will need to do is set up an Azure OpenAI resource. The documentation for this can be viewed here.

After having done this, you will need to retrieve the following:

- URL: The endpoint for making API requests to the Azure OpenAI service.

- API Key: A secret token that authenticates your requests to the Azure OpenAI service.
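One common way to keep these two values out of source code is to read them from environment variables. The sketch below assumes the variable names `AZURE_OPENAI_ENDPOINT` and `AZURE_OPENAI_API_KEY`; these names, and the helper `load_azure_openai_settings`, are illustrative choices rather than anything the game is known to use.

```python
import os


def load_azure_openai_settings(env=None):
    """Return the endpoint URL and API key, read from environment variables."""
    env = os.environ if env is None else env
    settings = {
        # Strip any trailing slash so paths can be appended consistently.
        "endpoint": env.get("AZURE_OPENAI_ENDPOINT", "").rstrip("/"),
        "api_key": env.get("AZURE_OPENAI_API_KEY", ""),
    }
    missing = [name for name, value in settings.items() if not value]
    if missing:
        raise RuntimeError(f"Missing Azure OpenAI settings: {', '.join(missing)}")
    return settings
```

Failing early when a value is missing makes configuration problems easier to spot than an authentication error returned by the service later on.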

The Prompt and Prompt Engineering

Consider the following question (Question 1):

“How many lions are there in Africa?”

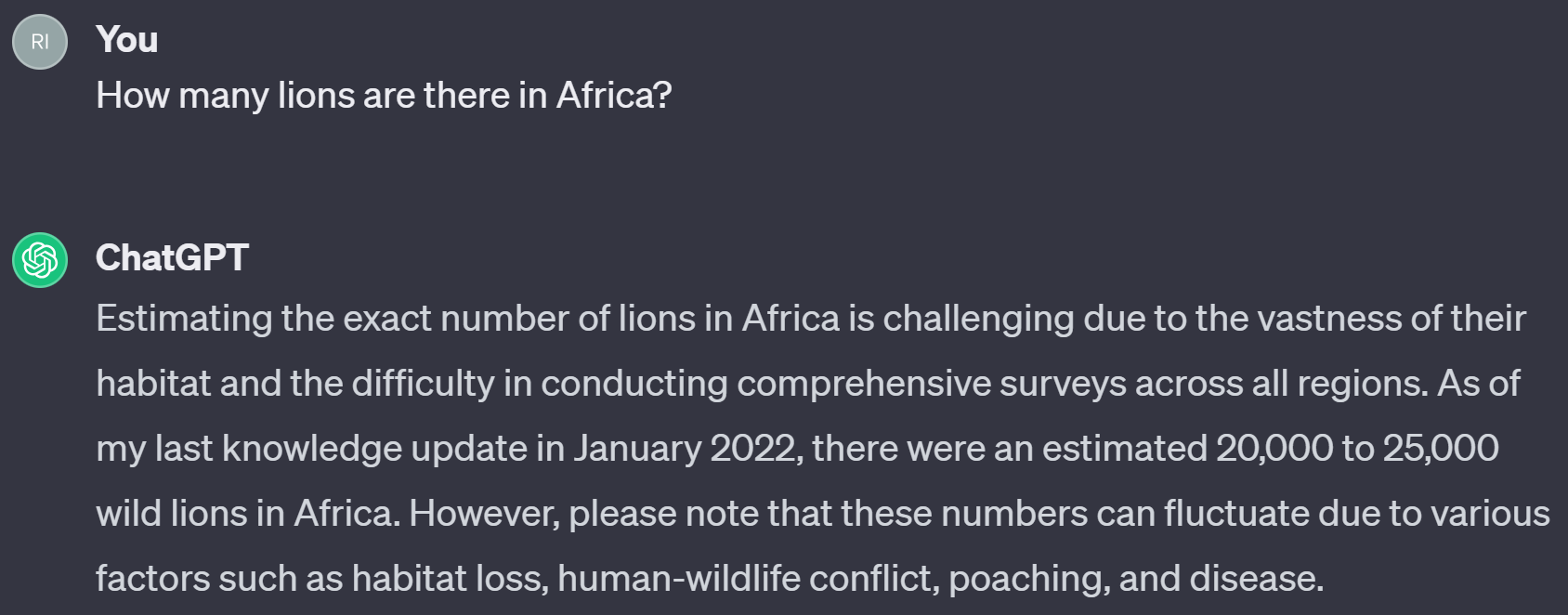

If we ask ChatGPT this question, the response is as follows:

Note the following about the above response:

- It’s relatively long and contains many clarifications. If we wanted to display a short sentence to the participant stating the number of lions in Africa, this response would be too long, as it contains a lot of irrelevant fluff!

- The value provided is a range rather than a single value. This makes it difficult to compare the participant’s answer with the answer provided by Azure OpenAI.

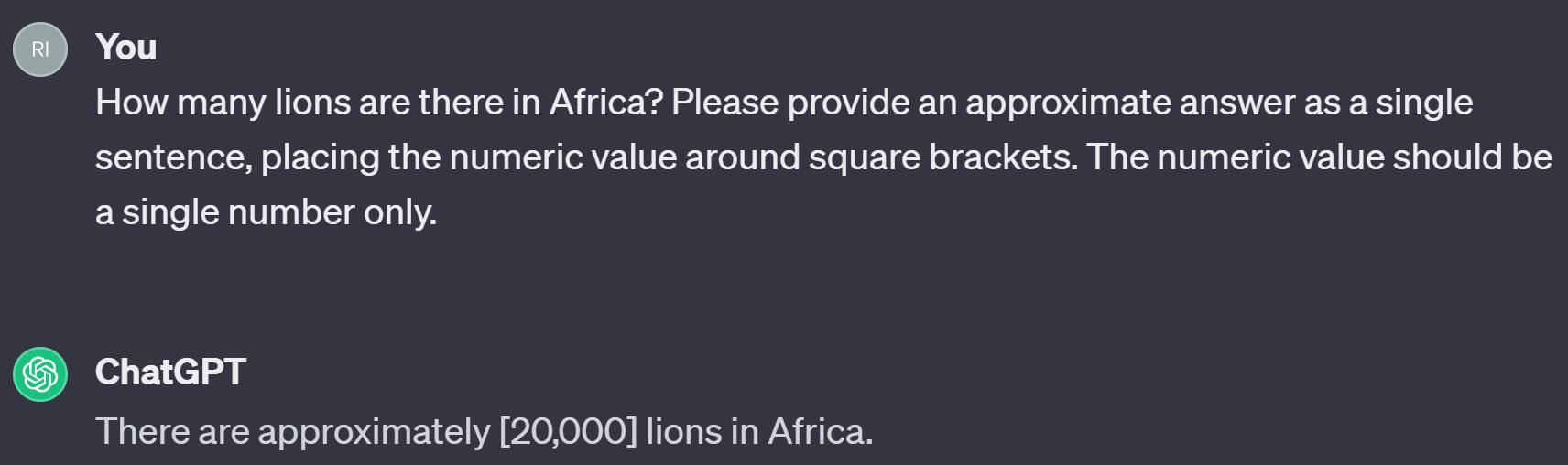

How can we modify our prompt to get a more useful response? We can add a further sentence, informing ChatGPT of the ideal response format:

“Please provide an approximate answer as a single sentence, placing the numeric value around square brackets. The numeric value should be a single number only.”

Now let’s try again!

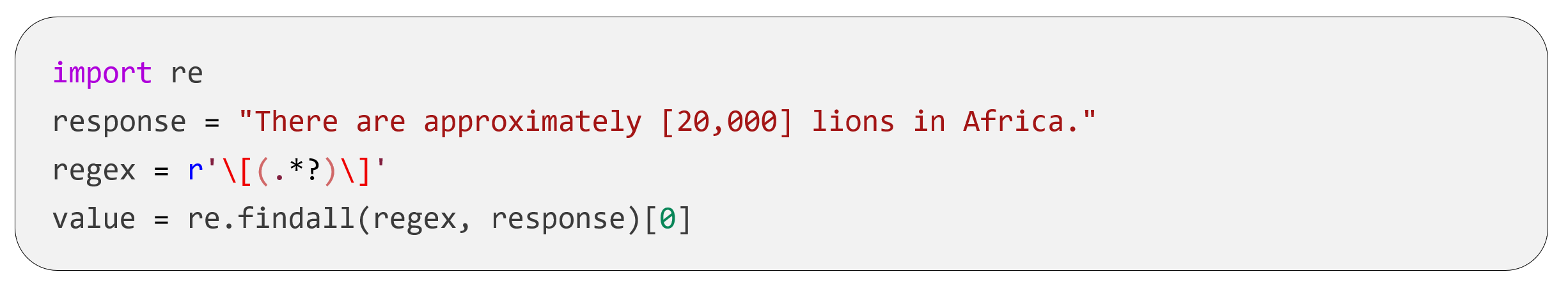

That’s better! Now we have a single sentence with a single value! We have also set up the response so that it is easy to extract this value using a regular expression, as shown below!

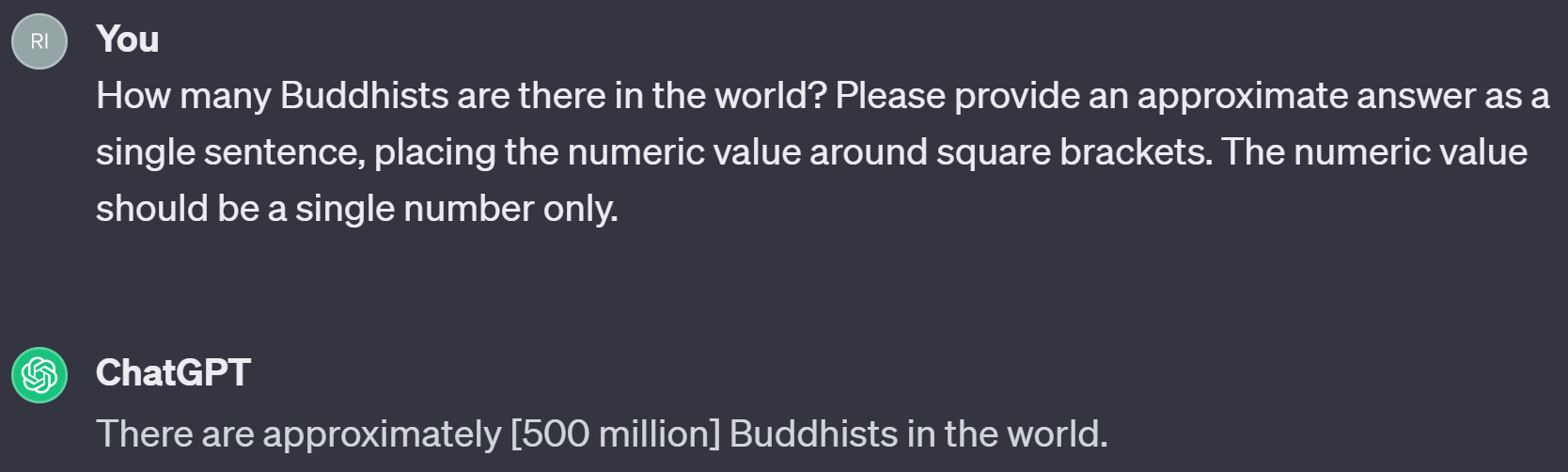
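As a minimal sketch of that extraction step, the snippet below pulls out whatever sits inside the first pair of square brackets. The pattern and the helper name `extract_bracketed_value` are assumptions, and the sample sentence is made up for illustration; it is not actual model output.

```python
import re

# Matches the first "[...]" group and captures its contents.
BRACKETED_VALUE = re.compile(r"\[([^\]]+)\]")


def extract_bracketed_value(sentence):
    """Return the text inside the first pair of square brackets, or None."""
    match = BRACKETED_VALUE.search(sentence)
    return match.group(1).strip() if match else None


# Made-up sample sentence in the requested response format.
sample = "There are approximately [20000] lions in Africa."
value_text = extract_bracketed_value(sample)
```

Returning `None` when no brackets are found lets the caller decide whether to retry the query or skip the question.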

Let’s try another prompt (Question 2):

“How many Buddhists are there in the world?”

The response to this prompt is as follows:

Note the following about the above response:

- The value contains text rather than digits only. This makes it difficult to compare the participant’s answer with the answer provided by Azure OpenAI.

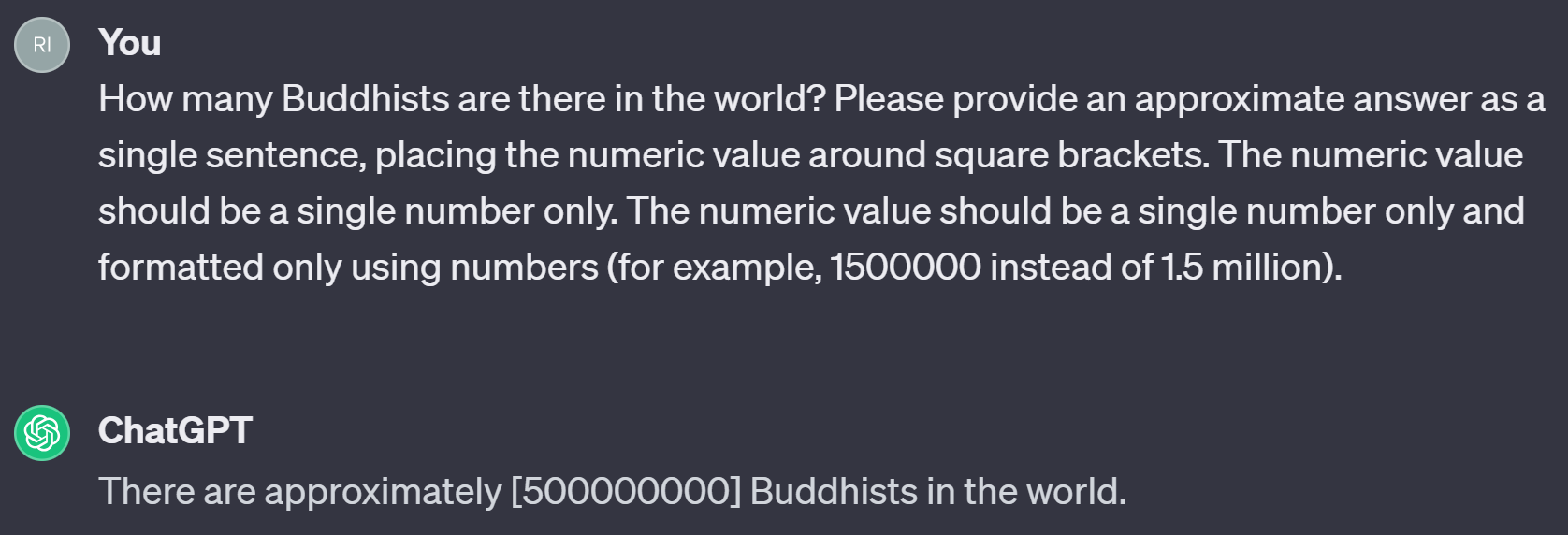

Let’s modify our response format request by adding the following:

“The numeric value should be a single number only and formatted only using numbers (for example, 1500000 instead of 1.5 million).”

Now, let’s try again!

That’s better! Now we have the value in a numeric format, making it easy to convert the string into a numeric datatype so that it can be compared with the participant’s answer. Of course, an alternative to adding this response format request is to write a Python function that produces the same result. However, this is more time-consuming, and there’s no reason to do it if the same functionality can be achieved in an easier way!
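For comparison, here is a rough sketch of what such a Python function might look like. It handles plain digits, thousands separators, and a few scale words; the name `to_number` and the list of supported words are assumptions, and a real implementation would need to cover many more formats.

```python
import re

# Scale words this sketch understands; anything else is rejected.
_MULTIPLIERS = {"thousand": 1_000, "million": 1_000_000, "billion": 1_000_000_000}
_NUMBER = re.compile(r"(\d+(?:\.\d+)?)\s*(thousand|million|billion)?")


def to_number(text):
    """Convert strings such as '1500000' or '1.5 million' to a float."""
    cleaned = text.strip().lower().replace(",", "")
    match = _NUMBER.fullmatch(cleaned)
    if match is None:
        raise ValueError(f"Cannot parse a number from {text!r}")
    value = float(match.group(1))
    scale_word = match.group(2)
    if scale_word:
        value *= _MULTIPLIERS[scale_word]
    return value
```

Even this small version needs a pattern, a lookup table, and error handling, which illustrates why asking the model for digits only is the simpler route.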

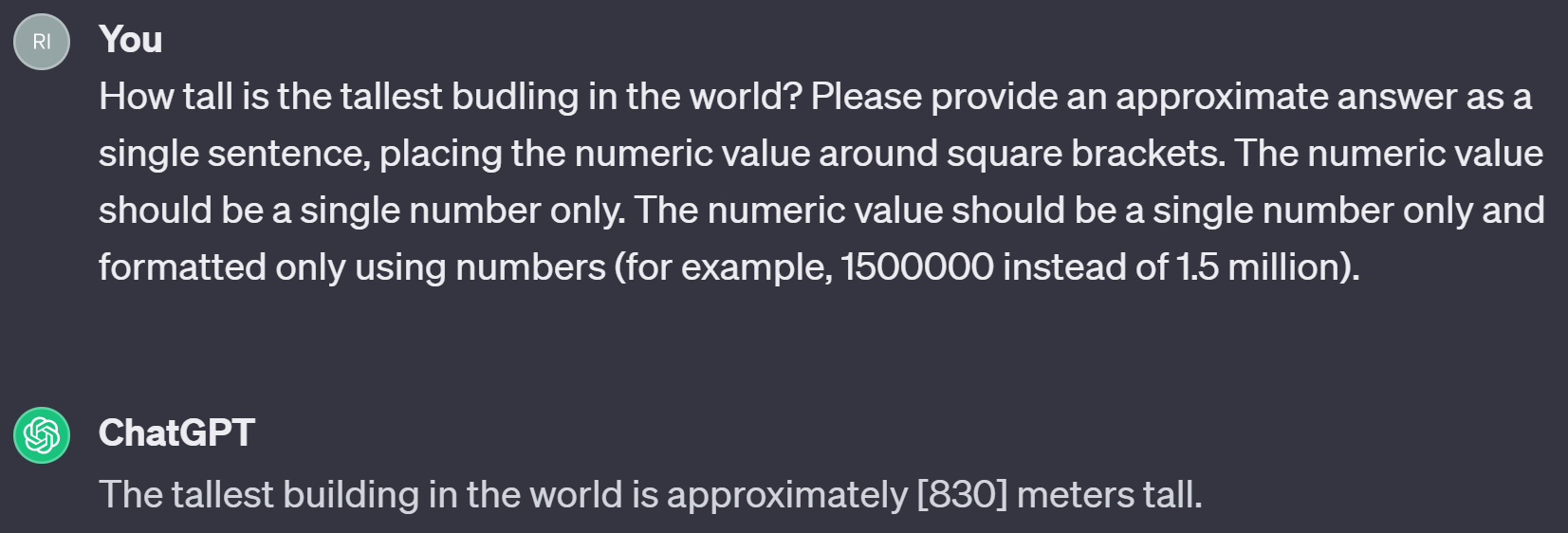

Let’s try one last prompt (Question 3):

“How tall is the tallest building in the world?”

The response to this prompt is as follows:

This prompt seems to work well! However, one thing to note would be the units of measurement. For example, if we asked participants to provide their answer in feet (the Burj Khalifa is 2,722 ft high), they might be scored poorly despite giving a relatively good answer due to the differing units of measurement.
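One way to reduce the unit mismatch is to state the expected unit in the prompt itself. The sketch below combines a question with the response format request quoted earlier and optionally appends a unit sentence. The wording of that unit sentence, the constant name, and the function `build_prompt` are assumptions, not part of the original game.

```python
# The response format request from this article, combined into one string.
RESPONSE_FORMAT_REQUEST = (
    "Please provide an approximate answer as a single sentence, placing the "
    "numeric value around square brackets. The numeric value should be a single "
    "number only and formatted only using numbers (for example, 1500000 instead "
    "of 1.5 million)."
)


def build_prompt(question, unit=None):
    """Append the response format request, and optionally a unit, to a question."""
    parts = [question.strip(), RESPONSE_FORMAT_REQUEST]
    if unit:
        # Hypothetical extra sentence asking for a specific unit.
        parts.append(f"Express the numeric value in {unit}.")
    return " ".join(parts)


prompt = build_prompt("How tall is the tallest building in the world?", unit="metres")
```

The same unit can then be shown to participants alongside the question, so that both answers are on the same scale.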

Because there are so many potential questions and responses, there will always be cases where the response format request is not sufficient. Strategies for dealing with this include:

- Limit the number of different types of questions. For example, with our response format request, questions beginning with “How many” and “How tall” seem to work, but other types of questions may not work as well.

- Pre-populate a table with prompts and ChatGPT responses that have been cleaned appropriately, and query this table instead. We did not do this in our case, as we thought it might reduce the magic of the game!
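The second strategy could be sketched as a simple lookup keyed on a normalised question, with a live query as the fallback. Everything here is hypothetical: the helper names, the normalisation rule, and the shape of the stored answers (a sentence and a value).

```python
def normalise(question):
    """Lower-case, collapse whitespace, and drop trailing question marks."""
    return " ".join(question.lower().split()).rstrip("? ")


def answer_question(question, answers, query_live):
    """Return a pre-cleaned answer if one exists, else call the live query."""
    key = normalise(question)
    if key in answers:
        return answers[key]
    return query_live(question)
```

Here `answers` would be a dictionary built ahead of time from cleaned responses, and `query_live` would be whatever function sends the prompt to Azure OpenAI.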

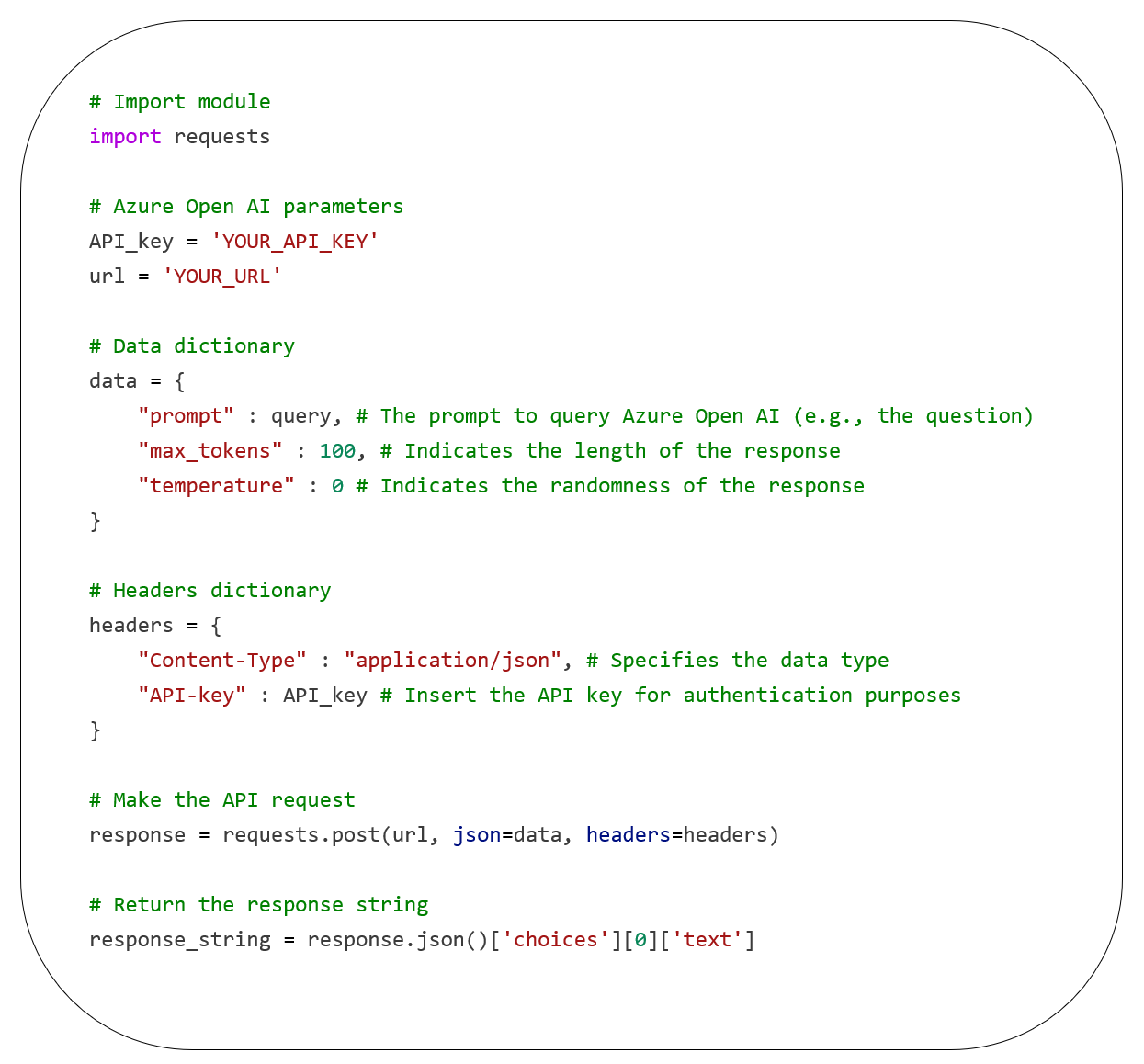

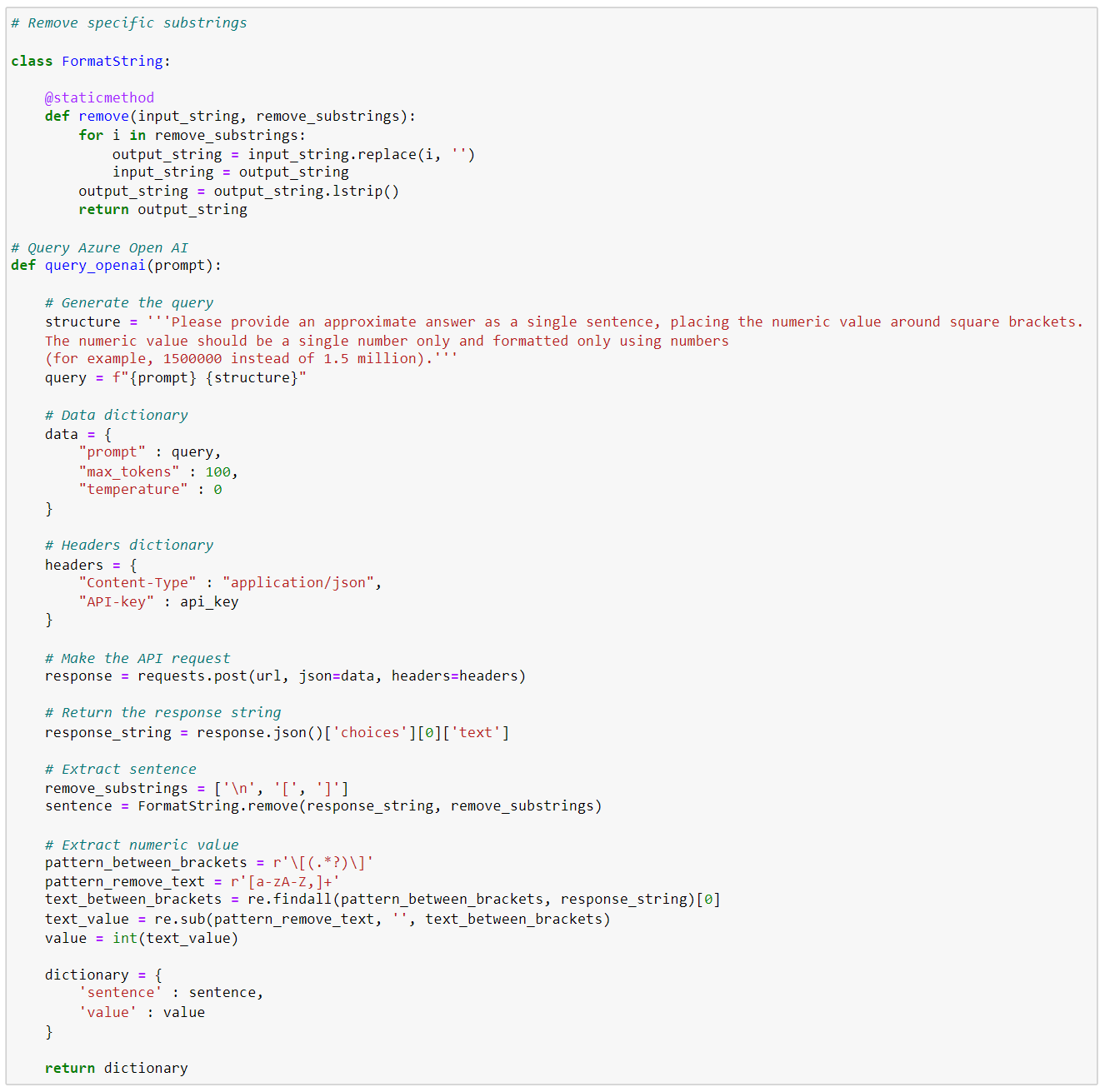

Python Function

The Python function to query Azure OpenAI is actually pretty basic! It is composed of the following steps:

- Use the requests module to query Azure OpenAI with the given prompt.

- Cleanse the response to extract the sentence and value.

Please see the code and comments below to understand how the requests module is used.

The finalised function is shown below:

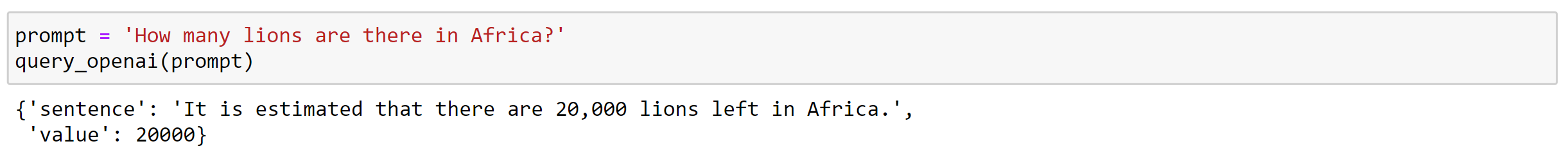
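For readers who cannot view the code, here is a minimal sketch of what a function along these lines might look like. It is not the original implementation. It assumes a chat model deployment, the `chat/completions` REST route with an `api-key` header, and the API version `2024-02-01`; the `deployment` and `post` parameters are illustrative, with `post` existing only so the HTTP call can be swapped out in tests.

```python
import re


def query_openai(prompt, endpoint, api_key, deployment,
                 api_version="2024-02-01", post=None):
    """Send a prompt to an Azure OpenAI chat deployment; return (sentence, value)."""
    if post is None:
        import requests  # third-party package, imported only when needed
        post = requests.post

    url = f"{endpoint.rstrip('/')}/openai/deployments/{deployment}/chat/completions"
    response = post(
        url,
        params={"api-version": api_version},
        headers={"api-key": api_key, "Content-Type": "application/json"},
        json={"messages": [{"role": "user", "content": prompt}], "temperature": 0},
        timeout=30,
    )
    response.raise_for_status()
    content = response.json()["choices"][0]["message"]["content"].strip()

    # Data cleansing: pull the bracketed number out, then drop the brackets.
    match = re.search(r"\[([\d,]+(?:\.\d+)?)\]", content)
    if match is None:
        raise ValueError(f"No bracketed number in response: {content!r}")
    value = float(match.group(1).replace(",", ""))
    sentence = content.replace("[", "").replace("]", "")
    return sentence, value
```

Called with a prompt that includes the response format request, this returns the display sentence and the numeric value as separate items, ready for scoring.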

The output of the query_openai() function for Question 1 is shown below:

Now that the Python function is done, it can be integrated into the game using Azure Functions! The relevant documentation can be viewed here.

Wrap-up

In this blog post, we have discussed how to build a Python function that queries Azure OpenAI. We have also discussed, with reference to an example, the use of prompt engineering to ensure the response generated by Azure OpenAI is as convenient as possible, allowing you to meet your requirements. We hope this blog post helps you to continue leveraging artificial intelligence tools, like Azure OpenAI, in a productive and beneficial way.
