When the term fuzzy matching comes up in the Microsoft world, it’s natural for anyone who’s used the BI stack to think of SQL Server Integration Services (SSIS), because it has both the Fuzzy Grouping and Fuzzy Lookup transformations. So, when using the matching in Data Quality Services (DQS) the other day, I thought it might be worth contrasting the matching capabilities in the two products.

Overview

The SSIS Fuzzy Grouping transformation is typically used on a set of data containing duplicates, as it will operate across all of the rows, grouping similar rows together based on fuzzy comparisons, with a view to eliminating duplicates. In contrast, the Fuzzy Lookup transformation takes a value in the SSIS pipeline and uses fuzzy matching to match the input value against a set of clean reference data in a database.

The matching in DQS takes a set of data and groups the data into clusters, based on a number of rules and other factors, again with a view to removing duplicates. It’s therefore only directly comparable against the SSIS Fuzzy Grouping, rather than the Fuzzy Lookup.
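To make the difference between grouping and lookup concrete, here is a minimal Python sketch. It is only an illustration: the `similarity`, `fuzzy_lookup` and `fuzzy_group` helpers are hypothetical names, and the standard library's `difflib.SequenceMatcher` stands in for the similarity measure. It is not the algorithm that SSIS or DQS actually use, and the 0.8 threshold is an arbitrary choice.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Return a 0..1 similarity ratio (a stand-in, not the SSIS/DQS algorithm)."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def fuzzy_lookup(value, reference, threshold=0.8):
    """Lookup style: match ONE input value against a clean reference list."""
    best = max(reference, key=lambda r: similarity(value, r))
    return best if similarity(value, best) >= threshold else None


def fuzzy_group(rows, threshold=0.8):
    """Grouping style: cluster similar rows within the SAME data set."""
    groups = []
    for row in rows:
        for group in groups:
            # Compare against the first member of each existing group.
            if similarity(row, group[0]) >= threshold:
                group.append(row)
                break
        else:
            groups.append([row])
    return groups


names = ["Pamela Fernandez", "Pamela Fernndez", "John Smith"]
groups = fuzzy_group(names)
match = fuzzy_lookup("Pamela Fernndez", ["Pamela Fernandez", "John Smith"])
print(groups)
print(match)
```

The grouping function only ever looks at the incoming rows, whereas the lookup function needs a separate list of known-good values. That is why DQS matching lines up with Fuzzy Grouping rather than Fuzzy Lookup.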

Test Scenario

For the purposes of this blog post, I’ve got a sample query from AdventureWorksDW, taking data from the DimCustomer table. I’ve unioned all that data with a few sample records that contain typos. E.g. I’ve got ‘Pamela Fernndez’ misspelt for one customer and ‘4610 Pinto Rd’ instead of ‘Road’ for another. I’m going to attempt to de-duplicate data in SSIS first and then do the same thing in DQS to see if there is any difference.

SSIS Fuzzy Grouping

As shown below, I’ve just got a very simple OLE DB source passing some data into a Fuzzy Grouping, then I’m going to examine the data via a data viewer:

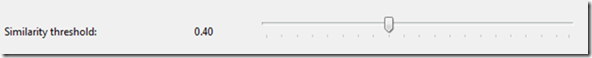

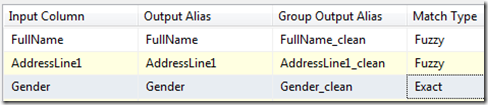

The columns that I’m passing into the Fuzzy Grouping for the Customers are Full Name, Address Line 1, Gender and City, just for the purposes of this blog post. I’ve configured the Fuzzy Grouping transform to carry out fuzzy matching on Address Line 1 and Full Name, as these are (intentionally) the most inconsistent across my made-up data. I’ve also dropped the similarity threshold right down to 0.40 to see the full range of matches that we may or may not get:

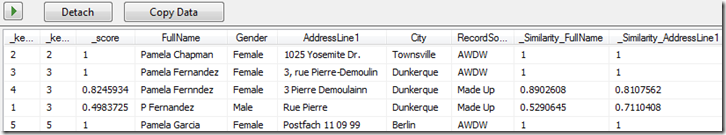

I’ve filtered the data for customers with a surname of Fernandez or a first name of Pamela, which includes two made-up records. When I run the package, the data viewer produces the following results:

The _score column has a high score of 0.8245935, which is based on the similarity scores of FullName and AddressLine1. There are 3 records included in the cluster, including someone called ‘P Fernandez’ who is male. Although that’s been matched, it’s only because I decreased the threshold, plus it can easily be excluded by adding an exact match for Gender, as shown below:

DQS Matching

So overall SSIS copes well with the test scenario. How does DQS compare?

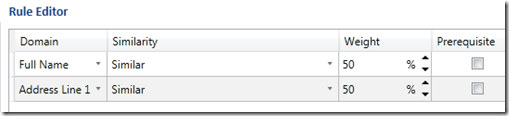

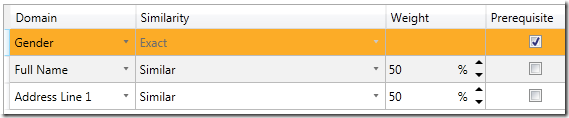

After creating a knowledge base, creating domains and carrying out knowledge discovery, it’s possible to use DQS to create a Matching Policy. The Matching Policy involves building a number of rules that determine how the data matches. The starting point in creating a matching rule is determining which domains you want to match on and whether they should be matched using the fuzzy algorithms (similar) or matched exactly:

So not too dissimilar to SSIS. You then give each domain a percentage weight, and the weights must add up to 100%. For example, if Full Name has a weight of 30% and, when run, a match score of 61%, and Address Line 1 has a weight of 70% and a match score of 45%, then the overall match score for a row will be (0.3 * 0.61) + (0.7 * 0.45) = 49.8%.
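The weighted calculation above can be sketched in a few lines of Python. The function name `weighted_match_score` is my own, purely for illustration, and the per-domain scores are simply the figures from the example rather than anything DQS exposes programmatically.

```python
def weighted_match_score(scores, weights):
    """Combine per-domain similarity scores (0..1) using fractional weights."""
    # The weights in a rule must add up to 100%.
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must add up to 100%")
    return sum(weights[domain] * scores[domain] for domain in weights)


weights = {"Full Name": 0.30, "Address Line 1": 0.70}
scores = {"Full Name": 0.61, "Address Line 1": 0.45}

overall = weighted_match_score(scores, weights)
print(f"{overall:.1%}")  # 49.8%
```

Because Address Line 1 carries 70% of the weight here, a weak address match drags the overall score down much more than a weak name match would.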

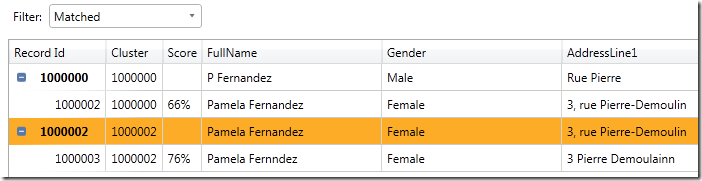

Starting off with a 50% weight on the two domains, I get the following results when operating on the same data:

As with SSIS, it hasn’t got it quite right, but using the Gender domain will help. What I quite like in DQS is that I can flag a domain as being a pre-requisite:

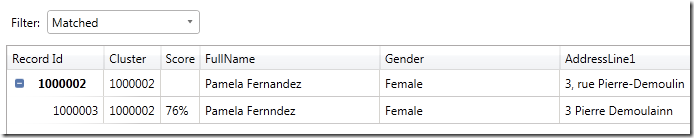

Flagging a domain as a pre-requisite means that the domain will be used to eliminate matches, but will not contribute to the matching score. If I wanted it to contribute to the matching score I would just have to uncheck pre-requisite and give it a weight. Running the matching again gives the following results:
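As a rough sketch of that behaviour, the Python below treats a pre-requisite as a gate that sits in front of the weighted score. It assumes that a pre-requisite domain has to match exactly (a score of 1.0) for the pair to be considered at all. The `rule_score` name and the sample scores are hypothetical and are not taken from my test data.

```python
def rule_score(scores, weights, prerequisites=()):
    """Score a candidate pair; prerequisites gate the match but carry no weight."""
    for domain in prerequisites:
        # Assumption: a prerequisite domain must be an exact match.
        if scores[domain] < 1.0:
            return 0.0  # pair eliminated, other domains are ignored
    return sum(weights[domain] * scores[domain] for domain in weights)


weights = {"Full Name": 0.5, "Address Line 1": 0.5}

# Hypothetical pair where Gender agrees: the score comes only from the weighted domains.
same_gender = {"Full Name": 0.8, "Address Line 1": 0.6, "Gender": 1.0}
# Hypothetical pair where Gender differs: eliminated regardless of the other scores.
diff_gender = {"Full Name": 0.8, "Address Line 1": 0.6, "Gender": 0.0}

kept = rule_score(same_gender, weights, prerequisites=["Gender"])
dropped = rule_score(diff_gender, weights, prerequisites=["Gender"])
print(kept, dropped)
```

Note that Gender never appears in `weights`, so it cannot raise or lower the score of a pair that passes the gate.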

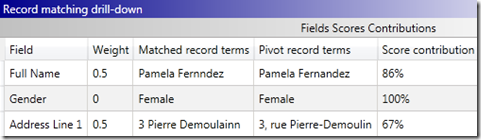

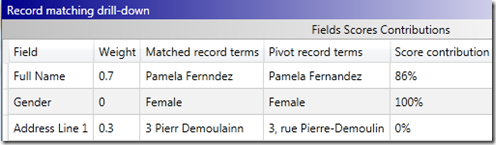

So the duplicate is correctly matched with a score of 75%. Being able to drill down into how the match was achieved is also quite nice in DQS:

This brings me to an interesting observation about the matching. I originally had my dummy record with a value of “3 Pierr Demoulainn” for the address. So in addition to having a typo in the second name and missing the word “Rue”, my other test also missed the second e out of the word “Pierre”. If I run my test data with these values, I get no matches at all. The lack of the second e in “Pierr” seems to have made all the difference. I can get it to match if I give a lot of weighting to Full Name. If I do this, we see the following results:

Address Line 1 has been given a score of 0%. Making the same change to my test data and then running in SSIS gives different results. The similarity for the address drops from 0.81 to 0.75, but it certainly doesn’t drop to zero. Although it will depend massively on your own data, the matching in DQS seems a bit more stringent. This can be mitigated by using more domains for the matching, rather than the simple 2 or 3 domains that I’ve used. To back that statement up, using a composite domain on both Address Line 1 and City does yield a strong match (71%) for the same data.

Conclusion

The rule-based matching in DQS gives a lot of flexibility in how to weight matching across different domains. Coupled with the knowledge base components that interact with the matching, such as cleaning and Term-Based Relations, DQS has more features specifically aimed at matching than SSIS. It all depends on what sort of data you’re working with, but in theory, as you maintain the knowledge base over time, DQS should give you strong matches based on the knowledge you’ve given it.

However, there are some drawbacks. Firstly, it’s a shame that the matching can’t be automated (please vote for my Connect suggestion if you agree). SSIS by its very nature can be automated, meaning the matching in SSIS will be more suitable for a lot of implementations. Secondly, I’d like to be able to match external records against correct values already in the knowledge base, rather than just doing a fuzzy grouping on the external records. I’m a bit surprised DQS can’t do a fuzzy lookup; maybe this will change in V2. Finally, as I’ve shown above, some of the matching results are a little unexpected, whereas in SSIS they were good pretty much straight away. To be fair to DQS, this could probably be mitigated with a better choice of matching fields and by running cleaning against a fully populated knowledge base beforehand.
