Power BI Premium: The SSAS Superset

I have presented on how to successfully roll out Power BI at enterprise scale many times, at SQL Saturday, Data Relay and user groups, and people often ask for a blog on the topic. For this reason, I decided to write a three-part blog series on the successful roll-out of Power BI. Each post will answer one of the questions below in detail:

- Part 1: How is Power BI an enterprise-ready platform?

- Part 2: What steps and considerations should we take when rolling out Power BI?

- Part 3: What questions should we be asking ourselves when choosing a Premium capacity?

We will start by understanding how Power BI is an enterprise-ready platform. Two things are certain: Premium has come a long way since it was first announced, and there are still many questions surrounding it. The purpose of this first post is to answer some of those questions, understand why SQL Server Analysis Services (SSAS) was previously favoured, and explore what has changed to make Power BI a more favourable enterprise solution.

The Roots of Power BI Premium

The primary reason Power BI Premium was introduced was to offer dedicated capacity: resources such as storage, processing and memory that power the Power BI Service for your organization and yours only. This removes the problem of “noisy neighbors” experienced by customers hosting their content in a Shared Capacity.

Because Premium introduces Dedicated Capacity, many of the restrictions placed on customers hosting their content in a Shared Capacity have been lifted. Shared Capacity comes with restrictions such as 8 refreshes per day and 1GB models. These make sense, as they ensure individual customers do not monopolize the shared resources, which would degrade the quality of the service for other customers.

No, surely Premium was for Licensing?

Yes, licensing was a big deal and Premium was welcomed with open arms for it. It resolved the issues of the previous licensing plans, Power BI Free and Power BI Pro. Licensing is no longer only per-user but can instead be per-capacity, which reduces the price considerably compared with purchasing a Pro license for every user, even those who only consume reports and do not develop or publish them.
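To make the per-user versus per-capacity trade-off concrete, here is a minimal sketch of the break-even arithmetic. The prices are hypothetical placeholders, not actual Microsoft list prices, and the sketch only counts report consumers.

```python
import math


def break_even_users(capacity_price: float, price_per_user: float) -> int:
    """Smallest number of report consumers for which a flat capacity fee
    is strictly cheaper than buying each of them a per-user license."""
    return math.floor(capacity_price / price_per_user) + 1


# Hypothetical monthly prices, used for illustration only.
PRO_PER_USER = 10.0
CAPACITY_PER_MONTH = 5000.0

consumers_needed = break_even_users(CAPACITY_PER_MONTH, PRO_PER_USER)
print(consumers_needed)
```

With these placeholder numbers, the capacity only pays for itself once there are several hundred read-only users, which is why the per-capacity model tends to suit larger organizations.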

Power BI Premium is Enterprise Ready

I have had many conversations in the past that always led me to conclude that Power BI was not enterprise-ready, but that was the past. Now, with the capabilities that Premium unlocked initially and incrementally at later stages, and some that come with Pro licensing, we can say that Power BI is enterprise-ready.

We will explore some of the reasons why Power BI was previously not favored over SQL Server Analysis Services (SSAS) and, more importantly, what has changed. However, before we dive in, it’s important to understand that Analysis Services was traditionally perceived as the enterprise platform, used by BI professionals for developing in-depth models classified as the single version of the truth. Even though Power BI uses the same underlying engine as Analysis Services, Power BI was seen purely as the platform used by business analysts for self-service capabilities. Power BI was not associated with the common BI objectives that all organizations want embedded in their foundation.

With that said, things have changed. Power BI and Analysis Services are merging into a superset that is starting to be favored: Power BI Premium. So here we go: below are five Power BI capabilities that enable this change.

1. Power BI was not a single version of the truth, SSAS was!

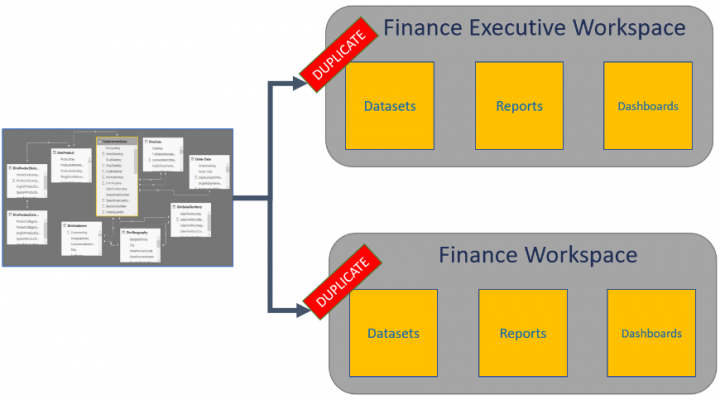

We could assign resources and spend time designing and developing a model in Power BI capable of answering all our business questions, yet we would have to publish that model multiple times across different workspaces. At a time when organizations are seeking to limit the number of data silos, deliver a single version of the truth and improve the decision-making process, this does not seem like an efficient solution.

To put this into context, if we have a single Power BI dataset to be used by multiple reports across different app workspaces, we would have to publish that dataset in every app workspace. So, if a report was required by members of both the Finance workspace and the Finance Executive workspace, we would have to publish the dataset to each workspace, creating duplicate versions. This means unnecessary resources being used, more maintenance and more data silos.

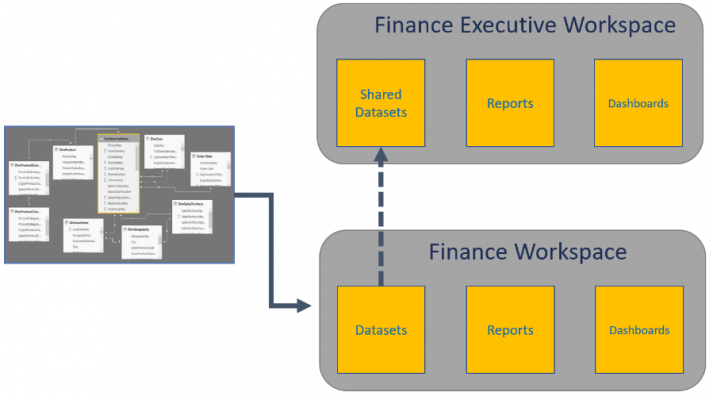

With Shared Datasets this is no longer the case! Power BI can be seen as a single, centralized version of the truth. We can now publish a single dataset to a single workspace and create multiple reports that use that dataset as a source and reside in different workspaces. This means we can publish our dataset to the Finance workspace and then create reports that are published directly to the Finance Executive workspace.

Another thing worth noting is the certification system that came with shared datasets. Allowing reports across multiple workspaces to share Power BI datasets can introduce a layer of confusion for report authors, who need to understand which datasets have been tested and are up to date and accurate. We can now assign the labels Promoted and Certified to Power BI datasets to distinguish the most valued and trusted ones. To make full use of the certification system, it is important to ensure only a select group of people can certify datasets, so that only those qualified to judge the integrity of a dataset are doing so. Also, I find the definitions of certified and promoted to be quite vague, so ensure everyone in the organization is aware of the difference.

This feature is not a Power BI Premium only feature and can be used with Power BI Pro licensing.

2. Power BI was not seen as a true data source, SSAS was!

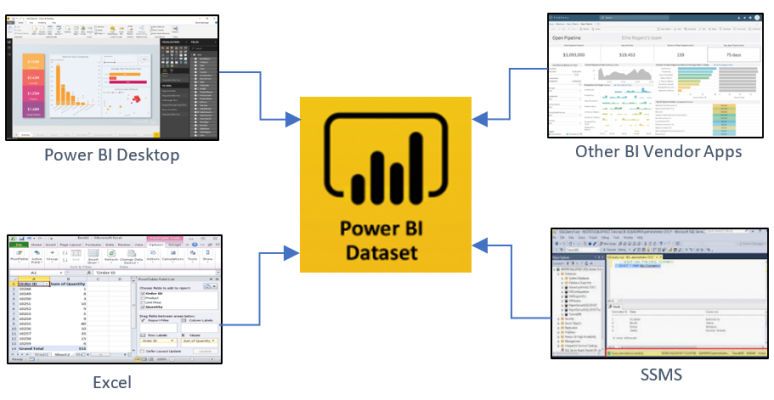

The only ways we could connect to our Power BI dataset were through Power BI Desktop and Excel. This was a great limitation, and Power BI datasets were therefore classified as closed models. With Analysis Services, you could connect and pull the data from a whole list of tools, hence it was classified as an open model.

With XMLA endpoints being opened this is no longer the case. To me, this was one of the biggest capabilities that the Power BI team have delivered. We can now connect to a Power BI model not only from Power BI Desktop and Excel but also from management tools such as Management Studio, debugging tools such as DAX Studio and even other BI vendor applications such as Tableau. This can be done by copying the workspace connection and connecting directly to it. Keep in mind this is currently read-only, so don’t expect to be able to write changes back to the model, although this is on the Power BI roadmap.
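As a rough illustration of what the workspace connection looks like, the sketch below assembles a connection string of the kind a client tool would use. The address pattern is the one I understand the service to show in the workspace settings, so treat it as an assumption and copy the real value from your own workspace. The workspace and dataset names are hypothetical.

```python
def xmla_connection_string(workspace: str, dataset: str) -> str:
    """Build an Analysis Services style connection string that points
    at a Power BI workspace (assumed address format, see note above)."""
    endpoint = f"powerbi://api.powerbi.com/v1.0/myorg/{workspace}"
    return f"Data Source={endpoint};Initial Catalog={dataset}"


# Hypothetical workspace and dataset names.
conn = xmla_connection_string("Finance", "Sales Model")
print(conn)
```

In tools such as Management Studio or DAX Studio you would typically paste only the `powerbi://...` address as the server name and then pick the dataset from the list.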

This is a Power BI Premium only feature, therefore the datasets hosted in the Power BI service must be within dedicated capacities.

3. Power BI did not support incremental refresh, SSAS did!

Analysis Services has partitioning, which enables only part of the data to be included in the recurring refresh process. Power BI had no equivalent, which was a major limitation, especially given how memory-heavy Power BI is when conducting refreshes.

Every time a refresh was conducted on a Power BI dataset, the entire dataset needed to be refreshed. With Incremental Refresh being introduced in Power BI, refreshing data is now efficient. Refreshes are faster because only a chosen window of data is refreshed, more reliable because we no longer require long-lasting connections to source systems, and lighter on memory because only part of the dataset is being refreshed.

To put this into context, imagine having to reload your entire data warehouse daily rather than loading it incrementally. This would not make sense at all. More importantly, the way Power BI refreshes data makes a full reload incredibly inefficient.

Configuring incremental refresh can be done directly in Power BI Desktop. We are not going to go in-depth on the details of setting it up, but it’s important to understand that for large-scale datasets we will not be able to load all the data directly into Power BI Desktop, as the majority of us won’t have machines that can handle this. For this reason, we use pre-defined parameters in Power Query which enable us to load only a subset of the data in Power BI Desktop. Once we publish to the Power BI Service, incremental refresh takes effect and loads all the data on the first refresh.

Once again, this is a Power BI Premium only feature. This means that even though you can configure the parameters and open the incremental refresh dialogue box in Power BI Desktop, incremental refresh will not take place once you publish to the Power BI Service unless the dataset sits in a dedicated capacity.
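The idea behind the date parameters can be sketched outside of Power BI. The real configuration is done in Power Query using the reserved `RangeStart` and `RangeEnd` parameters. The Python below is only an illustration of the logic, with hypothetical rows and a hypothetical `order_date` column.

```python
from datetime import date, timedelta


def filter_by_range(rows, range_start, range_end):
    """Keep rows where range_start <= order_date < range_end, mirroring
    the filter applied with the RangeStart/RangeEnd parameters."""
    return [r for r in rows if range_start <= r["order_date"] < range_end]


def incremental_refresh(cached_rows, source_rows, today, refresh_days):
    """Reload only the most recent window from the source and keep
    everything older exactly as it was cached."""
    range_start = today - timedelta(days=refresh_days)
    range_end = today + timedelta(days=1)
    kept = [r for r in cached_rows if r["order_date"] < range_start]
    reloaded = filter_by_range(source_rows, range_start, range_end)
    return kept + reloaded


# Hypothetical data: the source has a corrected row and a new row.
source = [
    {"order_date": date(2020, 1, 1), "amount": 10},
    {"order_date": date(2020, 1, 9), "amount": 25},
    {"order_date": date(2020, 1, 10), "amount": 5},
]
cache = [
    {"order_date": date(2020, 1, 1), "amount": 10},
    {"order_date": date(2020, 1, 9), "amount": 20},
]

refreshed = incremental_refresh(cache, source, date(2020, 1, 10), refresh_days=2)
print([r["amount"] for r in refreshed])
```

Only the rows inside the two-day window are read from the source again. The older row is left untouched, which is where the savings in time and memory come from.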

4. Power BI couldn’t handle large datasets, SSAS could!

On a shared capacity, we are limited to publishing a 1GB model to the service. Keep in mind this is after compression has been applied, which can reduce the overall size by up to 15 times, depending on factors such as high-cardinality fields. However, even with Premium relaxing this limit by enabling us to publish datasets of between 3GB and 10GB, depending on the Premium SKU purchased, there was still a need for larger datasets.

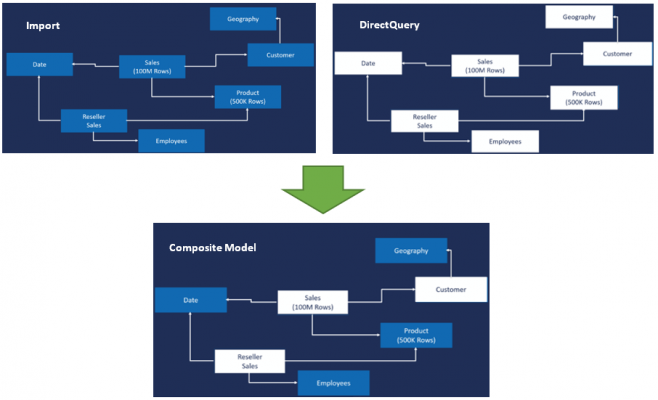

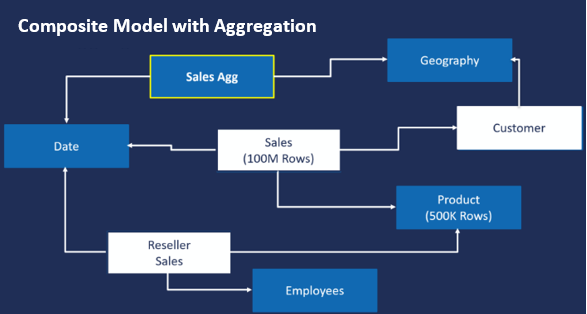

Aggregations and Composite models were the answer to this, as they work hand in hand to let us handle much larger datasets by storing the data in memory at the aggregated level only. This unlocks massive datasets that were physically impossible before.

Two of the connectivity types available in Power BI are Import and DirectQuery, which previously could not be combined in a single Power BI dataset. Import loads all data directly into cache memory and is the optimal way to use Power BI, delivering the best performance. DirectQuery only stores the metadata of the source data and pulls the data on request, through the queries generated when users interact with the visuals. It’s important to understand that memory is our most important asset in Power BI, so when we use Import with large datasets we risk exceeding the maximum memory capacity. When we use DirectQuery, however, we can run into slow performance.

Composite data models now allow us to combine the best of both worlds: reporting on high-volume data and reducing our memory footprint. We can store our large-volume tables with the DirectQuery connectivity type, returning data only at the point of request, and store our more static tables with the Import connectivity type.

Even so, some concerns may remain around performance, as the Sales fact table is in DirectQuery mode, which isn’t the most performant. To resolve this, we use an Aggregate table which stores a summarised version of the Sales fact table. This is done by defining a table that contains the sum of sales grouped by the primary keys of the Date and Geography dimensions, so a single row represents one combination of Date and Geography keys. It can be created like any other table, so you can use Power Query, T-SQL, etc.

When users of the report interact with a visual that is grouped on date and city, the data will come from the aggregate table. However, if the user chooses to view Sales by date and customer name, the query can’t be answered from the in-memory aggregated data, as it is not grouped at this level. It will automatically be directed to the Sales fact table, and therefore to the source, as that table uses the DirectQuery connectivity type.
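The routing decision described above can be sketched as a simple set check. This is a simplified illustration, not how the engine is actually implemented, and the column names are hypothetical attributes of the Date, Geography and Customer dimensions from the example.

```python
# Columns that the aggregate table can answer for, because they belong to
# the dimensions it is grouped by (Date and Geography). Hypothetical names.
AGG_COVERED_COLUMNS = {"date", "month", "year", "city", "country"}


def route_query(group_by_columns):
    """Return which table answers a query grouped by the given columns."""
    if set(group_by_columns) <= AGG_COVERED_COLUMNS:
        return "aggregate table (in memory)"
    return "sales fact (DirectQuery)"


hit = route_query(["date", "city"])
miss = route_query(["date", "customer_name"])
print(hit)
print(miss)
```

A query is served from memory only when every grouping column is covered by the aggregate's grain. A single uncovered column, such as customer name, sends the whole query to the source.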

One very important feature of Composite Models is the storage mode, which allows you to specify how each table will be stored. This applies to the tables themselves, not to the source the tables come from. Let’s continue from the above example. If we do have a user who chooses to view sales by date and customer name, the data will be propagated as the below illustrates.

It’s worth highlighting that the Date dimension is now in DirectQuery (white box) mode instead of Import (blue box) mode as it was previously. How can it be both Import and DirectQuery? This is through the ability to choose between the storage modes Import, DirectQuery and Dual. A table in Dual storage mode can be either cached or not cached depending on the context of the query, so it can flip back and forth. This is much more efficient: if the Date dimension remained in Import mode, all the 100M records would need to be loaded into memory first, and only then could the join with the Date dimension occur. With the Date dimension acting as DirectQuery, the join is much cheaper because it is folded back to the source.

The ability to handle large volumes of data is expected from an enterprise BI platform, and Composite Models with Aggregations certainly allow Power BI to check that box. This is not a Power BI Premium only feature, so Power BI Pro supports this capability too.

It’s worth pointing out the Power BI Roadmap, as it’s the go-to place to see what to expect in the near future. Regarding data volumes, the limit on dataset size is currently 1GB for Power BI Pro users and up to 10GB for Power BI Premium users, depending on the SKU purchased. There is also an upcoming feature that will allow much larger datasets to be stored. More details are available on the Power BI Roadmap.
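To make the flip between cached and not cached more tangible, here is a deliberately simplified sketch of how a Dual table could resolve its behaviour per query. The rule used (act as DirectQuery whenever a DirectQuery table takes part in the query, otherwise act as Import) is my simplification of the behaviour described above, and the table names are hypothetical.

```python
# Hypothetical model: storage mode chosen for each table.
STORAGE_MODE = {
    "Sales": "DirectQuery",
    "SalesAgg": "Import",
    "Date": "Dual",
    "Geography": "Dual",
    "Customer": "DirectQuery",
}


def effective_modes(tables_in_query):
    """Resolve how each table behaves for one query. Dual tables follow
    the other tables: DirectQuery if any is involved, else Import."""
    fixed = {STORAGE_MODE[t] for t in tables_in_query if STORAGE_MODE[t] != "Dual"}
    dual_acts_as = "DirectQuery" if "DirectQuery" in fixed else "Import"
    return {
        t: dual_acts_as if STORAGE_MODE[t] == "Dual" else STORAGE_MODE[t]
        for t in tables_in_query
    }


cached = effective_modes(["SalesAgg", "Date", "Geography"])
folded = effective_modes(["Sales", "Date", "Customer"])
print(cached["Date"])
print(folded["Date"])
```

The same Date table is served from memory when it joins the aggregate table, and is pushed down to the source when it joins the DirectQuery fact table.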

5. Power BI was not seen as an enterprise reporting tool

There is no denying that the Power BI ecosystem was and is pretty cool with all the capabilities available. However, one aspect that was missing, and a major drawback, was the lack of capabilities around operational reporting.

You may be thinking that this is how it should be. After all, Power BI is not for operational reporting but for self-service and data discovery, which serve a different purpose. I would agree with this too. However, for Power BI to be classified as enterprise-ready we must have the ability to develop reports that are pixel perfect, print-ready and fit onto a single page, with the common feature of subscriptions for distributing them by email. Power BI Desktop and Power BI Report Builder cannot be used interchangeably, as they serve different purposes.

Power BI Paginated Report Builder now offers the traditional capabilities that we previously found in SSRS for developing reports (RDL) and publishing them either in the Power BI Service or in the Power BI Report Server, alongside our regular Power BI Reports (PBIX). Paginated Reports can also use Power BI Shared and Certified datasets as a source, creating a suite of reports in the Power BI Service that all draw data from the same source.

As we are talking about Paginated Reports and the factors that contribute to Power BI being an enterprise solution, it’s worth mentioning the Power BI Report Server (PBIRS), as many organizations want to host their data on-premises behind their own firewalls. This report server is a superset of SQL Server Reporting Services: everything that can be done in Reporting Services can be done in Power BI Report Server, with the added feature of supporting Power BI (PBIX) reports.

Wait, what? Where is…

When presenting on how to successfully roll out Power BI at an enterprise scale, I usually get asked why I haven’t included some capabilities, such as Dataflows. This is a fair question, as Dataflows can contribute when rolling out Power BI at an enterprise level, especially for our Power BI Champions (Dataset Designers), which we will discuss in more detail in the part-two blog post. More capabilities exist now, or are on the Power BI roadmap, that will further contribute to making Power BI an enterprise platform. However, I have chosen to highlight my top five.

Summary

Not too long ago I was classifying Power BI datasets as limited and downgraded versions of AS models. However, the Power BI team certainly have done a great job in evolving Power BI into what it is today! All the capabilities we discussed above contribute to making Power BI an enterprise-ready platform.

Many still have concerns about how mature these capabilities are, which is natural, as Analysis Services has been serving and delivering for much longer than Power BI has been in the picture. But there is no denying that Power BI, through Premium, is merging with Analysis Services into an SSAS superset.

So, we went through some of the capabilities that make Power BI an enterprise-ready platform. Next time, we will look to answer:

- How do we avoid the common issues that large organizations are currently facing?

- What are the Power BI approaches we can take to avoid the common issues and increase chances of success?

- What factors should we consider when moving across the Power BI approaches?

Sources:

Governance and deployment approaches. Available: https://docs.microsoft.com/en-us/power-platform-release-plan/2019wave2/business-intelligence/planned-features.

______________________________

Twitter: @Data_Laz

Email: Lazaros.viastikopoulos@adatis.co.uk
