In the last blog of this series: https://adat.is/35yDQMg we looked at delegation in more detail. This blog will focus on further points specific to PowerApps, which are worth keeping in mind during the development stage of a PowerApps application – particularly on communication done from PowerApps to the backend database.

Rate Limit

Whilst putting down a development concept for a PowerApps application, the minute you start replying to yourself “I don’t need to, it’s just a small application!” for consecutive architectural thoughts and development approaches, might be the minute you need to stop and re-think. There are many suggested practices that might seem to do the trick, but which will be a showstopper when it comes to scalability.

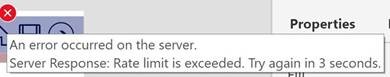

One of such examples, is the rate call limit. Both Flow and PowerApps have a limit on the number of calls (or communication requests) that can be done with an API or a datasource/ connection (whether its Azure SQL, SQL, Flow etc.) And even when or if the number of calls is not intending to be much, it is still suggested, as with best practices of development, to keep these the amount of calls to a minimum. It only takes a ForAll() loop to a direct and large datasource to fire up the number of calls – and this basically causes errors similar to the following:

It is also worth keeping in mind that these limitations go beyond multi-user usage of the application and through excessive calls to the database, limits could be reached by one user (since the limit is after all per user for PowerApps).

The way some commands tend to handle the calls to the database might differ from other, making some more prone. Here is a quick example of replicating this issue. In this example we are seeking specifically the intention of hitting the problem, and hence we will not be adopting best practices to avoid it or to implement normal development concepts.

The screen has one Gallery that is loading all the rows inside a Product List table – made up of 9905 rows. Our intention is to copy the contents of this SQL table into another SQL table, which is currently blank. Keeping in mind the limit that exists for non-delegatable commands, we are going to approach this by creating a SQL function that calculates how much re-runs need to be done to transfer the entire table (which will go beyond the delegation limit specified in PowerApps application – in this case it has been changed from the default of 500 to the maximum of 2000 in the application settings). Thus in this case, dividing the total rows by 2000, will result in the need of 5 re-runs.

In the OnSelect() of the Patch button, the following logic will run:

//Caching the ReRunsView

ClearCollect(RerunsPointer,'[dbo].[ReRunsView]');

//Setting Maximum number of re-runs

ForAll(RerunsPointer, Patch ('[dbo].[New Product List 1]', Filter ('[dbo].[Ori Product List 1]', (ProductID - 1) > RangeFrom && ProductID <= RangeTo)))

Hence for every pointer, the equivalent from/to range will be chosen from the source table and saved into the destination table, row-by-row.

As can be seen from the clip, during this process, the limit is being exceeded due to the numerous calls being done to the backend in a short amount of time.

The current number of call limits that exist in Flow and PowerApps for each API, can be found in a great blog post by Pieter Veenstra – accessible here: https://veenstra.me.uk/2018/07/17/microsoft-flow-connector-limits/ – this list has also been referred to in Microsoft communication in the past.

There are different mitigation steps to ensure such issues are decreased:

- Use Collections. Collections offer the capability of loading a datasource (for example a SQL table) in the application cache and be able to reference to it from there onwards instead of the original datasource. Hence in this case the call is being done to populate the collection, but then any further communication (for example LookUp() or Filter()) will be done on the collection without a call to the original datasource. It is worth mentioning though, that loading various table at the start might come as a trade-off of performance. To improve performance some might suggest Concurrent() function to start all at once – but see point 6 for this.

- Include Flow. In order to disperse the amount of calls being done over multiple datasources (and hence decrease the chances of reach the limit quicker), doing some of the read and writes from/to the database through stored procedures in Flow will be helpful. Please bear in mind though that Flow still will have a limit, and the limit in Flow is per user/per connection. Hence having multiple SQL connections in Flow, separate from the SQL connection used in PowerApps will help in controlling the calls.

- Filter data before the calls. There might be circumstances where an entire table, with a lot of records, is being loaded from the database and then filtered in the application itself. Hence if the call is done with a predicate and specific filters are applied, it will help in both performance and traffic.

- Decrease or if possible eliminate any ForAll() loops on read or write to the database, to avoid heavy triggers of data.

- Increase number of connections.

- Similar to any other programming language, concurrency is to be handled with care. Using the Concurrent() function to load simultaneous collections is beneficial, but doing concurrent Patching to the database will yield the same results of deadlocks, locks and other issues which would have been encountered with other technologies. Likewise this would lead to multiple simultaneous calls to the database, possibly leading to increased chances of rate call limit being exceeded.

Bulk inserts

In various blogs and documentation, ForAll() loops are suggested as an option for bulk inserts from PowerApps. Even if this will do the job of committing the values in the database especially for a handful of rows, I would personally avoid using these for inserts. Whenever a ForAll() loop is used, the row will be passed one by one, in a short time, firing multiple calls in a short time to the database, which is considered as bad practice apart from other risks involved architecturally.

Some alternatives to this will be to utilize Flow. This enables the use of an SQL stored procedure that can accept parameters and apply the INSERT or MERGE based on those passed procedures. In some cases it might also be worth passing the information to a collection first and then using the data in the collection to persist it in the database (instead of calling a Flow directly from an OnChange attribute in Gallery rows). This can be done for instance using the SaveData() command.

Other than these, bulk inserts might be deemed as limited at the moment in PowerApps – when considering bulk updates best practices.

This brings us to the end of this blog post. We will continue further on this series in the upcoming week/s.

In the meantime, as always, feel free to get in touch or bounce off any ideas – either on the comments below or even on twitter: @bonello_jason

Introduction to Data Wrangler in Microsoft Fabric

What is Data Wrangler? A key selling point of Microsoft Fabric is the Data Science

Jul

Autogen Power BI Model in Tabular Editor

In the realm of business intelligence, Power BI has emerged as a powerful tool for

Jul

Microsoft Healthcare Accelerator for Fabric

Microsoft released the Healthcare Data Solutions in Microsoft Fabric in Q1 2024. It was introduced

Jul

Unlock the Power of Colour: Make Your Power BI Reports Pop

Colour is a powerful visual tool that can enhance the appeal and readability of your

Jul

Python vs. PySpark: Navigating Data Analytics in Databricks – Part 2

Part 2: Exploring Advanced Functionalities in Databricks Welcome back to our Databricks journey! In this

May

GPT-4 with Vision vs Custom Vision in Anomaly Detection

Businesses today are generating data at an unprecedented rate. Automated processing of data is essential

May

Exploring DALL·E Capabilities

What is DALL·E? DALL·E is text-to-image generation system developed by OpenAI using deep learning methodologies.

May

Using Copilot Studio to Develop a HR Policy Bot

The next addition to Microsoft’s generative AI and large language model tools is Microsoft Copilot

Apr