Using Python and the Power BI REST API

Now, why would you want to do that?

There are some scenarios where this approach may be beneficial. To get the full picture, let’s first answer two questions:

Why a Databricks DataFrame?

Databricks has recently become an integral part of the Modern Data Warehouse approach on the Azure cloud. Its wide usage in data transformation calls for a richer variety of data destinations. The usual and most widely used persistence is the file store (lake, blob, etc.). It’s fine with large volumes of data, but then we have to go a long way before reaching the data presentation (additional storage layers, SQL, Tabular data model, etc.).

What if we want to instantly update a Power BI report directly from Databricks? It could be a small dataset feeding a dashboard, for example. It could be business data or data-related metrics that we want to see in (near) real time. Or we may want to monitor the data transformation process continuously in a Databricks streaming scenario.

Why a Push Dataset?

We can do real-time streaming in Power BI by using Streaming or PubNub streaming datasets, which is fine, but let’s see some of the advantages of using a Push dataset:

- You can build a report on top of a Push dataset
- You can pin report visuals to a dashboard, and they will update in real-time on each data push
- Data is stored permanently in Power BI
- You don’t need a data gateway in place

For a more detailed comparison of all the real-time streaming methods, please check here.

If you think that all this makes sense, then continue further.

Implementation

Since we’ll be utilising the Power BI REST API there are some limitations that we need to be aware of upfront. You can see them here.

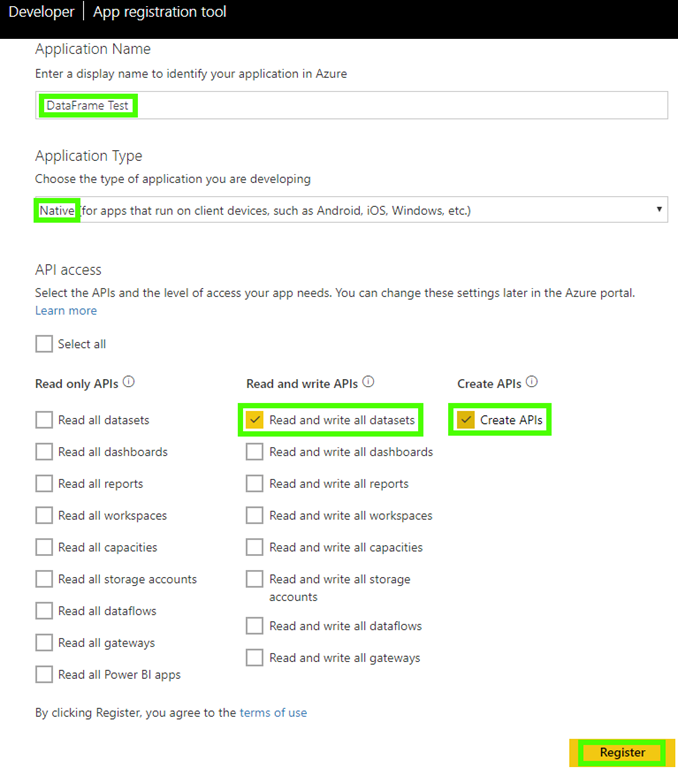

In order to be able to call the Power BI API you need to register an application and set proper permissions. Follow the steps here. App registration details below:

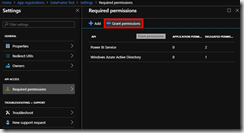

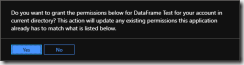

After creating the app registration, you should grant permissions in the Azure portal:

Without granting these permissions in the Azure portal you won’t be able to get an authorisation token and use the REST API.
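To make the token step less abstract, here is a minimal sketch of a password-grant token request against Azure AD. It is not the code of the pbiDatasetAPI class: the function names build_token_payload and request_token are hypothetical, and the sketch assumes that the app registration allows the username/password flow. Only the Python standard library is used.

```python
import json
import urllib.parse
import urllib.request

# Endpoint and resource as they appear in the token discussion below this post.
TOKEN_URL = "https://login.microsoftonline.com/common/oauth2/token"
POWER_BI_RESOURCE = "https://analysis.windows.net/powerbi/api"


def build_token_payload(username, password, application_id):
    """Build the form fields for an OAuth2 password-grant request (hypothetical helper)."""
    return {
        "grant_type": "password",
        "resource": POWER_BI_RESOURCE,
        "client_id": application_id,
        "username": username,
        "password": password,
    }


def request_token(payload):
    """POST the form-encoded payload and return the parsed JSON response."""
    body = urllib.parse.urlencode(payload).encode("utf-8")
    request = urllib.request.Request(TOKEN_URL, data=body)
    # urlopen raises urllib.error.HTTPError on a 4xx/5xx response.
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

With valid credentials and granted permissions, request_token(build_token_payload(username, password, application_id))["access_token"] should give the bearer token string that goes into the Authorization header of every API call.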

Now that we’re all set up, let’s go straight to the point.

My initial intention was to show you how to build the whole thing step-by-step in this post. But then it became complicated, and I decided that an abstraction was needed to keep things simple. That’s why I wrapped up all the “boring” stuff in a Python class called pbiDatasetAPI, which you can find on GitHub here. The comments in the code should be enough to understand the logic. The methods of this class will take care of:

- Authentication
- HTTP requests
- HTTP requests JSON body generation (data and metadata)
- Data type conversion (Spark to EDM)
- Wrapping in a Python function of all the Power BI REST API operations that we need to perform
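To give an idea of what the type conversion and metadata generation involve, here is a rough sketch. It is an illustration under stated assumptions, not the class's actual code: the mapping table, to_pbi_type and build_dataset_body are hypothetical names, the input mimics the (column, type) pairs returned by a DataFrame's dtypes attribute, and unknown Spark types fall back to String by choice.

```python
# Assumed mapping from Spark SQL type names to Power BI push dataset data types.
SPARK_TO_PBI = {
    "string": "String",
    "boolean": "Boolean",
    "tinyint": "Int64",
    "smallint": "Int64",
    "int": "Int64",
    "bigint": "Int64",
    "float": "Double",
    "double": "Double",
    "date": "Datetime",
    "timestamp": "Datetime",
}


def to_pbi_type(spark_type):
    """Translate a Spark type name such as 'double' or 'decimal(10,2)'."""
    if spark_type.startswith("decimal"):
        return "Decimal"
    # Fallback for complex types (arrays, structs, ...) is an assumption.
    return SPARK_TO_PBI.get(spark_type, "String")


def build_dataset_body(dataset_name, tables):
    """Build the body for creating a Push dataset.

    tables: dict of table name -> list of (column name, Spark type) pairs.
    """
    return {
        "name": dataset_name,
        "defaultMode": "Push",
        "tables": [
            {
                "name": table_name,
                "columns": [
                    {"name": column, "dataType": to_pbi_type(spark_type)}
                    for column, spark_type in columns
                ],
            }
            for table_name, columns in tables.items()
        ],
    }
```

In a notebook, the input could be assembled as {"AmazonReviews": dfInject.dtypes}, and the resulting dictionary serialised with json.dumps before it is sent.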

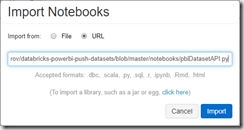

To start using it, import the notebook (using the above-mentioned URL) into your Databricks Workspace:

Now that the class notebook is imported you can create a new Python notebook in the same folder to test how it’s working. Let’s call it “Inject DataFrame into Power BI Push Dataset”. First, we’ll execute our class notebook:

%run "./pbiDatasetAPI"

Next, we’ll need a DataFrame with data that will be pushed to the Power BI Push dataset. We can use some of the sample datasets which come with Databricks (in this case Amazon reviews):

dfInject = spark.read.parquet('dbfs:/databricks-datasets/amazon/test4K')
dfInject = dfInject.select("brand", "img", "price", "rating", "review", "time").limit(200)

We take 200 rows, which is just enough.

In the next command we’ll create some variables and instantiate the pbiDatasetAPI class:

# Initialise variables
username = ""
password = ""
application_id = "********-****-****-****-************"  # Power BI application ID
groupId = None  # Set to None if not using Power BI groups
datasetName = "InjectedDataset"  # The name of the Power BI dataset
tableNames = ["AmazonReviews"]  # Table name or list of table names
dataFrames = [dfInject]  # DataFrame name or list of DataFrame names

# Create a pbiDatasetAPI class instance
pbi = pbiDatasetAPI(username, password, application_id)

You should set your username and password, and the application ID obtained in the previous steps. Optionally, also provide a group ID, or set it to None if you’re not using groups on powerbi.com.
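The group ID matters because the REST API exposes datasets under two different paths: one for "My workspace" and one for a specific group. The sketch below shows how the base URL could be chosen; datasets_url is a hypothetical helper written for this illustration and is not taken from the pbiDatasetAPI class.

```python
API_ROOT = "https://api.powerbi.com/v1.0/myorg"


def datasets_url(group_id=None):
    """Return the datasets collection URL, with or without a group."""
    if group_id is None:
        # No group: datasets live directly under "My workspace".
        return f"{API_ROOT}/datasets"
    return f"{API_ROOT}/groups/{group_id}/datasets"
```

With groupId = None this yields the "My workspace" URL; passing a group ID inserts the groups/{groupId} segment in front of datasets.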

The tableNames and dataFrames variables are lists, because we may want to insert multiple DataFrames in multiple tables. In our case it’s one DataFrame to one table.

Let’s create a dataset with one table in Power BI:

# Create the dataset and the table in PBI
pbi.executePBIOperation("postdataset", groupId = groupId, datasetName = datasetName, tableNames = tableNames, dataFrames = dataFrames, reCreateIfExists = True)

The next step is to post all the DataFrame’s rows to the Push dataset.

# Get the datasetKey for the dataset (by name)
datasetKey = pbi.executePBIOperation("getdatasetbyname", groupId = groupId, datasetName = datasetName)[0]["id"]

# Insert the contents of the DataFrame in the PBI dataset table
pbi.executePBIOperation("postrows", groupId = groupId, datasetKey = datasetKey, tableNames = tableNames, dataFrames = dataFrames)
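Behind a row push like this one, the rows have to be serialised into a JSON body of the form {"rows": [...]}. The Power BI REST API accepts at most 10,000 rows per POST rows request, so larger DataFrames need batching. The sketch below is an assumption about how that could be done, not the class's actual code: rows_to_batches is a hypothetical helper, and the rows are plain dictionaries such as those produced by [row.asDict() for row in df.collect()].

```python
import json

MAX_ROWS_PER_REQUEST = 10000  # Power BI limit for a single POST rows request


def rows_to_batches(rows, batch_size=MAX_ROWS_PER_REQUEST):
    """Yield JSON request bodies, each holding at most batch_size rows."""
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        # default=str turns values json cannot encode (dates, decimals) into strings.
        yield json.dumps({"rows": batch}, default=str)
```

Each yielded string would then be sent as the body of one POST request to the table's rows endpoint. For the 200 rows used here a single batch is enough.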

This is where the magic happens. One important note here is that the dataset key is always unique. This does not apply to the dataset’s name, which means that we can have multiple datasets with the same name.
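Because names are not unique, a lookup by name has to return a list, which is why the code above picks the first element with [0]["id"]. A minimal sketch of such a lookup follows; find_datasets_by_name is a hypothetical function, and its input is assumed to be the list of dataset dictionaries found under the "value" key of the API's dataset listing response.

```python
def find_datasets_by_name(datasets, name):
    """Return every dataset whose name matches; there may be more than one."""
    return [dataset for dataset in datasets if dataset.get("name") == name]
```

If the list contains more than one entry, taking the first one silently ignores the rest, so it may be worth checking the length before pushing data.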

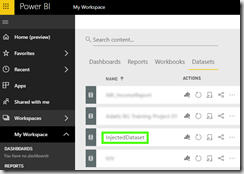

You can see the newly created dataset on powerbi.com:

You can create a report on top of this dataset and pin visuals to a dashboard (tiles will be updated automatically upon data change).

Now, let’s try to manipulate the metadata a bit – change the data type of the “rating” column in the DataFrame from double to string and update the Push dataset accordingly:

# Change the data type of the column 'rating' from double to string
dfInjectModified = dfInject.withColumn("rating", dfInject.rating.cast("string"))

# Get the datasetKey for the dataset (by name)
datasetKey = pbi.executePBIOperation("getdatasetbyname", groupId = groupId, datasetName = datasetName)[0]["id"]

# Update the metadata of the Power BI table
pbi.executePBIOperation("puttable", groupId = groupId, datasetKey = datasetKey, tableNames = tableNames, dataFrames = [dfInjectModified])

The only thing remaining now is to try and delete all the rows (it’s all or nothing – we can’t delete some of the rows) from the table in the dataset and then delete the entire dataset:

# Get the datasetKey for the dataset (by name)
datasetKey = pbi.executePBIOperation("getdatasetbyname", groupId = groupId, datasetName = datasetName)[0]["id"]

# Delete all rows from the table(s)
pbi.executePBIOperation("deleterows", groupId = groupId, datasetKey = datasetKey, tableNames = tableNames)

# Get the datasetKey for the dataset (by name)
datasetKey = pbi.executePBIOperation("getdatasetbyname", groupId = groupId, datasetName = datasetName)[0]["id"]

# Delete the dataset
pbi.executePBIOperation("deletedatasetbyid", groupId = groupId, datasetKey = datasetKey)
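Each of the operation names used in this post corresponds to one HTTP verb and one path of the Power BI REST API. The table below is a sketch of that correspondence, not the class's internal dispatch: OPERATIONS and resolve are hypothetical names, and base_url is assumed to be the datasets collection URL (with or without a group segment).

```python
# Operation name -> (HTTP method, path relative to the datasets collection URL)
OPERATIONS = {
    "postdataset": ("POST", ""),
    "getdatasets": ("GET", ""),
    "postrows": ("POST", "/{dataset}/tables/{table}/rows"),
    "puttable": ("PUT", "/{dataset}/tables/{table}"),
    "deleterows": ("DELETE", "/{dataset}/tables/{table}/rows"),
    "deletedatasetbyid": ("DELETE", "/{dataset}"),
}


def resolve(operation, base_url, dataset="", table=""):
    """Return the (method, url) pair for an operation name."""
    method, path = OPERATIONS[operation]
    return method, base_url + path.format(dataset=dataset, table=table)
```

Note that deleting rows and posting rows share the same URL and differ only in the HTTP verb, which is also why rows can only be deleted all at once.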

You can import all the notebook code shown in this post using this URL.

If you closely examine the Power BI REST API documentation here, you’ll find that the pbiDatasetAPI class is not completely finished yet. There’s more that needs to be done, like:

- Create measures with DAX
- Create table relationships
- Set cross-filtering behaviour
- etc.

I intend to update the class in the future so that all the features will be available. Check GitHub now and then for updates.

Hi Ivan,

Nice article.

I need your help resolving a “401 response” from the “executePBIRequest” method while posting the dataset (pbi.executePBIOperation("postdataset", groupId = groupId, datasetName = datasetName, tableNames = tableNames, dataFrames = dataFrames, reCreateIfExists = True)), whereas calling pbiDatasetAPI(username, password, application_id) gives me a correct token.

Hi,

Can you try executing executePBIOperation with the “getdatasets” method and see if you still get the 401 error?

Same error.

Btw, to get the token I am using the code below:

def getToken(self, tokenStringOnly = True):
    """Acquire an access token"""
    data = {
        "grant_type": "client_credentials",
        "tenant": "abcd",
        "client_id": "efgh",
        "scope": "openid",
        "client_secret": "sdasd",
        "redirect": "https://login.live.com/oauth20_desktop.srf",
        "resource": "https://analysis.windows.net/powerbi/api"
    }
    token = requests.post("https://login.microsoftonline.com/common/oauth2/token", data = data)
    assert token.status_code == 200, "Failed to retrieve token: {}".format(token.text)
    self.token = token
    self.accessToken = token.json()['access_token']
    print(self.accessToken)
    self.tokenExpirationDateTime = datetime.datetime.fromtimestamp(int(token.json()["expires_on"]))
    if tokenStringOnly:
        return self.accessToken
    else:
        return self.token

Next thing: since you’re using a different grant_type, could you please run print(pbi.token.json()) and let me know if the JSON structure looks like this:

{
    'token_type': 'Bearer',
    'scope': 'Content.Create Dataset.ReadWrite.All',
    'expires_in': '3599',
    'ext_expires_in': '3599',
    'expires_on': '1582127672',
    'not_before': '1582123772',
    'resource': 'https://analysis.windows.net/powerbi/api',
    'access_token': 'xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx'
}

Hi Ivan,

What should I put as username and password?