As I’m sure you’ve heard, CTP3 of SQL Server Denali was released yesterday, and can be downloaded here. Denali includes Data Quality Services (DQS), Microsoft’s new data cleansing and matching component that’s based on the Zoomix acquisition that occurred a couple of years back. Data Quality Services didn’t make it into the first CTP, but is now available, so I though it would be worth a quick blog post.

Installing Data Quality Services

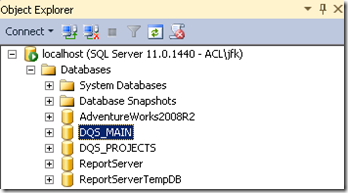

Data Quality Services is an option in the main install, but when you go to run the Data Quality Client, you’ll get a message stating that DQS is not installed. As far as I can tell, DQS needs to be installed manually, by running the DQSInstaller.exe, which you can find in the SQL Server Binn directory. This will create two SQL Server databases:

Data Quality Client

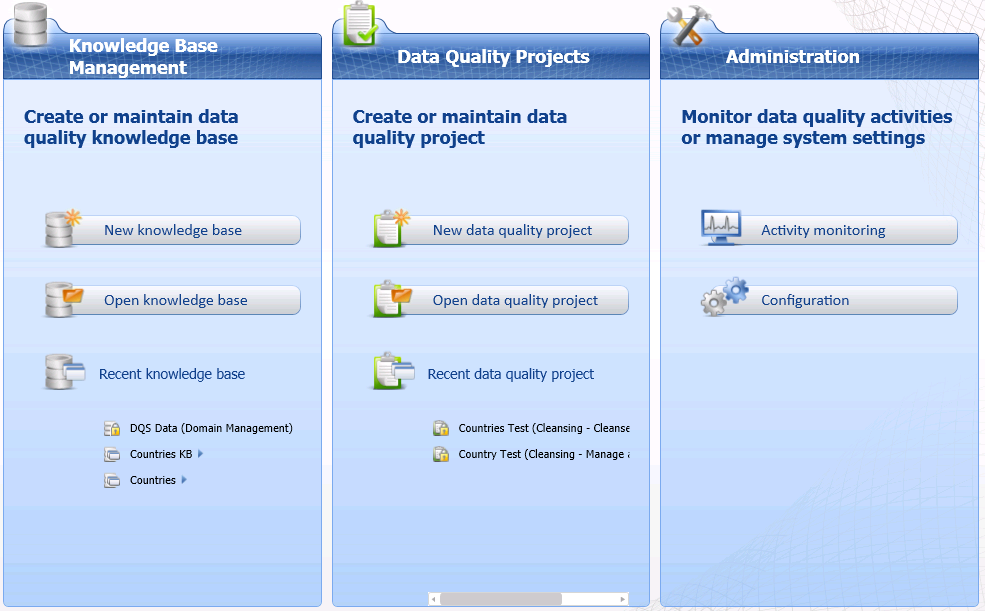

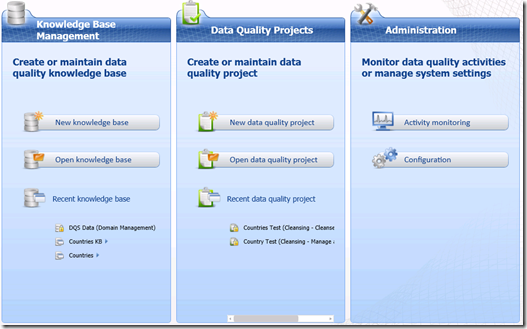

Once DQS is configured, you’ll be in a position to use the Data Quality Client, which is a windows application, available in 32 or 64 bit. Once you connect, you’ll get the following screen:

The knowledge base is the key to how DQS works, being utilised to determine the data quality of your source data. You can create your own knowledge base, using your own data, or even cloud-based data. For this example, I’m going to use the built in knowledge base called DQS Data.

As an example, I’ve created some data that I want to cleanse. It’s adventure works country data that I’ve put into a separate table that I’ve called dbo.Country.

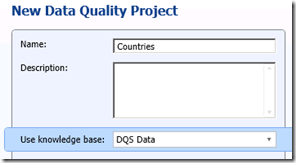

My task now is is to clean this data, which I can do by creating a data quality project:

I’ve called my project Countries, and I’ve picked the built-in DQS Data knowledge base, which I noticed contains reference data for countries. The activity that I’ve selected is Cleansing, and then I’ve clicked Create.

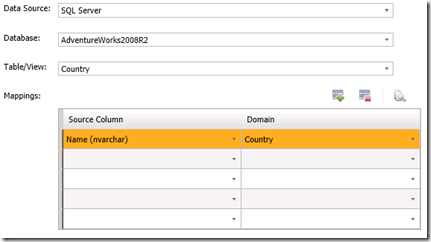

DQS will then prompt for mapping the source data to one of the domains in the Knowledge Base. Here I’ve mapping my country name to the Country domain from the knowledge base:

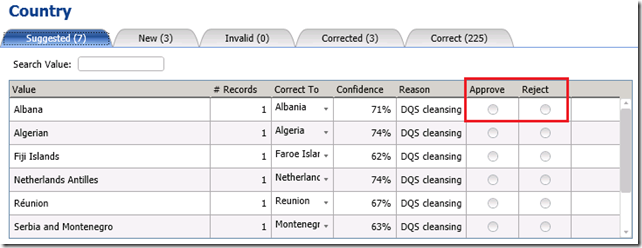

After clicking the Start button on the next screen, the cleaning process starts, which gives the following results:

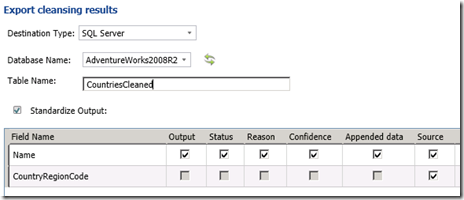

I put a couple of typos into my source data, such as changing Algeria to ‘Algerian’ and Albania to ‘Albana’. These were picked up by DQS, along with a few others, but a user has the opportunity to approve or reject, via the radio buttons shown above. I chose to approve the first two, and then clicked next. In the final screen, DQS allows you to output the cleaned data to a separate table, as shown below:

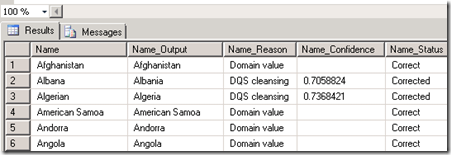

If you go into the table that DQS has created, you will see that there is a Name_Status column, which holds the cleaning status on a per record basis:

This can be used to update the source data, if required, and therefore address the data quality issues.

Summary

This has been a quick introduction to cleaning data with Data Quality Services. There’s plenty more to look at, and I hope to find time to do so at some point – In particular I’m keen to take a look at how DQS works with Master Data Services. I’ve noticed that there’s also a new MS DQS blog (http://blogs.msdn.com/b/dqs/) – I’m sure that will be a great resource for DQS learning.

Introduction to Data Wrangler in Microsoft Fabric

What is Data Wrangler? A key selling point of Microsoft Fabric is the Data Science

Jul

Autogen Power BI Model in Tabular Editor

In the realm of business intelligence, Power BI has emerged as a powerful tool for

Jul

Microsoft Healthcare Accelerator for Fabric

Microsoft released the Healthcare Data Solutions in Microsoft Fabric in Q1 2024. It was introduced

Jul

Unlock the Power of Colour: Make Your Power BI Reports Pop

Colour is a powerful visual tool that can enhance the appeal and readability of your

Jul

Python vs. PySpark: Navigating Data Analytics in Databricks – Part 2

Part 2: Exploring Advanced Functionalities in Databricks Welcome back to our Databricks journey! In this

May

GPT-4 with Vision vs Custom Vision in Anomaly Detection

Businesses today are generating data at an unprecedented rate. Automated processing of data is essential

May

Exploring DALL·E Capabilities

What is DALL·E? DALL·E is text-to-image generation system developed by OpenAI using deep learning methodologies.

May

Using Copilot Studio to Develop a HR Policy Bot

The next addition to Microsoft’s generative AI and large language model tools is Microsoft Copilot

Apr