Artificial Intelligence (AI) is seen by many as a great transformative technology. Researchers have been working on data science and AI for decades, and progress has accelerated over the last few years thanks to three main developments: the increased availability of data, growing cloud computing power and more powerful algorithms developed by AI researchers.

As AI hits a critical mass and becomes part of the fabric of organisational success, business leaders, policymakers, researchers, academics and those from non-governmental groups must work together to ensure that AI-based technologies are designed and deployed in a way that will earn the trust of the people who use them, as well as the individuals whose data is being collected.

Harnessing the power of AI for good will require international cooperation and a completely new approach to tackling difficult ethical questions relating to the following topics:

- Transparency: A lack of transparency in AI, or an inability to explain how or why a result has been presented, can undermine trust in the system.

- Security and Privacy: Large volumes of relevant data are essential for AI to make actionable decisions, which increases the chances of people’s data being collected, stored and manipulated without their consent. People won’t share their data unless they are confident that their privacy is protected, their data is secure and they are aware of how and where it is being used.

- Bias and Discrimination: Technology can be influenced by human conscious and unconscious bias or skewed, incomplete datasets. AI technologies need to benefit and empower everyone and incorporate and address the broad range of human needs and experiences.

- Accountability and Regulation: As more AI-driven systems are introduced, people will expect more accountability and responsibility. The question then becomes who is responsible for the use and potential misuse of AI systems.

- Automation and Human Control: Introducing automation through AI systems makes it easy to forget the impact that wrong predictions can have. The consequences of incorrect predictions need to be understood, especially when they can have a significant impact on human lives.

- Personal and Public Safety: As driverless cars become the norm, surveillance increases worldwide and the unknown threat of weaponised AI emerges, a key issue is how we can ensure human and societal safety.

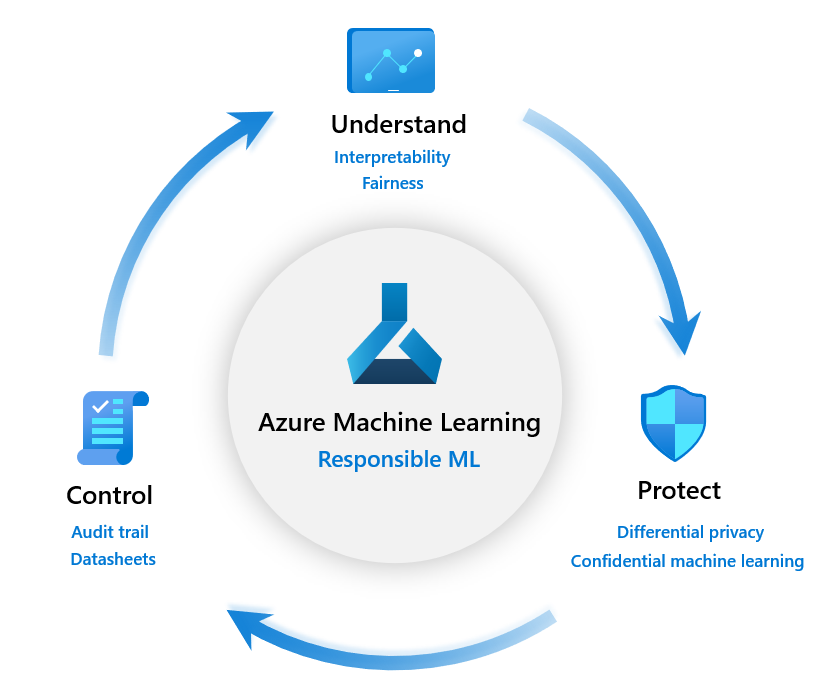

Microsoft’s Azure Machine Learning platform has been enabling AI for many organisations and streamlining the route to impactful, actionable insights. A fundamental building block of Azure Machine Learning is a commitment to responsible ML. Microsoft approaches responsible ML with a range of innovative technical solutions that include open source toolkits and functionality that is embedded into the core of the platform. Each of these technical capabilities aligns to one of the three pillars of responsible ML: Understand, Protect and Control.

Understand

Providing clear insight into the factors and reasoning behind a decision or prediction helps to mitigate unfairness. If there is a lack of transparency in AI or an inability to explain how or why a result has been presented, this can undermine the trust in the system. External factors such as human bias and poor data can influence the neutrality of AI, greatly reducing the model’s ability to generalise impartially.

Azure Machine Learning introduces the InterpretML toolkit, a set of capabilities that allow high-impact features to be analysed and understood, providing full visibility into the driving factors behind the resulting prediction. Taking this a step further, What-If analysis means that predominant features can be adjusted to understand how different values would have affected the outcome. Crucially, however, Azure Machine Learning also introduces Fairlearn, which means an identified “unfairness” can be mitigated, producing models that trade off accuracy against fairness at varying levels.
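To make the idea of measuring “unfairness” more concrete, here is a minimal sketch of one common fairness metric, the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. It is plain Python and does not use Fairlearn’s API; the function name, the toy predictions and the group labels are all illustrative assumptions.

```python
from collections import defaultdict


def demographic_parity_difference(predictions, groups):
    """Return (gap, rates): the spread in positive-prediction rates across groups.

    Hypothetical helper for illustration only; not part of Fairlearn.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        if pred == 1:
            positives[group] += 1
    # Share of positive predictions received by each group.
    rates = {g: positives[g] / totals[g] for g in totals}
    return max(rates.values()) - min(rates.values()), rates


# Toy data (assumed): 1 = favourable outcome, groups "A" and "B".
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5
```

A gap of 0 would mean every group receives favourable predictions at the same rate. A mitigation step would aim to shrink this gap, usually at some cost to accuracy.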

Protect

Complete datasets are essential for AI to make accurate decisions, and this data can often contain sensitive information about individuals. Whilst features for such models may require this type of information, it is paramount that people’s data is protected and that individuals cannot be identified from predictions. Microsoft’s WhiteNoise provides a differential privacy toolkit that allows data scientists to control the amount of information exposure and introduce statistical noise into datasets, meaning discrete data points can be obfuscated, reducing the possibility of re-identification.
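As a rough illustration of how statistical noise protects individuals, the sketch below applies the Laplace mechanism, a standard differential privacy technique, to a simple count query. It uses only the Python standard library and is not WhiteNoise’s API; the function names, the `epsilon` value and the toy ages are assumptions made for the example.

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution."""
    # The difference of two independent exponential draws with mean `scale`
    # follows a Laplace distribution with that scale.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(values, predicate, epsilon, rng):
    """Return a noisy count of the values matching `predicate`.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1 / epsilon, rng)


# Seeded only so the demo is repeatable; a real deployment would need a
# secure source of randomness and a vetted library.
rng = random.Random(42)
ages = [23, 35, 41, 29, 52, 61, 38, 45]  # toy data (assumed)

true_count = sum(1 for a in ages if a >= 40)
noisy_count = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(true_count)   # exact answer, never released
print(noisy_count)  # noisy answer that would be released instead
```

A smaller `epsilon` adds more noise and gives stronger privacy, while a larger `epsilon` gives more accurate answers with weaker protection.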

With the introduction of the GDPR and other similar acts, data privacy is high on the list of people’s concerns regarding artificial intelligence. Azure provides a highly secure environment in which data is secured both at rest and in transit, and artefacts produced through model training and analysis are also encrypted and secured. By using a secure platform such as Azure and obtaining consent to use an individual’s data, organisations can confidently fulfil their responsibilities when using sensitive information.

Control

Managing the end-to-end life-cycle of AI models using audit trails and logging provides confidence around data lineage, allows organisations to meet regulatory requirements and demonstrates their investment in responsible AI. Azure Machine Learning uses constant monitoring to track many data points, such as experiment run history, training parameters and model explanations, through the entire AI life-cycle. Developers can produce datasheets that summarise this information into easily digestible documents, which help ensure responsible decisions can be made at each stage. As questions around accountability start to become more critical, this audit information allows organisations to share each step of their responsible AI process.
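The general idea behind an audit trail can be sketched in a few lines. The example below records training runs as hash-chained entries so that later tampering is detectable. It is a hypothetical illustration using only the Python standard library, not how Azure Machine Learning stores run history; the function names, record fields and run values are all assumptions.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body):
    """Hash a record body in a stable (sorted-key) JSON form."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def make_audit_record(run_id, params, metrics, previous_hash=""):
    """Build one audit entry linked to the previous entry's hash."""
    record = {
        "run_id": run_id,
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "params": params,
        "metrics": metrics,
        "previous_hash": previous_hash,
    }
    record["hash"] = _digest(record)
    return record


def verify_chain(records):
    """Return True if no record was altered and the links are intact."""
    previous_hash = ""
    for record in records:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["previous_hash"] != previous_hash:
            return False
        if _digest(body) != record["hash"]:
            return False
        previous_hash = record["hash"]
    return True


# Hypothetical run history.
first = make_audit_record("run-001", {"learning_rate": 0.1}, {"accuracy": 0.91})
second = make_audit_record(
    "run-002", {"learning_rate": 0.05}, {"accuracy": 0.93},
    previous_hash=first["hash"],
)
trail = [first, second]
print(verify_chain(trail))     # True

# Editing a logged metric after the fact breaks verification.
tampered = [dict(first, metrics={"accuracy": 0.99}), second]
print(verify_chain(tampered))  # False
```

Chaining each entry to the one before it means a reviewer can confirm that the shared history is the one that was actually recorded.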

The new responsible ML innovations and resources from Microsoft have been designed to enable developers and data scientists to build more reliable, fairer and more trustworthy machine learning. To find out how Adatis can help you begin your journey with responsible ML, please contact us. Alternatively, there are some helpful resources from Microsoft:

- Learn more about responsible ML

- Get started with a free trial of Azure Machine Learning

- Learn more about Azure Machine Learning and follow the quickstarts and tutorials
