Polybase has now made it over to SQL Server 2016 and is available to use as part of the SQL Server 2016 CTPs. One of its capabilities is to connect to Azure Blob Storage, so this blog post gives an overview of how you can use Polybase to connect to data in Azure HDInsight.

Installing Polybase in SQL Server 2016

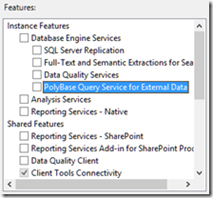

Polybase will appear in the standard feature list of the SQL Server 2016 CTP installs, alongside the other database engine features, so it’s just a selection box to install it:

Having said that, there’s one small limitation at the moment in the Polybase install, which is that only the SQL_Latin1_General_CP1_CI_AS and Latin1_General_100_CI_AS_KS_WS collations are supported, as explained in the following getting started guide. If you don’t have the correct collation and you try and use Polybase, then you may get the issue of the Polybase windows service stopping.

Azure HDInsight

For this post, I’m just going to use the sensor data sample that comes with Azure HDInsight when you provision a cluster. If you run through the following HDInsight Tutorial, then you can get up and running with an HDInsight cluster and use the samples.

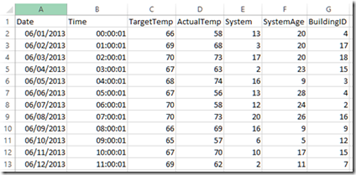

In this case, I’m using the sensor data analysis sample, which comes with a csv file called HVAC.csv – this is a set of temperature readings. The file looks as follows when opened in Excel:

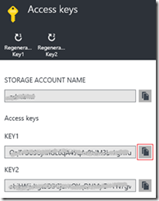

Within the Azure portal, if we go to the HDInsight cluster, then we need to note down the access keys that will allow Polybase to connect to Azure:

We can then copy one of the access keys, which we’ll need for later:

Polybase in SQL Server Management Studio

Over to SQL Server Management studio now, where we need to create an External Data Source, a File Format and an External Table to access the Azure table. Before creating these objects, we need to create a credential, where we will need to specify the Azure access key that we copied earlier:

--Enable traceflag for using a database-scoped credential. DBCC TRACEON(4631,-1); -- Create a db master key if one does not already exist, using your own password. CREATE MASTER KEY ENCRYPTION BY PASSWORD='MyP@ssword31'; --Enter the Azure account name and the acccess key as the secret CREATE CREDENTIAL AzureCred ON DATABASE WITH IDENTITY = 'accountname', SECRET='accesskeyhere'

Now that we’ve set up the credential, we need to create an external data source, so that Polybase knows where it needs to go to get the data. Notice how we’re referencing the credential that we created earlier:

CREATE EXTERNAL DATA SOURCE AzureDs WITH ( TYPE = HADOOP, --Specifiy the container name and account name LOCATION = 'wasbs://containerd@accountname.blob.core.windows.net/', --Specify the credential that we created earlier CREDENTIAL = AzureCred );

Now we need to specify the format of the file that we want to access. In this case, having looked at the CSV, its comma delimited, so the SQL required is as follows:

CREATE EXTERNAL FILE FORMAT CommaFormat WITH ( FORMAT_TYPE = DELIMITEDTEXT, FORMAT_OPTIONS (FIELD_TERMINATOR =',') );

Finally we need to create an external table – this is the object that we will be able to query with a T-SQL statement and consume data within the Azure HDInsight cluster. The syntax for this example is:

--Define the table structure CREATE EXTERNAL TABLE Hvac ( Date varchar(10), Time varchar(10), TargetTemp smallint, ActualTemp smallint, SystemID smallint, SystemAge smallint, BuildingID smallint ) WITH ( --Set the file to be the HVAC sensor sample file LOCATION='/HdiSamples/SensorSampleData/hvac/HVAC.csv', DATA_SOURCE = AzureDs, FILE_FORMAT = CommaFormat, --We will allow the header to be rejected REJECT_TYPE = VALUE, REJECT_VALUE = 1 );

Interestingly you can’t tell Polybase to skip a header record, as confirmed in the following connect issue, so I found that my statement was failing when trying to query the data. The solution is to use the REJECT_TYPE and REJECT_VALUE, which will allow us to reject a number of rows, as shown above.

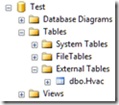

Once this is done, then we’ll see that we have an External Table:

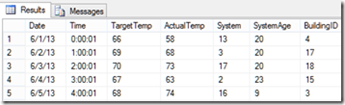

Querying it is just like any other table with a SELECT statement, which gives the following results in this case:

So that’s it – by creating the external data source, file format and external table, we can now use T-SQL in SQL Server 2016 to easily query data hosted within Azure HDInsight.

Introduction to Data Wrangler in Microsoft Fabric

What is Data Wrangler? A key selling point of Microsoft Fabric is the Data Science

Jul

Autogen Power BI Model in Tabular Editor

In the realm of business intelligence, Power BI has emerged as a powerful tool for

Jul

Microsoft Healthcare Accelerator for Fabric

Microsoft released the Healthcare Data Solutions in Microsoft Fabric in Q1 2024. It was introduced

Jul

Unlock the Power of Colour: Make Your Power BI Reports Pop

Colour is a powerful visual tool that can enhance the appeal and readability of your

Jul

Python vs. PySpark: Navigating Data Analytics in Databricks – Part 2

Part 2: Exploring Advanced Functionalities in Databricks Welcome back to our Databricks journey! In this

May

GPT-4 with Vision vs Custom Vision in Anomaly Detection

Businesses today are generating data at an unprecedented rate. Automated processing of data is essential

May

Exploring DALL·E Capabilities

What is DALL·E? DALL·E is text-to-image generation system developed by OpenAI using deep learning methodologies.

May

Using Copilot Studio to Develop a HR Policy Bot

The next addition to Microsoft’s generative AI and large language model tools is Microsoft Copilot

Apr