In the first blog of this series: http://tiny.cc/40p67y we looked at some tips to keep in mind when doing application development with particular focus on PowerApps. This blog will focus on an important point, more specific to PowerApps, which is worth keeping in mind during the development stage of a PowerApps application.

What is Delegation for?

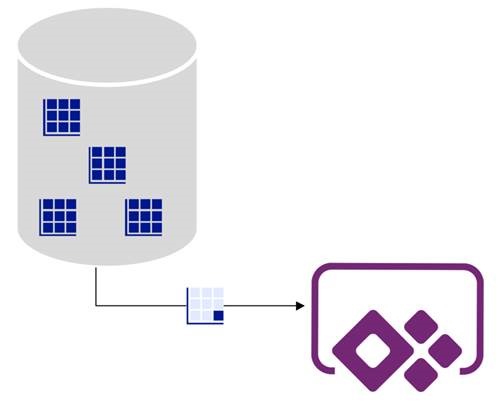

Delegation permits the data processing to be done on the datasource’s end, and get only the required data back to the application, in order to avoid shifting all the data in the application itself and process it within. However, some of the functions in PowerApps do not qualify for delegation. Delegation is only supported for certain tabular datasources only – including SQL. If the application being developed is foreseen to work with small datasets that do not exceed 2000 rows (now or in the future), this will not be a concern. In these instances, any datasource or function can be utilized without delegation risks, as if the function cannot be delegated there will still be the ability of the data being processed locally within the application.

Difference between a delegatable and non-delegatable

In short, what will be the implications if a function cannot be delegated? The data brought from the datasource will be much less (maximum 2000 rows) than what actually exist in the database, hence producing incomplete results (especially for lists or galleries) or inaccurate results (especially for calculations). Let’s go through an example of this, which architecturally might not be a feasible option to be implemented in a realistic application, but will help us understand better this concept. Let’s say a developer is doing a Form in a PowerApps that will take the details of the end user and submit it to a SQL database. In order to decrease chances of typos and allow better analysis on the gathered data, most of the dropdowns or lists within the Form are being loaded from another SQL database containing master data. And one of these lists is to be populated from the City table, allowing the user to choose from a pre-defined list of cities. The city table in the master database has thousands of records. Without going into the argument of whether this is a good approach or not, let’s say the developer populates a collection first directly from the master data table to be able to hook the list to it with improved performance. The SELECT query passed to the datasource as part of the Collect() function will include a TOP 2000 (beyond the developer’s control), and will only get back 2000 records of the full table. Likewise if a filter was being done or search directly on the database, it will only be considering the initial 2000 records out of the entire table in the datasource.

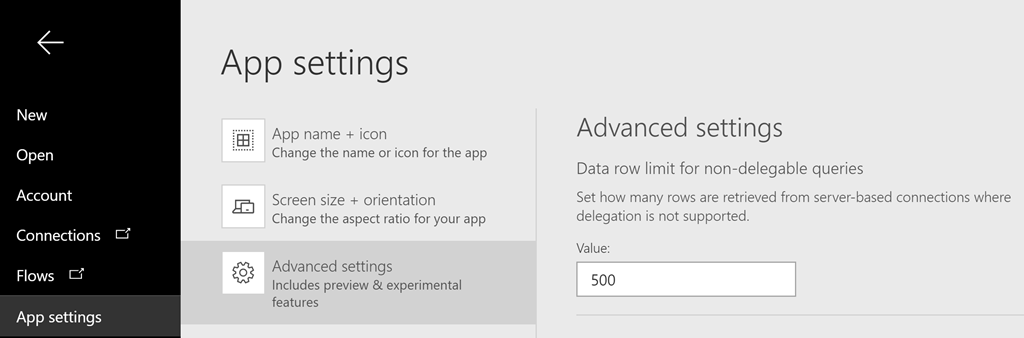

Data row limit for non-delegatable functions

It is important to note that by default the data row limit for non-delegatable queries is by default 500 (on creation of a new application). However, this can be increased to a maximum of 2000 (at the point of writing this blog) from the App Settings:

Delegation Warnings

Whilst developing the application, you might encounter warnings that will highlight that that particular action or behaviour might not work properly due to delegation. And this applies were that command will turn into a non-delegatable function. It is normally underlined with a light blue line, similar to the below. Here we are trying to get a dynamic list of all the products that have been inserted within this month and year:

The same will happen if we try to get the products inserted in the last 7 days:

The relevant control will also have a warning triangle displayed in Edit mode:

Proceeding with publishing this application will mean that when the data row limit is exceeded in the source, not all the dataset will be considered for this command (and hence the result will not be exact).

Possible workarounds?

In certain cases like the above delegation warnings, a different approach for the same command can do the trick. So if upon starting the action we are getting the Start Date once and putting that in a variable, whilst filtering against the range of that and today, then no delegation warnings will be given:

A similar solution would be to refer to a used Label on the canvas that is calling the DateAdd(Today(),-7) and referring to it in the Filter instead of the variable.

One other option to go around delegation is loading a collection at the start of the application from a large table (as long as it is controllably large, and not millions of rows), and looping through it a number of times (the maximum key in the datasource divided by the default data row limit of 500) to populate the collection with the entire dataset.

This can be done either directly in PowerApps or even using a SQL function similar to the following. In this table-valued function we are aiming to output a table with 3 columns. The first column will be the PointerID holding the list of times we are going to re-loop over a database to get up to the maximum row. For each PointerID we will be setting the RangeTo and Range From, when considering the data row limit in PowerApps (which is accepted as a parameter).

Following is the SQL code for the function:

CREATE FUNCTION [dbo].[GenerateReRunTable] (@Limit INT)

RETURNS @ReRunTable TABLE (

PointerID INT

,RangeFrom INT

,RangeTo INT

)

AS

BEGIN

–Replace [dbo].[LargeTable] with your table name and the [Id] column with your key

–Getting the minimum and maximum from the table to set the entire range

DECLARE @RangeFrom INT = (

SELECT MIN(L.Id)

FROM [dbo].[LargeTable] L

)

DECLARE @RangeTo INT = (

SELECT MAX(L.Id)

FROM [dbo].[LargeTable] L

);

–Calculating the number of reruns that need to be done by dividing the range of records with the data row limit in PowerApps

WITH MaxReRuns

AS (

SELECT ((@RangeTo – @RangeFrom) / @Limit) + 1 AS [MaxReRuns]

)

,ReRuns

AS (

–Generating the runs including the unique PointerID for each and their start and end range to then be picked by PowerApps for filteringSELECT 1 AS PointerID

,@RangeFrom AS [RangeFrom]

,CASE

WHEN @Limit < @RangeFrom

THEN @RangeFrom

ELSE @Limit

END AS [RangeTo]

UNION ALL

SELECT PointerID + 1

,[RangeFrom] + @Limit

,[RangeTo] + @Limit

FROM ReRuns

WHERE PointerID + 1 <= (

SELECT [MaxReRuns]

FROM MaxReRuns

)

)

INSERT INTO @ReRunTable

SELECT *

FROM ReRuns

OPTION (MAXRECURSION 10000)

RETURN

END

GO

A SQL View can then be created to get the results from the function. That way whenever we are loading the view, we will be getting the latest result based on the updated data, like for example:

CREATE VIEW [dbo].[ReRunsView]

AS

SELECT * FROM [dbo].[GenerateReRunTable] (500)

In this case, the [dbo].[LargeTable] had about 5203 rows. Hence the view would show the following output:

In PowerApps, we are then making use of this code (either in the OnVisible() section of the screen or in the OnSelect() of a button):

ClearCollect(ReRunsColl,'[dbo].[ReRunsView]’);

ForAll(ReRunsColl,

Collect (ProductListColl,

Filter (‘[dbo].[Ori Product List]’, ProductID >= RangeFrom && ProductID <= RangeTo)))

So, for each line in the SQL view (that has been loaded to the collection), we will be posting data to a PowerApps collection named ProductListColl all the contents from the SQL table named [dbo].[Ori Product List] in batches filtering on the specific range for that run (so that we bypass the delegation row limit, which will else disregard the rows above the row limit). Else, a normal Collect(ProductListColl, ‘[dbo].[Ori Product List]’) outside the loop would have only populated the collection with the maximum data row limit allowance of 500 – providing an incomplete dataset. This fully populated collection will also enable us to refer to the ProductListColl locally (as a replica of the entire [dbo].[Ori Product List] SQL table), increasing also the performance of data retrieval from this particular dataset.

We need to keep in mind though that eventually in PowerApps, we will still are using a ForAll() loop to read and hence doing multiple calls to the database when dealing with large datasets, increasing the chances of encountering issues which I will be explaining in later releases of this blog series. This will also have an effect on the performance (till the loop is being done and collection populated).

I also intend to finish a more practical example related to this to highlight better how delegation works in PowerApps in the upcoming blog post within this series – so please stay tuned for next release! In the meantime feel free to exchange any ideas or comments on this topic!

Introduction to Data Wrangler in Microsoft Fabric

What is Data Wrangler? A key selling point of Microsoft Fabric is the Data Science

Jul

Autogen Power BI Model in Tabular Editor

In the realm of business intelligence, Power BI has emerged as a powerful tool for

Jul

Microsoft Healthcare Accelerator for Fabric

Microsoft released the Healthcare Data Solutions in Microsoft Fabric in Q1 2024. It was introduced

Jul

Unlock the Power of Colour: Make Your Power BI Reports Pop

Colour is a powerful visual tool that can enhance the appeal and readability of your

Jul

Python vs. PySpark: Navigating Data Analytics in Databricks – Part 2

Part 2: Exploring Advanced Functionalities in Databricks Welcome back to our Databricks journey! In this

May

GPT-4 with Vision vs Custom Vision in Anomaly Detection

Businesses today are generating data at an unprecedented rate. Automated processing of data is essential

May

Exploring DALL·E Capabilities

What is DALL·E? DALL·E is text-to-image generation system developed by OpenAI using deep learning methodologies.

May

Using Copilot Studio to Develop a HR Policy Bot

The next addition to Microsoft’s generative AI and large language model tools is Microsoft Copilot

Apr