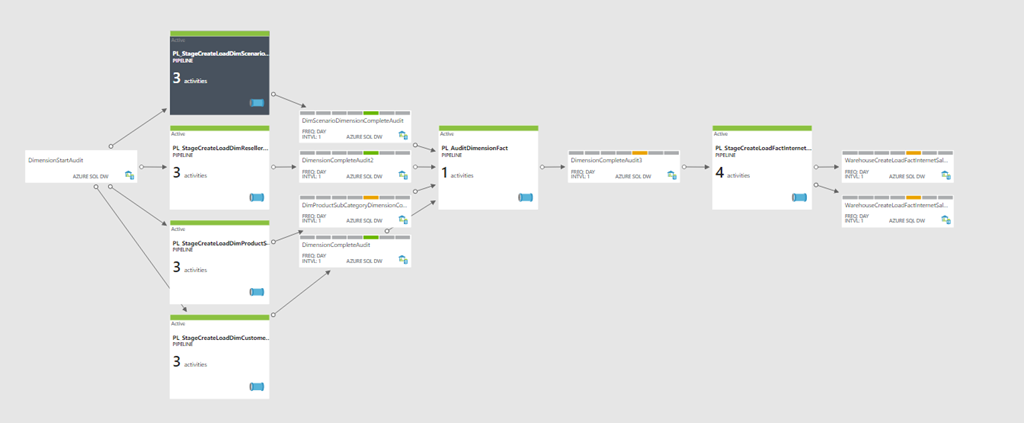

(Image 1 – Azure Data Factory – Transformation Pipeline)

Overview:

Azure Data Factory (ADF) is a big data processing platform from Microsoft on the Azure platform. For database developers, the obvious comparison is with Microsoft’s SQL Server Integration Services (SSIS). SSIS is an ETL tool (extract, transform, load); ADF is not. ADF is more akin to an ELT framework (extract, load, transform). While the terms are similar, the processes are very different. If you have worked in the data warehouse arena for a number of years, you will be very familiar with ETL, and switching to ELT can feel somewhat alien and cumbersome. As such, leaning on comparisons with SSIS will only hinder your understanding of the core concepts of ADF. ADF is not a cloud version of SSIS, as many would have you believe; it is both more and less.

On the topic of ETL, Dan Linstedt (the father of Data Vault) published an interesting article, “ETL is Dead! Long Live ELT” (www.datavaultalliance.com). In it, Linstedt argues that ETL is now defunct for a number of reasons, the primary one being big data. To enable fast processing of big data, we have seen a shift to parallel processing with tools such as Hadoop’s HDFS, Cassandra, Spark and, on the Microsoft side, Data Lake and Azure SQL Data Warehouse. With our data spread across many nodes, we can push the processing to the data, for example via a MapReduce job. ADF is designed to be a big data processing pipeline, so it makes sense to process the data where it lives rather than extracting it all, transforming it and then loading it in one process. Instead, we ingest the data, load it into a parallel storage engine (such as HDFS) and then transform it. If this is the first time you’re reading about ELT, I recommend both Linstedt’s article, in which he states “ETL truly is DEAD. Metadata & Lineage are NOT dead, ELT is the future”, and James Serra’s blog post “The difference between ETL and ELT” (http://www.jamesserra.com/archive/2012/01/difference-between-etl-and-elt/).
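To make the difference in ordering concrete, here is a minimal Python sketch. It is not ADF code: an in-memory SQLite database stands in for the target storage engine, and the table names and sample rows are made up for illustration. In the ETL version the transformation runs in the integration tool before loading; in the ELT version the raw rows are loaded first and the target engine does the transformation.

```python
import sqlite3

# Hypothetical raw rows standing in for an extracted source table.
source_rows = [("alice", "10"), ("bob", "25"), ("alice", "5")]


def etl(rows):
    """ETL: transform outside the target, then load only the finished result."""
    totals = {}
    for name, amount in rows:  # the transformation happens in the tool itself
        totals[name] = totals.get(name, 0) + int(amount)
    target = sqlite3.connect(":memory:")
    target.execute("CREATE TABLE sales_total (name TEXT, total INTEGER)")
    target.executemany("INSERT INTO sales_total VALUES (?, ?)", totals.items())
    return target.execute(
        "SELECT name, total FROM sales_total ORDER BY name"
    ).fetchall()


def elt(rows):
    """ELT: load the raw rows first, then ask the target engine to transform."""
    target = sqlite3.connect(":memory:")
    target.execute("CREATE TABLE raw_sales (name TEXT, amount TEXT)")
    target.executemany("INSERT INTO raw_sales VALUES (?, ?)", rows)  # load as-is
    target.execute(  # the transformation runs where the data lives
        "CREATE TABLE sales_total AS "
        "SELECT name, SUM(CAST(amount AS INTEGER)) AS total "
        "FROM raw_sales GROUP BY name"
    )
    return target.execute(
        "SELECT name, total FROM sales_total ORDER BY name"
    ).fetchall()
```

Both functions return the same totals; what changes is where the work is done. With a single SQLite file that hardly matters, but with data spread across many nodes it decides whether the data has to travel to the processing or the processing goes to the data.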

ADF is often described as an “orchestration” tool (https://azure.microsoft.com/en-gb/services/data-factory/) because of its two functions. With ADF being ELT and not ETL, the two main functions might not surprise you: moving data and transforming it. When I say transforming data, you might assume ADF does the transformation itself. However, the only action ADF actually performs is to issue a command for a transformation to happen (an EXEC for a stored procedure or the submission of a MapReduce job, for example). It is this issuing of commands that makes ADF an orchestration tool rather than a multipurpose ETL tool such as SSIS.
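The idea of “issuing a command rather than doing the work” can be sketched in a few lines of Python. This is a conceptual model only, not the ADF API: `ComputeResource`, `Orchestrator` and the procedure name `dbo.LoadSales` are all hypothetical names invented for the illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ComputeResource:
    """Hypothetical stand-in for a database or cluster that does the real work."""
    name: str
    received: list = field(default_factory=list)

    def run(self, command: str) -> str:
        # In a real system the heavy lifting would happen here, inside the resource.
        self.received.append(command)
        return f"{self.name} ran: {command}"


@dataclass
class Orchestrator:
    """Issues commands and keeps a log; it never touches the data itself."""
    log: list = field(default_factory=list)

    def issue(self, resource: ComputeResource, command: str) -> str:
        self.log.append((resource.name, command))
        return resource.run(command)


warehouse = ComputeResource("warehouse")
orchestrator = Orchestrator()
result = orchestrator.issue(warehouse, "EXEC dbo.LoadSales")
```

Notice that the orchestrator holds no rows and no transformation logic. Everything it knows is which command went to which resource, which is roughly the role the paragraph above describes for ADF.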

The data processing landscape is changing. While ADF is still a relatively new tool, a lot of development is ongoing and improvements are being made. ADF is available through the Azure Portal and through the ADF add-in for Visual Studio (https://azure.microsoft.com/en-us/blog/azure-data-factory-visual-studio-extension-for-authoring-pipelines/).

Now that we know what ADF does, let’s look at how it does it. ADF has three main components: linked services, datasets and pipelines.

Core Concepts:

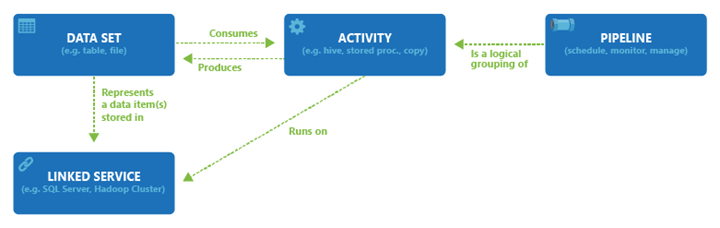

(Image 2 – High level ADF)

The diagram above shows how the parts of ADF slot together. Let’s follow it, starting with a linked service. A linked service is our connection to some form of resource. This could be an on-premises SQL database, a blob storage container, an Azure SQL Data Warehouse or something else. It is here that we want something to happen: extracting data, sinking data (an ADF term for storing data) or transforming data using stored procedures, MapReduce and so on. A dataset identifies which part of a linked service you want an activity to act on (a table, view or stored procedure). A dataset is not a database, but rather a table or a container in blob storage. A linked service can have one or many datasets. Activities are performed by a pipeline, which carries out one or more actions on one or more datasets.

Linked services

A linked service is best thought of as a logical data connection, allowing ADF to connect to an external resource. A linked service acts as a representation of a data store or a compute resource.

A data store is used as part of a data movement activity. A compute resource is used as part of a data transformation activity.

For more information on creating linked services and the different sources you can use, see the documentation listed under Links at the end of this post.

Datasets

A dataset represents a data structure in ADF, and each dataset belongs to a linked service. A linked service might be an Azure Blob storage account or an Azure SQL database; the dataset is a representation of the data within it (a folder of files in blob storage or a table in an Azure database), not the database itself.

For a full list of sources and sinks, see the links below.

Pipelines

Pipelines provide the power of ADF. There are two types of pipeline, which support our notion of ELT.

Data movement pipeline – The data movement pipeline does what its name implies: it moves data from one dataset to another. In the Azure documentation these two datasets are referred to as the source and the sink. In a data movement activity, data can be moved from any supported source to any supported sink. For example, you can move data from Azure Blob storage into a SQL database, or from HDFS to an Azure SQL Data Warehouse. This part handles the E and the L of our ELT: data is extracted and loaded. The only difference from SSIS is that at this point there is no T; no transformation has happened yet.

Data transformation pipeline – The data transformation activities again do as the name implies: transform. As ADF is an orchestration tool, a transformation could be a MapReduce job, a streaming job, a stored procedure or a Data Lake U-SQL query. To fully use ADF you will need to know how you’re storing your data (HDFS or Data Lake) and which language you want to use to process it. Knowing how ADF functions is only half the battle.

In relationship terms, you can think of the interconnection of linked services, pipelines and datasets as follows: a linked service has one or more datasets (a SQL database with many tables), and a pipeline performs an action on one or more datasets.
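If it helps to see those relationships written down, the sketch below models them with plain Python dataclasses. The class and field names are my own shorthand for this post, not the ADF JSON schema or any SDK, and the helper `datasets_by_service` is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinkedService:
    name: str
    kind: str  # e.g. "SQL database" or "Blob storage" (free text in this sketch)


@dataclass(frozen=True)
class Dataset:
    name: str
    linked_service: str  # every dataset belongs to exactly one linked service


@dataclass(frozen=True)
class Pipeline:
    name: str
    datasets: tuple  # names of the datasets the pipeline acts on


def datasets_by_service(services, datasets):
    """Group dataset names under their linked service, rejecting dangling references."""
    grouped = {service.name: [] for service in services}
    for dataset in datasets:
        if dataset.linked_service not in grouped:
            raise ValueError(
                f"{dataset.name} references unknown linked service "
                f"{dataset.linked_service}"
            )
        grouped[dataset.linked_service].append(dataset.name)
    return grouped


services = [LinkedService("sql", "SQL database")]
tables = [Dataset("customers", "sql"), Dataset("orders", "sql")]
pipeline = Pipeline("copy_tables", ("customers", "orders"))
```

Calling `datasets_by_service(services, tables)` groups both tables under the single `sql` linked service, which is the “one linked service, many datasets” relationship from the paragraph above. A dataset pointing at a linked service that does not exist raises an error.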

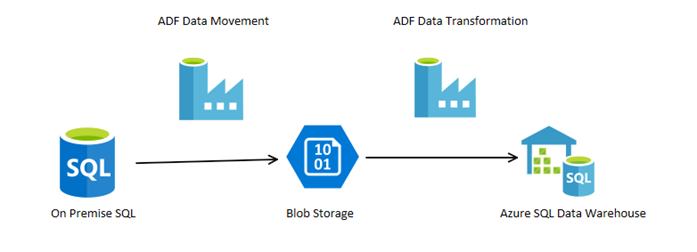

An Example:

Let’s imagine a process where you have an on premise SQL server box, you want to move multiple tables to blob storage, from there you then want to issue a stored procedure which will consume that data in to an Azure SQL data warehouse via PolyBase – As illustrated in the image below.

(Image 3 – Example Process)

To complete this we would need to create the following artefacts in ADF:

Linked services:

- On-premises SQL database

- Azure Blob storage

- Azure SQL Data Warehouse

Datasets:

- Table in the on-premises SQL database

- The blob container

- The stored procedure

Pipelines:

- Pipeline to move data from the SQL database to blob storage

- Pipeline to issue the stored procedure
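The two pipelines in this example have to run in a particular order: the stored procedure can only consume the data once it has landed in blob storage. One way to picture that is to infer the order from the datasets each pipeline reads and writes. The sketch below does this in plain Python (3.9 or later, for `graphlib`). The pipeline and dataset names are hypothetical, and `warehouse_table` is an assumed output that is not in the list above; this illustrates the idea and is not how you would author it in ADF.

```python
from graphlib import TopologicalSorter

# Hypothetical description of the example: what each pipeline reads and writes.
# Deliberately listed out of order to show that the order is derived, not typed in.
pipelines = {
    "issue_stored_procedure": {
        "inputs": ["blob_container"],
        "outputs": ["warehouse_table"],  # assumed name, for illustration only
    },
    "copy_sql_to_blob": {
        "inputs": ["onprem_table"],
        "outputs": ["blob_container"],
    },
}


def run_order(pipelines):
    """Order pipelines so that each runs after the pipelines producing its inputs."""
    graph = {}
    for name, spec in pipelines.items():
        # A pipeline depends on any other pipeline whose outputs it reads.
        graph[name] = {
            other
            for other, other_spec in pipelines.items()
            if other != name and set(other_spec["outputs"]) & set(spec["inputs"])
        }
    return list(TopologicalSorter(graph).static_order())
```

Here `run_order(pipelines)` puts `copy_sql_to_blob` before `issue_stored_procedure`, because the blob container is the output of the first and the input of the second.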

In later blogs we will look at creating this example in full. If you want to get started now, the links at the bottom of the page will guide you through setting up ADF.

How to get started:

There are three ways to develop for ADF.

- Azure portal – Semi-rich IDE. All in the Azure portal.

- Visual Studio with the ADF add-in – Richer development environment. Allows for source control of code through TFS and automated deployment of changes. This is needed because the Azure portal can sometimes get caught in a syntax loop where nothing will deploy. VS will remove and redeploy artefacts, which makes this a lot easier. You will sometimes have to delete your ADF and start again; if your code is in VS, all you need to do is deploy.

- PowerShell – Anything you can do in the portal you can do in PowerShell.

My development preference is the second and third options. I use Visual Studio for all my code, which is source controlled with TFS, and then I use PowerShell to build my ADF environment.

Thanks for reading this introduction to ADF. I hope you have found it somewhat insightful. We have a whole series of blogs looking in depth at different aspects and design patterns for loading a data warehouse with Azure Data Factory. You can read more in our next blog looking at setting up your first Azure Data Factory.

Links:

Learning path: https://azure.microsoft.com/en-gb/documentation/learning-paths/data-factory/

Documentation: https://docs.microsoft.com/en-us/azure/data-factory/

Introduction to ADF [YouTube]: https://www.youtube.com/watch?v=_hOYAT69yIw

ADF overview [Channel9]: https://channel9.msdn.com/Blogs/Azure/Azure-Data-Factory-Overview
