After creating an integration account that has partners and agreements, we are ready to create a business-to-business (B2B) workflow for our logic app with the Enterprise Integration Pack.

The scenarios covered are:

Adatis -> generate a 940 X12 message -> encrypt that message and send it to the ABC AS2 and SFTP endpoints

ABC -> receive the 940 sent by Adatis over AS2 -> decrypt the AS2 message -> apply an XSL stylesheet

The EDI 940 Warehouse Shipping Order transaction set is used to instruct remote warehouses to ship orders. It is commonly used by a seller, such as a manufacturer or wholesaler, to authorize a warehouse to make a shipment to a buyer, such as a retailer.

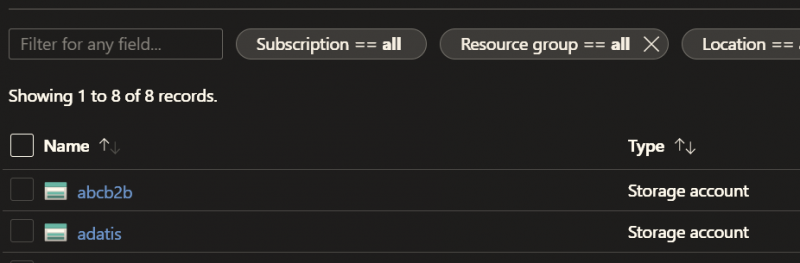

Before starting with logic apps, we need to create two storage accounts.

In each storage account we need to create an edi container:

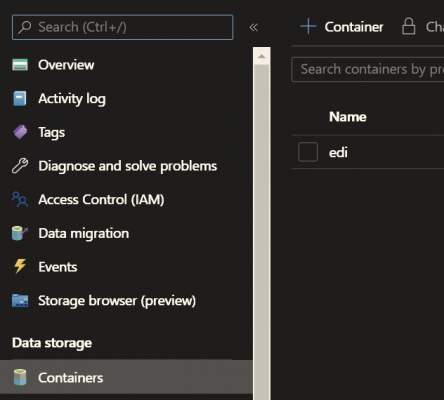

Next, we need to create queues in the same storage accounts, as listed below:

The data exported from our core system will be in XML format:

<?xml version="1.0" encoding="utf-8"?>

<ns0:X12_00401_940 xmlns:ns0="http://schemas.microsoft.com/BizTalk/EDI/X12/2006/adatis_abc">

<ns0:W05>

<W0501>N</W0501>

<W0502>SON0245519</W0502>

<W0503>821337</W0503>

<W0504>001001</W0504>

<W0505>1962694</W0505>

<W0506>CL</W0506>

</ns0:W05>

……………………………………………………

<ns0:W76 xmlns:ns0="http://schemas.microsoft.com/BizTalk/EDI/X12/2006/adatis_abc">

<ns0:W7601>-15</ns0:W7601>

<ns0:W7602>444.00</ns0:W7602>

<ns0:W7603>LB</ns0:W7603>

</ns0:W76>

</ns0:X12_00401_940>

Basically, we can generate the XML export from any database type; the only condition we need to meet is that it is formatted according to the EDI document type we wish to process. Once the XML is exported in our on-premises environment, we need to upload it to Azure Blob Storage. This can be achieved in many ways, and we will not cover that topic here.
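Before uploading, it can be useful to confirm that an export really has the expected shape. The following is only a minimal sketch, not part of the original workflow: it uses Python's standard library to check that a document is well-formed and that its root element is the 940 element in the namespace shown in the sample above. The function name `is_940_export` and the shortened sample document are our own assumptions for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace URI and root element name taken from the sample export above.
X12_NS = "http://schemas.microsoft.com/BizTalk/EDI/X12/2006/adatis_abc"
EXPECTED_ROOT = "X12_00401_940"


def is_940_export(xml_bytes: bytes) -> bool:
    """Return True if the document is well-formed and its root is the 940 element.

    Hypothetical helper: this only checks the root element, not the full schema.
    """
    try:
        root = ET.fromstring(xml_bytes)
    except ET.ParseError:
        return False
    # ElementTree spells namespaced tags as "{uri}localname".
    return root.tag == f"{{{X12_NS}}}{EXPECTED_ROOT}"


# Shortened, illustrative document built from elements of the sample above.
sample = (
    f'<ns0:X12_00401_940 xmlns:ns0="{X12_NS}">'
    "<ns0:W05><W0501>N</W0501><W0502>SON0245519</W0502></ns0:W05>"
    "</ns0:X12_00401_940>"
).encode("utf-8")

if __name__ == "__main__":
    print(is_940_export(sample))
```

A check like this catches only the most basic problems; validating against the agreement's schema is still left to the integration account.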

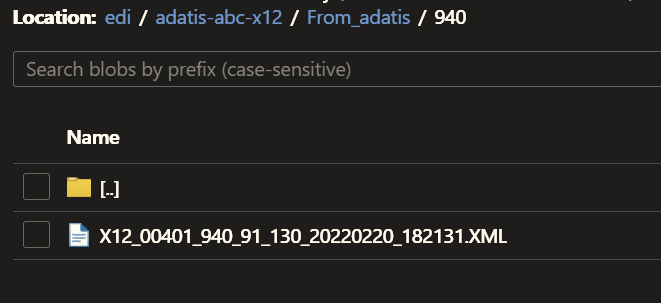

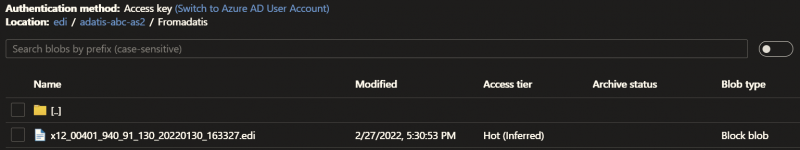

The goal is to have our physical data export uploaded into the edi container of the Adatis storage account:

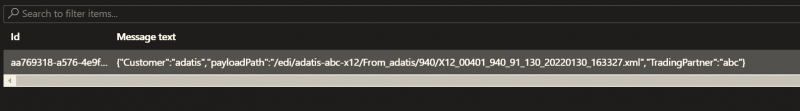

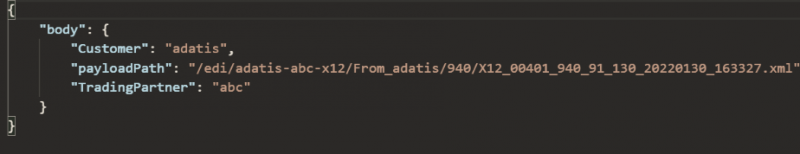

We also need to post a message to the queue, which will trigger our workflow execution.

The content of the queue message is just:

- the name of the customer, which is Adatis

- the physical location inside Azure of our exported XML document: /edi/adatis-abc-x12/From_adatis/940/X12_00401_940_91_130_20220130_163327.xml

- the name of the trading partner: ABC
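To make the shape of such a message concrete, here is a small sketch of how it could be built and read back. The post does not specify the wire format, so the JSON encoding and the field names `customer`, `blobPath` and `tradingPartner` are assumptions for illustration only; the values come from the description above.

```python
import json


def build_queue_message(customer: str, blob_path: str, trading_partner: str) -> str:
    """Serialize the three pieces of metadata into one queue message body.

    The JSON layout and key names are hypothetical, not the original design.
    """
    return json.dumps(
        {
            "customer": customer,
            "blobPath": blob_path,
            "tradingPartner": trading_partner,
        }
    )


def parse_queue_message(body: str) -> tuple:
    """Read the same three values back, as the first workflow actions would."""
    data = json.loads(body)
    return data["customer"], data["blobPath"], data["tradingPartner"]


message = build_queue_message(
    "Adatis",
    "/edi/adatis-abc-x12/From_adatis/940/X12_00401_940_91_130_20220130_163327.xml",
    "ABC",
)

if __name__ == "__main__":
    print(message)
```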

To make the process of sending and delivering EDI messages more flexible, we will split it into two main flows, each built from a combination of two logic apps.

The first part covers the case when we need to send EDI messages:

- Logic app which converts the XML to EDI format – x12-out-abc

- Logic app to deliver to the AS2 endpoint – as2-out-abc or

- Logic app to deliver to the SFTP endpoint – sftp-out-abc

The second part covers the case when we need to receive EDI messages:

- Logic app to receive from the AS2 endpoint – as2-in-adatis or

- Logic app to receive from the SFTP endpoint – sftp-in-adatis

- Logic app which converts the EDI message into XML – x12-in-adatis

The queue is used to trigger workflow execution and to pass some metadata between the individual logic apps. It also gives quick insight into what has been processed and which stage our message is in, as well as the physical location of that message inside our blob storage.

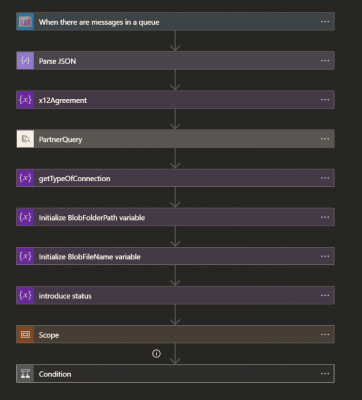

Our logic app, which processes our XML once it has been uploaded into Azure, will look like this:

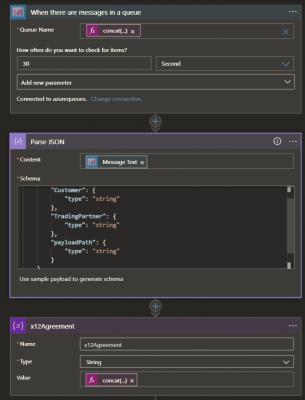

The first group of actions triggers the workflow when a message is added to the queue, parses the queue message, and loads the data into variables:

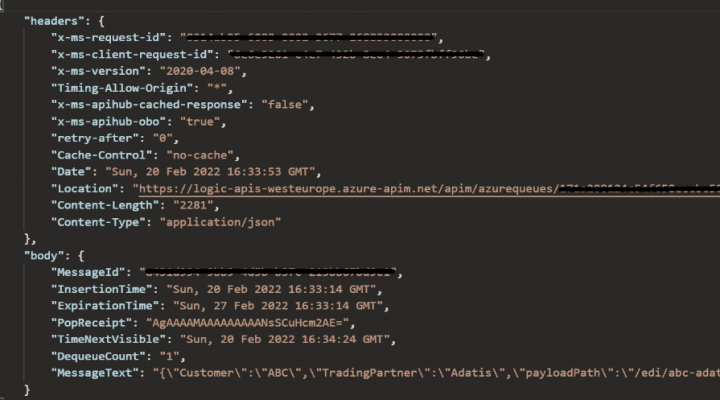

The outputs of these actions are as follows:

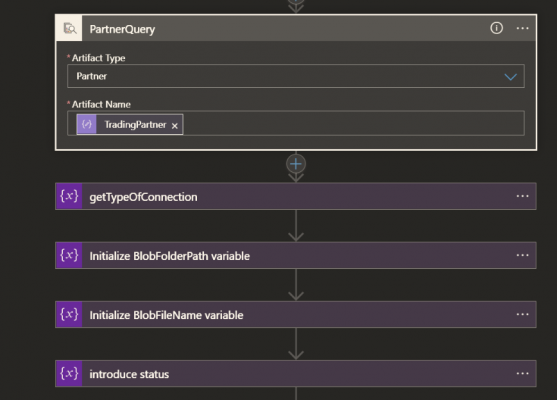

Next, we call the integration account to get some details about the trading partner and construct a couple of variables which will be used later:

The output from the PartnerQuery action is:

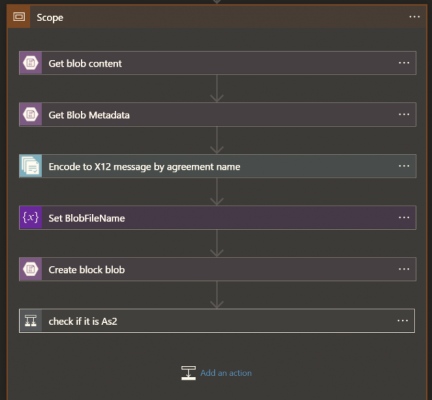

The next group of actions uses the information collected in the previous steps to construct the EDI message from the imported XML file:

Once the XML is converted into EDI format, we create a new physical record of the converted EDI message in our blob store.

We keep both formats of the same message for audit purposes and error investigation. If a customer has a data discrepancy, this lets us quickly and easily provide information about what was exported from the core system and compare it with what the trading partner received and what we generated as an output message.

Our message, converted from XML into EDI, will look like:

ISA*00* *00* *ZZ*AdatisEdi *ZZ*ABCEdi *220227*1530*U*00401*000000122*1*P*:~

GS*OW*AdatisEdi*ABCEdi*220227*1530*122*T*00401~

ST*940*0122~

W05*N*SON0245519*821337*001001*1962694*CL~

N1*ST*AMELIAS GROCERY OUTLET*6*0100020582~

N3*43 GRAYBILL RD~

N4*LEOLA*PA*17540-1910*USA~

N1*WH*IL-1*9*517~

W66*DF*M***ABC MARK*****SEDA~

LX*1~

W01*15*CA**MG*0*UK*20140527~

G69*LB~

LX*2~

W01*96*CA**MG*0*UK*20140527~

G69*LB~

W76*-15*444.00*LB~

SE*15*0122~

GE*1*122~

IEA*1*000000122~
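The structure of the message above can be checked with a few lines of code. The sketch below is only an illustration, not part of the logic app: it splits the ST to SE portion of the sample into segments (terminated by `~`) and elements (separated by `*`), then verifies that the control numbers in ST and SE match and that the count in the SE segment equals the number of segments. The helper names are our own.

```python
from typing import List

SEGMENT_TERMINATOR = "~"
ELEMENT_SEPARATOR = "*"

# The ST..SE transaction set from the sample message above.
TRANSACTION_SET = """
ST*940*0122~
W05*N*SON0245519*821337*001001*1962694*CL~
N1*ST*AMELIAS GROCERY OUTLET*6*0100020582~
N3*43 GRAYBILL RD~
N4*LEOLA*PA*17540-1910*USA~
N1*WH*IL-1*9*517~
W66*DF*M***ABC MARK*****SEDA~
LX*1~
W01*15*CA**MG*0*UK*20140527~
G69*LB~
LX*2~
W01*96*CA**MG*0*UK*20140527~
G69*LB~
W76*-15*444.00*LB~
SE*15*0122~
"""


def split_segments(edi: str) -> List[List[str]]:
    """Split raw X12 text into segments, each a list of elements."""
    segments = []
    for raw in edi.split(SEGMENT_TERMINATOR):
        raw = raw.strip()  # drop the line breaks between segments
        if raw:
            segments.append(raw.split(ELEMENT_SEPARATOR))
    return segments


def check_transaction_set(segments: List[List[str]]) -> bool:
    """Check the ST/SE envelope of one transaction set.

    SE01 must equal the number of segments from ST to SE inclusive,
    and the control number in SE02 must match the one in ST02.
    """
    if not segments or segments[0][0] != "ST" or segments[-1][0] != "SE":
        return False
    st, se = segments[0], segments[-1]
    return st[2] == se[2] and int(se[1]) == len(segments)


segments = split_segments(TRANSACTION_SET)

if __name__ == "__main__":
    print(len(segments), check_transaction_set(segments))
```

A quick check like this is handy during error investigation, when we compare the stored EDI message against the original XML export.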

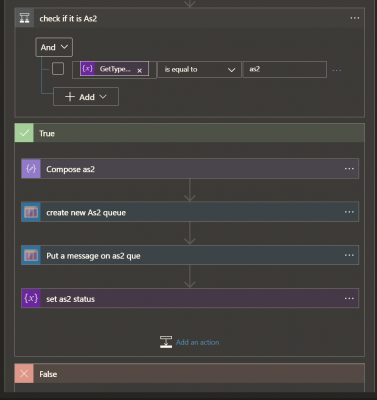

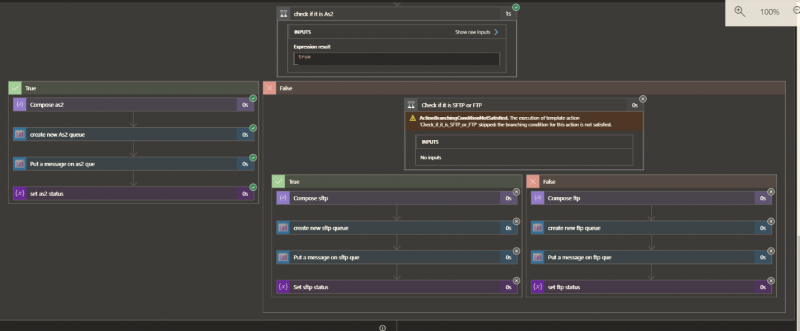

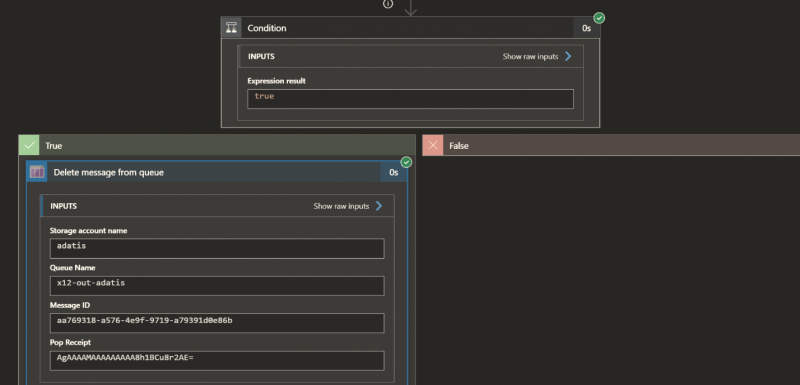

Following the logic of our first logic app, we reach the last group of actions, which checks the type of our trading partner's endpoint in the integration account and calls the second logic app of our first flow group by posting a notification to its message queue, which triggers its execution.

Here we dynamically choose whether the endpoint is AS2, SFTP, or FTP. Every endpoint type needs a separate logic app because different logic and settings need to be applied, but only one type can be executed at a time.

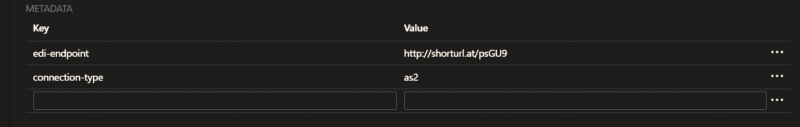

When we set up the integration account, we create one variable under the partner's settings called connection-type.

Inside that variable we define the type of the trading partner's endpoint: as2, sftp, or ftp.

Our workflow checks the endpoint type and dynamically chooses the next logic app to be executed:

The last action in the current logic app flow is to post a message to the second logic app's message queue, which triggers its execution, and to delete the queue message from the current logic app's queue. This helps us see very quickly which stage our message is in.

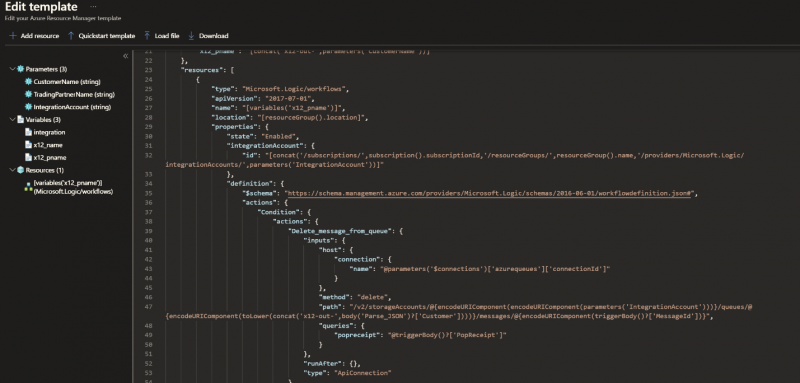

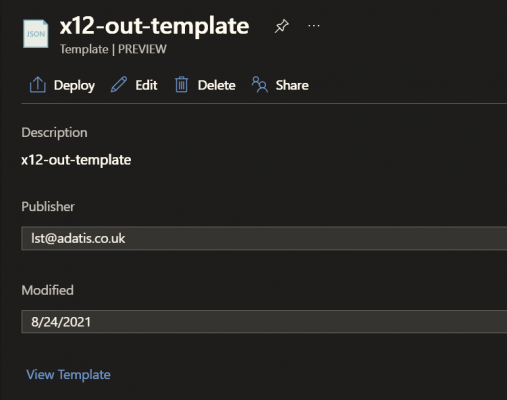
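The routing decision described above boils down to a lookup on the connection-type value. The sketch below shows that idea in plain Python; it is an illustration of the logic, not the logic app definition itself. The two target names are the logic apps listed earlier. The post does not name an FTP logic app, so this sketch deliberately treats ftp as unsupported rather than inventing one.

```python
# Maps the partner's connection-type value to the logic app whose queue
# should receive the next message. Names are taken from the list earlier
# in this post; no FTP logic app is named there, so none is mapped here.
NEXT_LOGIC_APP = {
    "as2": "as2-out-abc",
    "sftp": "sftp-out-abc",
}


def next_logic_app(connection_type: str) -> str:
    """Pick the delivery logic app for a given connection-type value."""
    key = connection_type.strip().lower()
    if key not in NEXT_LOGIC_APP:
        raise ValueError(f"no delivery logic app configured for {connection_type!r}")
    return NEXT_LOGIC_APP[key]


if __name__ == "__main__":
    print(next_logic_app("as2"))
```

Failing loudly on an unknown type keeps a misconfigured partner from silently dropping messages.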

To make this workflow reusable, meaning that every new trading partner integration will reuse the same workflow, we can generate an ARM template and create a parameter for all dynamic values.

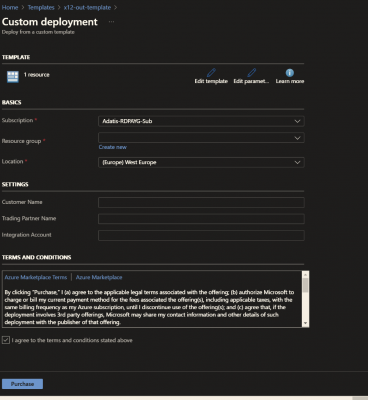

When a new customer requests a trading partner integration, we only need to fill in the customer name, the trading partner name, and the name of the integration account to be used.
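As a rough illustration, the three values could be written to an ARM deployment parameters file with a short script like the one below. The parameter names `customerName`, `tradingPartnerName` and `integrationAccountName`, and the integration account name used in the example, are hypothetical; they must match whatever names the generated template actually declares.

```python
import json


def build_parameters(customer: str, trading_partner: str, integration_account: str) -> dict:
    """Build the content of an ARM deployment parameters file.

    The three parameter names are assumptions for illustration only.
    """
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "customerName": {"value": customer},
            "tradingPartnerName": {"value": trading_partner},
            "integrationAccountName": {"value": integration_account},
        },
    }


# "adatis-integration-account" is a made-up name for this example.
params = build_parameters("Adatis", "ABC", "adatis-integration-account")

if __name__ == "__main__":
    print(json.dumps(params, indent=2))
```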

Deployment from the ARM template will look like:
