The second release of Azure Data Factory (ADF) includes several new features that vastly improve the quality of the service. One of these is the ability to pass parameters down the pipeline into datasets. In this blog I will show how we can use parameters to manipulate a generic pipeline structure to copy a SQL table into a blob. Whilst this is a new feature for ADF, this blog assumes some prior knowledge of ADF (V1 or V2).

Creating the Data Factory V2

One other major difference with ADF V2 is that we no longer need to chain datasets and pipelines to create a working solution. Whilst the concept of dependency still exists, it is no longer needed to run a pipeline; we can now just run pipelines ad hoc. This is important for this demo because we want to run the job once, check it succeeds and then move on to the next table instead of managing any data slices. You can create a new V2 data factory either from the portal or using this command in PowerShell:

$df = Set-AzureRmDataFactoryV2 -ResourceGroupName -Location -Name

If you are familiar with the PowerShell cmdlets for ADF V1 then you can make use of nearly all of them in V2 by appending “V2” to the end of the cmdlet name.

ADF V2 Object Templates

Now that we have a Data Factory to work with, we can start deploying objects. In order to make use of parameters we first need to specify how we will receive the value of each parameter. Currently there are two ways:

1. Via a parameter file specified when you invoke the pipeline

2. Using a lookup activity to obtain a value and pass that into a parameter

This blog will focus on the simpler method using a parameter file. Later blogs will demonstrate the use of the lookup activity.

When we invoke our pipeline, I will show how to reference that file, but for now we know we are working with a single parameter called “tableName”.

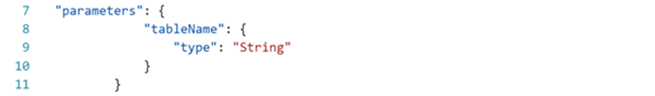
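As a rough sketch, a parameter file for this pipeline could be as small as the JSON below. Only the parameter name “tableName” comes from this blog; the value `dbo.Customer` is a hypothetical table name chosen for illustration.

```json
{
  "tableName": "dbo.Customer"
}
```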

Now we can move on to our pipeline definition which is where the parameter values are initially received. To do this we need to add an attribute called “parameters” to the definition file that will contain all the parameters that will be used within the pipeline. See below:
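A minimal sketch of what that “parameters” attribute might look like in a pipeline definition is shown here. The pipeline name `CopyTableToBlob` is an assumption for illustration, and the activities array is left empty to keep the focus on the parameter declaration.

```json
{
  "name": "CopyTableToBlob",
  "properties": {
    "parameters": {
      "tableName": {
        "type": "String"
      }
    },
    "activities": []
  }
}
```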

The same concept needs to be carried through to the dataset that we want to feed the parameters into. Within the dataset definition we need to have the “parameters” attribute specified in the same way as in the pipeline.

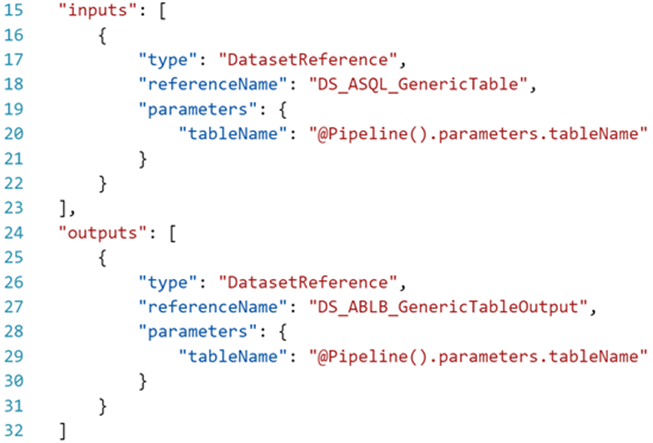
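For readers who cannot see the screenshot, a dataset declaring the same parameter might be sketched as follows. The dataset name `BlobOutput`, the linked service name `BlobStorageLinkedService` and the folder path `exports` are all hypothetical; the part that matters is that the “parameters” block mirrors the one in the pipeline.

```json
{
  "name": "BlobOutput",
  "properties": {
    "type": "AzureBlob",
    "linkedServiceName": {
      "referenceName": "BlobStorageLinkedService",
      "type": "LinkedServiceReference"
    },
    "parameters": {
      "tableName": {
        "type": "String"
      }
    },
    "typeProperties": {
      "folderPath": "exports"
    }
  }
}
```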

Now that we have declared the parameters on the necessary objects, I can show you how to pass data into a parameter. As mentioned before, the pipeline parameter will be populated by the parameter file; the dataset parameter, however, needs to be populated from within the pipeline. Instead of simply referring to a dataset by name as in ADF V1, we now need the ability to supply more data, so each entry in the “inputs” and “outputs” sections of our pipeline is built as follows:

- Firstly, we declare the reference type, hence “DatasetReference”.

- We then give the reference name. This could be parameterised if needed.

- Finally, for each parameter in our dataset (in this case there is only one, called “tableName”) we supply the corresponding value from the pipeline's parameter set. We can get the value of a pipeline parameter using the “@pipeline().parameters.” syntax followed by the parameter name, for example “@pipeline().parameters.tableName”.
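Putting those three points together, a copy activity could be sketched roughly as below. The activity name and the dataset names `SqlTableInput` and `BlobOutput` are assumptions for illustration, as is the choice of `SqlSource` and `BlobSink`; the pipeline parameter is read with the expression `@pipeline().parameters.tableName` and handed to each dataset's own “tableName” parameter.

```json
{
  "name": "CopySqlTableToBlob",
  "type": "Copy",
  "inputs": [
    {
      "referenceName": "SqlTableInput",
      "type": "DatasetReference",
      "parameters": {
        "tableName": "@pipeline().parameters.tableName"
      }
    }
  ],
  "outputs": [
    {
      "referenceName": "BlobOutput",
      "type": "DatasetReference",
      "parameters": {
        "tableName": "@pipeline().parameters.tableName"
      }
    }
  ],
  "typeProperties": {
    "source": {
      "type": "SqlSource"
    },
    "sink": {
      "type": "BlobSink"
    }
  }
}
```

Keeping the dataset parameter name identical to the pipeline parameter name is just a convenience here; the two are declared separately and only linked by this mapping.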

At this point we have received the values from a file and passed them through the pipeline into a dataset, so now it is time to use that value to manipulate the behaviour of the dataset. Because we are using the parameter to define our file name, we can use its value as part of the “fileName” attribute, as shown below:

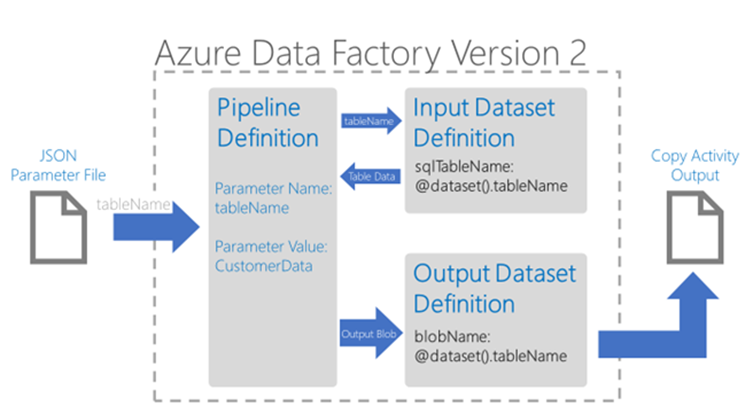
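In text form, the relevant part of the blob dataset might look something like this sketch. Inside a dataset, a parameter is read with `dataset()` rather than `pipeline()`. The `exports` folder and the `.csv` extension are assumptions made for the example, not details taken from the original definition.

```json
{
  "typeProperties": {
    "folderPath": "exports",
    "fileName": {
      "value": "@concat(dataset().tableName, '.csv')",
      "type": "Expression"
    }
  }
}
```

With a parameter value such as the hypothetical `dbo.Customer`, this expression would resolve to a file called `dbo.Customer.csv`.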

Now we have the ability to input a table name and our pipeline will fetch that table from the database and copy it into a blob. Perhaps a diagram to help provide the big picture:

Now we have a complete working pipeline that is totally generic, meaning we can change the parameters we feed in but should never have to change the JSON definition files. A pipeline such as this could have many uses, but in the next blog I will show how we can use a ForEach loop (another ADF V2 feature) to copy every table from a database, still using only a single pipeline and some parameters.

P.S. Use this link to see the entire JSON script used to create all the objects required for this blog: http://bit.ly/2zwZFLB
