The Lookup transform must be one of the most used transformations in SQL Server Integration Services (SSIS), if not the most used. Pretty much every ETL routine will make use of the component at some point, especially when loading fact tables. Given the nature of fact and dimension tables, these lookups can be tasked with loading large amounts of data into memory to perform high-speed lookups. A few optimisations on these lookups can greatly improve the efficiency of your ETL.

Choosing the Best Cache Option

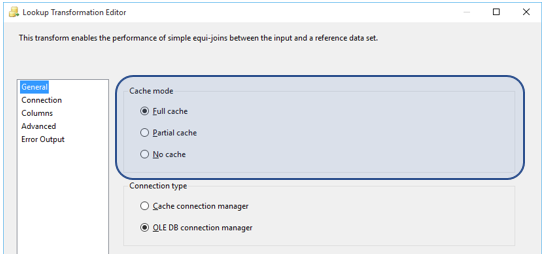

One of the most important options featured on the Lookup Transform is the Cache Mode. By understanding the differences between these choices, you can be sure to pick the option that best suits your ETL needs.

Using the Full Cache Option (Default)

The Full Cache option tells SSIS to load all of your reference data into memory and then perform its lookups against that data. This has the benefit of only hitting the database once to fetch the data, but it can delay start-up because all of the lookup data has to be loaded before the data flow starts processing rows. You can optimise this by using a SQL command to fetch only the columns you need but, as you can imagine, a dimension with more than 1 million rows will take some time to cache, and this is before you have even done any lookups!

Be aware that if you cannot fit your entire reference set into memory, your package will FAIL! SSIS cannot spool the cache out to disk when Full Cache is chosen, and there is no graceful way of handling this error either. Luckily there is a great blog on how to calculate the cache size here.

Use this option when:

- You want to avoid excessive traffic to your database or it is remote

- You have a small reference table that can be cached quickly

- You can fit your whole lookup dataset into memory
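The behaviour described above can be imitated outside SSIS. The following is only a rough Python sketch, not SSIS itself: it uses an in-memory SQLite table as a stand-in for the dimension, and the `DimCustomer` schema, the `CustomerCode` column and the function names are all assumptions made up for illustration. It shows the two ideas from this section: one query up front, and selecting only the columns the lookup needs.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory stand-in for a dimension table (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE DimCustomer ("
        "CustomerKey INTEGER PRIMARY KEY, CustomerCode TEXT, Notes TEXT)"
    )
    conn.executemany(
        "INSERT INTO DimCustomer VALUES (?, ?, ?)",
        [(i, f"C{i:04d}", "wide column we do not need") for i in range(1, 1001)],
    )
    return conn


def charge_full_cache(conn):
    """Hit the database once, fetching only the lookup column and the key."""
    rows = conn.execute("SELECT CustomerCode, CustomerKey FROM DimCustomer")
    return dict(rows)  # CustomerCode -> CustomerKey, held entirely in memory


def lookup(cache, customer_code):
    """Return the surrogate key, or None when there is no match."""
    return cache.get(customer_code)


conn = build_demo_db()
cache = charge_full_cache(conn)       # the up-front cost: every row is loaded
fact_rows = ["C0001", "C0500", "C9999"]
keys = [lookup(cache, code) for code in fact_rows]  # no further database traffic
```

Every lookup after the initial load is a dictionary access, which is why the whole reference set has to fit in memory for this approach to work.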

Using the Partial Cache Option

The Partial Cache is a good halfway house. This option tells SSIS to fetch the data at runtime, meaning a quicker start-up time but also a lot more queries to the database. Any value not currently in the cache is queried for in the database and then stored in the cache, making it available for future lookups. The efficiency here comes from not having to charge the cache on package start-up and from keeping previously fetched items in memory. One very specific benefit of the partial cache shows up when your lookup table is being written to during the data flow: the full cache wouldn’t see those rows, as it only queries the database once prior to execution. The partial cache, however, works well here, as any value not already cached is queried against the table as it stands at runtime.

Additional performance can be gained by making use of the Miss Cache. This option (found on the Advanced page) allows a portion of the cache to be reserved for rows that don’t have a match in the database. SSIS will then know not to look for these rows again, saving unnecessary queries.

Use this option when:

- You have a small reference set not worth charging a full cache for

- You want to optimise your cache for more frequently accessed rows

- Your reference table is being updated within your data flow
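To make the partial cache and the Miss Cache more concrete, here is a small Python model of the idea. It is a sketch under assumptions, not how SSIS is implemented: the `PartialCacheLookup` class, the `DimCustomer` table and its columns are hypothetical, and the real component also has a cache size limit that this toy ignores. It counts how many times the database is queried with and without a miss cache.

```python
import sqlite3


class PartialCacheLookup:
    """Toy model of a partial cache: query on first sight, remember the answer."""

    def __init__(self, conn, use_miss_cache=True):
        self.conn = conn
        self.use_miss_cache = use_miss_cache
        self.matches = {}    # lookup value -> surrogate key
        self.misses = set()  # lookup values known to have no match
        self.queries = 0     # number of trips to the database

    def lookup(self, code):
        if code in self.matches:
            return self.matches[code]
        if self.use_miss_cache and code in self.misses:
            return None  # known non-match, skip the query
        self.queries += 1
        row = self.conn.execute(
            "SELECT CustomerKey FROM DimCustomer WHERE CustomerCode = ?", (code,)
        ).fetchone()
        if row is None:
            if self.use_miss_cache:
                self.misses.add(code)
            return None
        self.matches[code] = row[0]
        return row[0]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE DimCustomer (CustomerKey INTEGER, CustomerCode TEXT)")
conn.executemany("INSERT INTO DimCustomer VALUES (?, ?)", [(1, "A"), (2, "B")])

incoming = ["A", "B", "A", "Z", "Z", "A"]

with_miss = PartialCacheLookup(conn, use_miss_cache=True)
keys = [with_miss.lookup(code) for code in incoming]
queries_with_miss = with_miss.queries        # A, B and Z are each queried once

without_miss = PartialCacheLookup(conn, use_miss_cache=False)
for code in incoming:
    without_miss.lookup(code)
queries_without_miss = without_miss.queries  # Z is queried twice

# A row written to the table mid-run is still found, because it is queried on demand.
conn.execute("INSERT INTO DimCustomer VALUES (3, 'D')")
late_key = with_miss.lookup("D")
```

One thing to note in this sketch: a value that has already landed in the miss cache would not be queried again, even if a matching row were inserted later.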

Using the No Cache Option

Finally, No Cache literally means that no data will be cached. This means that every single row will be looked up against the database, even if that row has already been looked up before.

Use this option when:

- You only need to process a small number of rows

- You have severe memory limitations

- For some reason, the partial cache wasn’t a better option
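For comparison, the No Cache behaviour can be modelled in a few lines of Python. As before this is only an illustrative sketch: the `DimCustomer` table and the `no_cache_lookup` function are hypothetical names, and SQLite stands in for the real database. The point is simply that the number of queries equals the number of incoming rows, repeats included.

```python
import sqlite3


def no_cache_lookup(conn, codes):
    """Look every incoming value up against the database, with no memory at all."""
    queries = 0
    keys = []
    for code in codes:
        queries += 1  # one round trip per row, even for repeated values
        row = conn.execute(
            "SELECT CustomerKey FROM DimCustomer WHERE CustomerCode = ?", (code,)
        ).fetchone()
        keys.append(row[0] if row else None)
    return keys, queries


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE DimCustomer (CustomerKey INTEGER, CustomerCode TEXT)")
conn.executemany("INSERT INTO DimCustomer VALUES (?, ?)", [(1, "A"), (2, "B")])

keys, queries = no_cache_lookup(conn, ["A", "A", "A", "B"])
```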

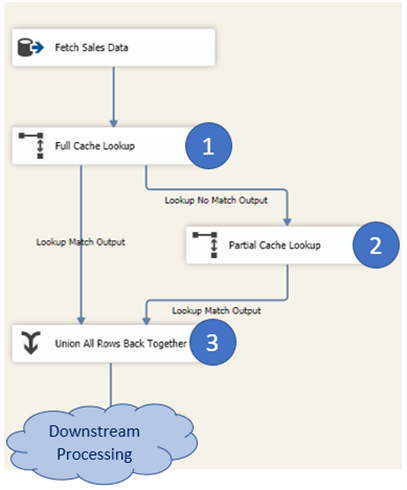

The Cascading Lookup Pattern

A nice way of optimising your lookups is to combine a full and a partial cache, creating what is known as a Cascading Lookup. The idea here is to use the full cache to store a subset of your reference data that is frequently looked up and then a partial cache for the rest. This works because the full cache shouldn’t take too long to charge, as it’s only a subset of the data, but will still have the benefits of being in memory. The partial cache is used for the larger, less frequently looked-up data: it has no charging time but can still use its cache to avoid querying the database twice for the same lookup value. An added benefit is that if the reference table is written to during the data flow, these rows will be caught by the partial cache lookup.

In this example, we have a large customer dimension, but we know that only 500 of those customers make a lot of purchases and so we can use a cascading lookup to optimise this dataflow.

- First, add a Lookup and choose the Full Cache option. Then use a select statement to get only the data that is most looked up against, in this case the 500 most loyal customers. Remember to redirect non-matching rows to the no match output!

- Now add a second Lookup on that no match output, choose the Partial Cache option and use a select statement to get the rest of the dataset, excluding the loyal customers.

- Finally, union the rows from both lookups back together so that you have a single result set.
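The three steps above can be sketched in Python to show the flow of rows. This is a simplified model rather than SSIS: the `IsLoyal` flag used to pick out the frequent customers, the table layout and the function names are all assumptions for the sake of the example, and five loyal customers stand in for the 500.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE DimCustomer ("
    "CustomerKey INTEGER PRIMARY KEY, CustomerCode TEXT, IsLoyal INTEGER)"
)
conn.executemany(
    "INSERT INTO DimCustomer VALUES (?, ?, ?)",
    [(i, f"C{i}", 1 if i <= 5 else 0) for i in range(1, 101)],
)

# Step 1: full cache, charged once, holding only the frequently used subset.
full_cache = dict(
    conn.execute("SELECT CustomerCode, CustomerKey FROM DimCustomer WHERE IsLoyal = 1")
)

# Step 2: partial cache over the rest of the dimension, filled on demand.
partial_cache = {}
stats = {"db_queries": 0}


def partial_lookup(code):
    if code in partial_cache:
        return partial_cache[code]
    stats["db_queries"] += 1
    row = conn.execute(
        "SELECT CustomerKey FROM DimCustomer WHERE CustomerCode = ? AND IsLoyal = 0",
        (code,),
    ).fetchone()
    if row is None:
        return None
    partial_cache[code] = row[0]
    return row[0]


def cascading_lookup(codes):
    matched, no_match = [], []
    for code in codes:
        if code in full_cache:
            matched.append((code, full_cache[code]))
        else:
            no_match.append(code)  # redirected to the second lookup
    second = [(code, partial_lookup(code)) for code in no_match]
    # Step 3: union the two outputs; the original row order is not preserved.
    return matched + second


result = cascading_lookup(["C1", "C50", "C2", "C50", "C1"])
```

In this run the loyal customers never touch the database after the initial load, and the one non-loyal customer costs a single query despite appearing twice.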

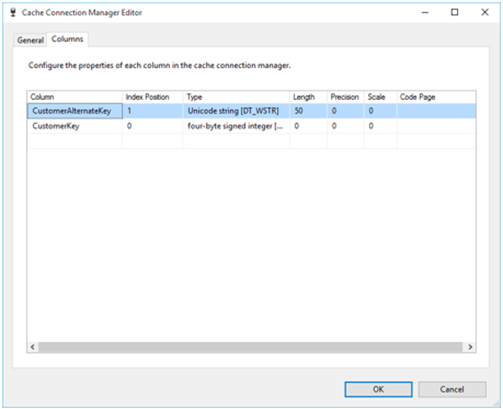

The Cache Connection Manager

Another option that is available is to use the Cache Connection Manager. This is akin to having a persisted full cache that can be referenced several times throughout your package. Better yet, you can convert a package cache connection manager to a project cache connection manager so that it can be referenced by other packages in the project! This offers multiple benefits including:

- Charging your lookup caches in parallel to speed up package start-up

- Caches can be loaded at any given time (although this must be before the lookups need them), so if you need to load the dimension during your ETL, you can.

- Caches will only need to be charged once for your whole ETL project, meaning you can avoid similar lookups querying the same data twice.
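As a loose analogy for these benefits, the Python sketch below charges two caches in parallel and then reuses them for two separate fact loads. Nothing here is SSIS API: the `fetch_*` functions are hypothetical stand-ins for source queries, and a plain dictionary of dictionaries plays the role of the shared caches.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_customers():
    """Stand-in for a query against the customer dimension (hypothetical data)."""
    return [("C1", 1), ("C2", 2)]


def fetch_products():
    """Stand-in for a query against the product dimension (hypothetical data)."""
    return [("P1", 10), ("P2", 20)]


SOURCES = {"Customer": fetch_customers, "Product": fetch_products}


def charge_all_caches(sources):
    """Load every cache concurrently, then hand back one shared set of caches."""
    with ThreadPoolExecutor() as pool:
        futures = {name: pool.submit(fetch) for name, fetch in sources.items()}
        return {name: dict(future.result()) for name, future in futures.items()}


def resolve_keys(fact_rows, caches):
    """Swap business codes for surrogate keys using the already-charged caches."""
    return [
        (caches["Customer"].get(cust), caches["Product"].get(prod), amount)
        for cust, prod, amount in fact_rows
    ]


caches = charge_all_caches(SOURCES)  # charged once...

# ...and reused by more than one fact load without querying the sources again.
sales = resolve_keys([("C1", "P2", 9.99)], caches)
returns = resolve_keys([("C2", "P1", -5.0)], caches)
```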

The way to implement a Cache Connection Manager is seriously easy!

- To start you need to create a data flow task and then drag a data source onto the design surface.

- Below that, add a Cache Transform and connect the two up. Once you have configured your data source (remembering to select only the columns you absolutely need!), open the editor for the cache transform. Where prompted for a cache connection manager, select New.

- On the General page, you can add a name and description.

- On the Columns page, you will need to do some configuration. For the column or columns you want to look up on, you should add an Index Position. The column with the lowest index number greater than 0 will be looked up first, followed by any other columns with an Index Position greater than 0. Any columns with an Index Position of 0 are columns that you want to pull into the data flow; in this case that is the CustomerKey. You can also set data types here.

- Once that’s complete you can map the columns in the cache transform.

- Finally, you will now see a new connection manager in your Connection Managers window. Right click on the new cache connection manager and click Convert to Project.
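The Index Position setting on the Columns page is easier to picture with a small model. The Python below is only an illustration of the rule described above (positions greater than 0 form the lookup key in ascending order, position 0 marks columns returned to the data flow); the `SourceSystem` column and the `build_cache` function are hypothetical and not part of SSIS.

```python
def build_cache(rows, index_positions):
    """Build a lookup keyed on the indexed columns, ordered by Index Position."""
    key_columns = sorted(
        (name for name, pos in index_positions.items() if pos > 0),
        key=lambda name: index_positions[name],
    )
    value_columns = [name for name, pos in index_positions.items() if pos == 0]
    cache = {}
    for row in rows:
        key = tuple(row[name] for name in key_columns)
        cache[key] = {name: row[name] for name in value_columns}
    return key_columns, cache


# 0 = returned to the data flow, 1..n = lookup columns in order.
index_positions = {"CustomerKey": 0, "CustomerCode": 1, "SourceSystem": 2}

rows = [
    {"CustomerKey": 1, "CustomerCode": "C1", "SourceSystem": "CRM"},
    {"CustomerKey": 2, "CustomerCode": "C1", "SourceSystem": "Web"},
]

key_columns, cache = build_cache(rows, index_positions)
match = cache.get(("C1", "Web"))      # both indexed columns must match
no_match = cache.get(("C1", "Shop"))  # unknown combination
```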

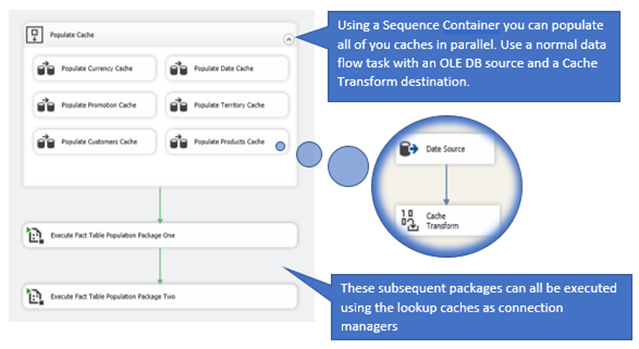

An implementation of a cache connection manager might have a parent package like the below. Within each “Populate XXXXXX Cache” task is a data flow that extracts source data and loads it into a cache transform. The fact table load packages below that can now reference all the dimensional data using the cache connection manager.

In conclusion, there is a lot of optimisation that can be done with regard to lookups and their caching options. Not all of these need to be implemented all of the time; it is more of a case-by-case decision, but knowing that there are options is half the battle.
