TensorFlow is an open source software library for Deep Learning that was released by Google in November 2015. It is Google's second-generation deep learning system, succeeding DistBelief.

Deep learning is a subcategory of machine learning. It uses layers of interconnected neurons to find patterns in raw data and to build representations of that data. See my previous blog post on neurons and networks here: https://adatis.co.uk/Introduction-to-Deep-Learning-Neural-Network-Basics

The networks learn automatically by adapting and correcting themselves to fit the patterns observed in the data. One of their key advantages over conventional machine learning is that they don't require the domain expertise and manual feature engineering that conventional approaches usually depend on.

Installation

TensorFlow can be installed on Mac OS X, Ubuntu or Windows computers by downloading precompiled binaries, or on Mac OS X and Ubuntu by downloading the source code and compiling it locally. All of the options can be found at: https://www.tensorflow.org/install/

The compiled versions are provided either with CPU support only or with GPU support. I chose CPU support only initially, as this is the simplest install path and therefore the fastest way to get up and running. It wasn't long before I needed to upgrade to the GPU version, though: training deep learning models is computationally expensive, so you either need to install the GPU version or be a very patient person. For comparison, I ran the same experiment on two machines:

- Machine 1: 4-core i7 2.8GHz CPU, 16 GB RAM, SSD, no external graphics card

- Machine 2: 4-core i3 3.3GHz CPU, 4 GB RAM, HDD, NVIDIA GTX 760 graphics card (an entry-level card)

Running on the GPU, the experiment took 426 seconds on Machine 2. On Machine 1, with no GPU, it took 3,466 seconds, roughly eight times longer.

Luckily, upgrading from the CPU version to the GPU version is simple, so it's not worth getting hung up on if you just want to dip in.

The TensorFlow libraries are best accessed through the Python API, but if you prefer you can access them through C, Java or Go.

Let's Get Started

TensorFlow represents machine learning algorithms as computational graphs. A computational graph is made up of a set of entities (commonly called nodes) that are connected by edges. To understand the graph, it's sometimes helpful to think of the data as flowing from node to node along the edges, being operated on as it goes.
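To make the nodes-and-edges picture concrete, here is a minimal pure-Python sketch (not TensorFlow code, and no TensorFlow install needed). It assumes a toy representation in which the graph from the a + b example in this post is a dictionary mapping each node to the nodes whose outputs it consumes; the `edges` and `flow_order` helpers are hypothetical names chosen for illustration. `flow_order` lists the nodes in an order in which data can flow along the edges, so every node comes after all of its inputs.

```python
# Toy graph: each node maps to the list of nodes whose outputs it consumes.
# An edge runs from each input node to the node that consumes it.
graph = {
    "a": [],          # constant, no inputs
    "b": [],          # constant, no inputs
    "c": ["a", "b"],  # add(a, b)
}


def edges(g):
    """Return (source, destination) pairs, one per edge in the graph."""
    return [(src, dst) for dst, srcs in g.items() for src in srcs]


def flow_order(g):
    """Order nodes so each appears after all of its inputs (Kahn's algorithm)."""
    remaining = {node: len(srcs) for node, srcs in g.items()}
    consumers = {node: [] for node in g}
    for src, dst in edges(g):
        consumers[src].append(dst)
    ready = [node for node, count in remaining.items() if count == 0]
    order = []
    while ready:
        node = ready.pop(0)
        order.append(node)
        for dst in consumers[node]:
            remaining[dst] -= 1  # one more input of dst is now available
            if remaining[dst] == 0:
                ready.append(dst)
    return order


print(edges(graph))       # [('a', 'c'), ('b', 'c')]
print(flow_order(graph))  # ['a', 'b', 'c']
```

Data enters at the nodes with no inputs (the constants) and flows towards c, which is the direction the edges point.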

So let's look at a couple of simple TensorFlow programs, then we can describe the components they create and what those components do. Below is a simple example taken from an interactive Python session:

```python
>>> import tensorflow as tf
>>> hello = tf.constant('Hello, TensorFlow!')
>>> sess = tf.Session()
>>> print(sess.run(hello))
```

The first line imports TensorFlow.

The second line builds the computational graph; the node called "hello" is defined in this step.

The third line creates a TensorFlow session.

The final line executes the graph to calculate the value of the node "hello", which is passed as a parameter to the session's run method.

Let’s now consider this other example:

```python
>>> import tensorflow as tf
>>> a = tf.constant(3, tf.int64)
>>> b = tf.constant(4, tf.int64)
>>> c = tf.add(a, b)
>>> sess = tf.Session()
>>> result = sess.run(c)
>>> print(result)
7
```

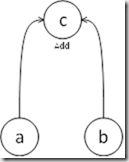

In this example 3 nodes are created a, b and c. This would be represented in the computational graph as below:-

In this graph, node c is defined as an Add operation on the values from nodes a and b. When the session's run method is called to compute c, TensorFlow works backwards through the graph, computing the preceding nodes a and b so that it can compute node c.
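The backwards walk described above can be sketched in a few lines of plain Python. This is a toy model under stated assumptions, not how TensorFlow is implemented: each hypothetical `Node` holds an operation and a list of input nodes, and the hypothetical `evaluate` function starts at the requested node and recursively computes its inputs before applying the node's own operation.

```python
class Node:
    """A toy graph node: an operation plus the nodes it takes input from."""

    def __init__(self, name, op, inputs=()):
        self.name = name
        self.op = op                # callable applied to the input values
        self.inputs = list(inputs)


def evaluate(node, trace):
    """Compute a node by first computing the nodes it depends on."""
    input_values = [evaluate(parent, trace) for parent in node.inputs]
    trace.append(node.name)         # record the order in which nodes finish
    return node.op(*input_values)


# Build the graph: nothing is computed yet.
a = Node("a", lambda: 3)
b = Node("b", lambda: 4)
c = Node("c", lambda x, y: x + y, inputs=[a, b])

# Ask for c: the walk starts at c and works back to a and b.
trace = []
print(evaluate(c, trace))  # 7
print(trace)               # ['a', 'b', 'c']
```

The request starts at c, but a and b finish first, because c cannot be computed until its inputs have values.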

Because the computational graph is constructed before it is "run" in a session, TensorFlow programs are often described as consisting of two sections:

- Building a computational graph

- Executing the computational graph

TensorFlow Principal Elements

We can break TensorFlow down into a set of principal elements:

- Operations

- Tensors

- Sessions

Operations

Operations are represented by nodes that combine or transform the data flowing through the graph. They can have zero or more inputs (a constant is an example of an operation with zero inputs), and they can produce zero or more outputs. An operation can be as simple as a mathematical function, like the addition above, but it may instead represent control flow or file I/O.

Most operations are stateless: their values exist while the graph is running and are then disposed of. Variables, however, are a special type of operation that maintains state. Under the covers, adding a variable to a graph adds three separate operations: the variable node, a constant that produces the initial value, and an initialiser operation that assigns that value.
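To illustrate the difference between stateless operations and a stateful variable, here is a hypothetical pure-Python sketch. The `ToyVariable` class, its `initialize` and `assign_add` methods, and the `run_step` function are invented for illustration and are not TensorFlow's API. The initial value plays the role of the constant, `initialize` plays the role of the initialiser operation, and the stored value survives from one run to the next, while the intermediate result inside each run is discarded.

```python
class ToyVariable:
    """Hypothetical stand-in for a stateful graph variable."""

    def __init__(self, initial_value):
        self.initial_value = initial_value  # plays the role of the constant op
        self.value = None                   # no value until initialised

    def initialize(self):
        """Plays the role of the initialiser op: assign the initial value."""
        self.value = self.initial_value

    def assign_add(self, delta):
        if self.value is None:
            raise RuntimeError("variable used before initialisation")
        self.value += delta
        return self.value


def run_step(counter, increment):
    """One 'run' of the toy graph."""
    scaled = increment * 2             # stateless: computed, used, discarded
    return counter.assign_add(scaled)  # stateful: the new total persists


counter = ToyVariable(0)
counter.initialize()
print(run_step(counter, 1))  # 2
print(run_step(counter, 1))  # 4
```

The second run returns 4 rather than 2 because only the variable carries its value across runs; `scaled` is recreated from scratch each time.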

Tensors

From a mathematical perspective, tensors are multi-dimensional structures for data elements. The number of dimensions a tensor has is called its rank: a scalar has a rank of zero, a vector a rank of one, a matrix a rank of two, and so on upwards. A tensor also has a shape, which is a tuple describing the tensor's size, i.e. the number of components in each dimension. All the data in a tensor must be of the same data type.
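Rank, shape and the single-data-type rule can be checked on ordinary nested Python lists. This is a minimal sketch, not TensorFlow code: the `shape_of`, `rank_of`, `flatten` and `dtype_of` helpers are hypothetical, and `shape_of` assumes the nested lists are rectangular (every row the same length) rather than checking it.

```python
def shape_of(data):
    """Return the shape of a rectangular nested list as a tuple."""
    shape = []
    while isinstance(data, list):
        shape.append(len(data))
        data = data[0] if data else None
    return tuple(shape)


def rank_of(data):
    """Rank is the number of dimensions, i.e. the length of the shape."""
    return len(shape_of(data))


def flatten(data):
    """Collect every element of a nested list into one flat list."""
    if isinstance(data, list):
        return [x for item in data for x in flatten(item)]
    return [data]


def dtype_of(data):
    """Return the single element type, refusing mixed types."""
    types = {type(x) for x in flatten(data)}
    if len(types) > 1:
        raise TypeError(f"mixed element types: {sorted(t.__name__ for t in types)}")
    return types.pop() if types else None


scalar = 5
vector = [1, 2, 3]
matrix = [[1, 2, 3], [4, 5, 6]]

for name, value in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    print(name, rank_of(value), shape_of(value), dtype_of(value).__name__)
# scalar 0 () int
# vector 1 (3,) int
# matrix 2 (2, 3) int
```

Note that the rank is simply the length of the shape tuple, and a list such as `[1, 2.0]` would be rejected by `dtype_of` because it mixes `int` and `float`.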

From TensorFlow's perspective, tensors are objects used in a computational graph. A tensor doesn't hold data itself; it's a symbolic handle to the data flowing from operation to operation. It can therefore also be thought of as an edge in the computational graph, since it carries the output of one operation, which forms the input of the next.
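The "handle, not data" idea can be sketched with two small dataclasses. This is a hypothetical illustration, not TensorFlow's classes: the toy `Tensor` records only which operation produces it, which output of that operation it is, and its data type, and the toy `Operation` receives such handles as its inputs. The `name` property imitates the `op_name:index` style TensorFlow uses when naming tensors; everything else here is an assumption made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tensor:
    """Toy symbolic handle: it names a value, it does not store one."""
    op_name: str      # operation that produces this value
    value_index: int  # which output of that operation
    dtype: str

    @property
    def name(self):
        return f"{self.op_name}:{self.value_index}"


@dataclass
class Operation:
    name: str
    inputs: list      # Tensors arriving along incoming edges
    num_outputs: int = 1
    dtype: str = "int64"

    def outputs(self):
        """The handles that the next operation will take as inputs."""
        return [Tensor(self.name, i, self.dtype) for i in range(self.num_outputs)]


a = Operation("a", inputs=[])
b = Operation("b", inputs=[])
c = Operation("c", inputs=a.outputs() + b.outputs())

print([t.name for t in c.inputs])  # ['a:0', 'b:0']
```

Each `Tensor` in `c.inputs` is exactly an edge of the graph: it points back to the operation that will produce the value, but no value exists until something evaluates that operation.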

Sessions

A session is a special environment for executing operations and evaluating tensors, and it is responsible for allocating and managing resources. The session provides a run method, which takes as input the nodes of the graph to be computed. TensorFlow works backwards from those nodes, calculating all the preceding nodes they require.
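The "work backwards and compute only what is required" behaviour can be sketched with a toy session. Everything here (`ToySession`, its `run` method, the dictionary-based graph) is a hypothetical illustration, not TensorFlow's `Session`: `run` takes a list of node names to fetch, computes each required node at most once per call, and never touches nodes that the fetches don't depend on.

```python
class ToySession:
    """Hypothetical session over a graph {name: (function, [input names])}."""

    def __init__(self, graph):
        self.graph = graph
        self.log = []  # names of nodes actually computed, in order

    def run(self, fetches):
        cache = {}  # values live only for the duration of this call

        def compute(name):
            if name not in cache:
                func, inputs = self.graph[name]
                args = [compute(parent) for parent in inputs]
                self.log.append(name)
                cache[name] = func(*args)
            return cache[name]

        return [compute(name) for name in fetches]


graph = {
    "a": (lambda: 3, []),
    "b": (lambda: 4, []),
    "c": (lambda x, y: x + y, ["a", "b"]),
    "d": (lambda x: x * 10, ["c"]),
    "unused": (lambda: 1 / 0, []),  # would fail if it were ever computed
}

sess = ToySession(graph)
print(sess.run(["c", "d"]))  # [7, 70]
print(sess.log)              # ['a', 'b', 'c', 'd']
```

Fetching d reuses the value of c computed for the first fetch, and the `unused` node, which would raise `ZeroDivisionError`, is never evaluated because no fetch depends on it.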

When the graph is run, its nodes are assigned to one or more physical execution units. These can be CPU or GPU devices, located on a single machine or distributed across multiple machines.

Summary

So that's a start: TensorFlow is a software library designed as a framework for Deep Learning, which uses a computational graph to process data in the form of tensors.
