This is the first of a series of posts around the Google Cloud Next 2017 conference and some of the services showcased there.

What is it?

Google Cloud Spanner is a new managed relational database service with two headline features:

- Horizontally scalable

- Transactional Consistency across globally distributed database nodes

Scaling is apparently effortless, with sharding just happening as you’d want it: no questions asked, no effort required. It supports ANSI SQL 2011 (with some minor changes required around data distribution and primary keys) and uses relational schemas, giving the tables and views that we are all familiar with. Data will be auto-replicated globally (this feature is coming later this year). It goes to GA on May 16th 2017. It will support databases of hundreds of terabytes in size, with 99.999% (“five nines”) availability, and offers single-digit millisecond latency.
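To put “five nines” into perspective, here is a quick back-of-the-envelope calculation of how much downtime per year a given availability figure allows. The function name is just for illustration, and it assumes a 365-day year; it is plain arithmetic, not anything from Google’s SLA.

```python
def downtime_minutes_per_year(availability_percent: float, days_per_year: float = 365.0) -> float:
    """Minutes of downtime per year permitted by an availability percentage."""
    minutes_per_year = days_per_year * 24 * 60  # 525,600 minutes in a 365-day year
    return minutes_per_year * (1 - availability_percent / 100)


for availability in (99.9, 99.99, 99.999):
    minutes = downtime_minutes_per_year(availability)
    print(f"{availability}% availability allows about {minutes:.2f} minutes of downtime per year")
```

At 99.999% that works out at a little over five minutes a year.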

So why would we need this? These features matter in applications that need precise event times, so that the order of transactions is never ambiguous, whilst being available across the globe at scale. Imagine, for example, two bank account holders withdrawing funds at the same time, one of which will take the account into the red. Who should get declined: holder A, holder B or both? Although not critical for many types of database applications, it is essential in Inventory Management, Supply Chain and Financial Services, for example, where sub-second precision really matters when there may be hundreds or thousands of transactions every second. The demonstration given at Google Cloud Next was of a ticket ordering app receiving around 500 thousand requests for event tickets across the globe (simulated by a bot network of pseudo event-going customers). You can see the YouTube video of this here. Apparently this combination of true transactional consistency and horizontal scale is simply not possible with other sharding approaches.
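The bank account question can be made concrete with a small sketch. It assumes every withdrawal carries a globally ordered commit timestamp, and it simply applies requests in that order, so exactly one unambiguous answer comes out. The `Withdrawal` class, the `commit_ts` field and `apply_withdrawals` are all hypothetical names for illustration; this is not Spanner’s API, just the idea that an agreed ordering removes the indecision.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Withdrawal:
    holder: str
    amount: int     # whole currency units, to avoid floating point money
    commit_ts: int  # hypothetical globally ordered commit timestamp


def apply_withdrawals(balance: int, requests: list[Withdrawal]) -> tuple[int, dict[str, str]]:
    """Apply withdrawals in commit-timestamp order, declining any that would overdraw."""
    outcomes: dict[str, str] = {}
    for request in sorted(requests, key=lambda r: r.commit_ts):
        if request.amount <= balance:
            balance -= request.amount
            outcomes[request.holder] = "approved"
        else:
            outcomes[request.holder] = "declined"
    return balance, outcomes


# The requests arrive out of order, but the timestamps settle who was first.
requests = [
    Withdrawal(holder="B", amount=80, commit_ts=1002),
    Withdrawal(holder="A", amount=70, commit_ts=1001),
]
final_balance, outcomes = apply_withdrawals(100, requests)
print(final_balance, outcomes)
```

With a starting balance of 100, holder A’s earlier withdrawal of 70 is approved and holder B’s 80 is declined, whichever order the requests happened to arrive in.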

Hybrid Transactional Analytical Processing (HTAP)

This combination of Horizontal Scale and Transactional Consistency allows for what Gartner have termed “Hybrid Transactional Analytical Processing” (HTAP) applications, where massive analytical capabilities are possible from within the application. So Operational Analytics at really big scale: no data movement, no additional platforms, just straight out of the Operational system. That is really quite a big deal. You can read more on the benefits of HTAP in this free Gartner document here so I won’t elaborate further. Suffice to say it is not a silver bullet for all analytical needs across a complex ecosystem of applications, but it is definitely a game changer when there is only one instance of an application to report from. And if your database can scale to handle it, maybe you will be putting multiple systems into the same repository.

NewSQL

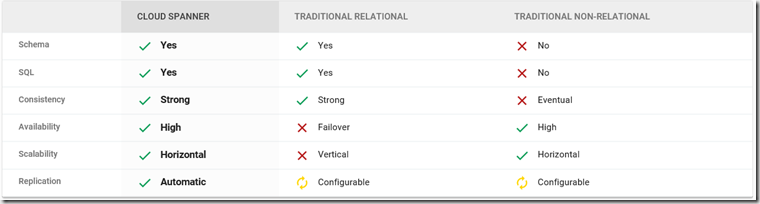

Eh? Is that a typo? Nope. That’s right, another term to get your head round, just when you thought all the NoSQL stuff was settling in. Okay, not really, just a name for what tries to be the best of both worlds; at least that’s the idea. So, of the two main considerations in the database world, scalability and consistency, NoSQL offers massive scaling at the expense of transactional consistency, SQL offers transactional consistency at the expense of scaling, and ‘NewSQL’ claims to offer both. Simples, right?

The CAP Theorem – Two Out Of Three Is The Best You Can Hope For

Proposed by Eric Brewer in 1998, the CAP Theorem is a big consideration when we talk about distributed database systems. You can read about this on Wikipedia here.

In essence it states that Consistency of transactions, Availability of the system and tolerance of network Partitioning (where messages may be lost over the network) are not all possible at once. You can have two of these but never all three. According to Eric Brewer (now working at Google but not on the Spanner team), Google Cloud Spanner doesn’t overcome this, but it comes pretty close. I won’t go into the innards of CAP here, but suffice to say you need to consider the compromise that you are making with the missing C, A, or P element and how that will impact your system. Google describes the system as CP with very good A. Five nines A on this sort of scale is pretty good, I’m sure you’ll agree.

So Two and Three Quarters Then? Okay, Tell Me More

So what’s in the secret sauce that apparently gets us close to all three of our CAP Theorem wishes? There are three main ingredients that make this happen.

- Google “TrueTime” time synchronisation, which is based on atomic clock signals and GPS data broadcast throughout the Google network backbone, to ensure transactional consistency. Each datum is assigned a globally consistent timestamp, and globally consistent reads are possible without locking. TrueTime defines a time that has definitely passed and a time that definitely hasn’t passed yet, so a range of time, albeit generally a very small one. If this range is large for some reason, Google Spanner waits until it has passed to ensure time consistency across the system. There is a lot more to it than that, which you can find out from Eric Brewer here, so I’ll leave that to the experts to explain.

- Consensus on writes across nodes is achieved using the Paxos algorithm (nothing to do with Greek 18-30 holidays or sage and onion stuffing), a robust approach to ensuring that writes are consistent over available nodes, using a Quorum approach similar to the ideas found in high availability failover. So the system determines what is an acceptable level of availability of nodes to guarantee writes are safe.

- A dedicated internal network that allows optimised communications between nodes distributed across Google’s various data centres around the globe.
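The first two ingredients can be sketched in a few lines. This is a toy under stated assumptions, not Google’s implementation: `SimulatedTrueTime` fakes the uncertainty range with a local clock and a fixed error bound, `commit_with_wait` picks the upper end of the range as the commit timestamp and then waits until that time has definitely passed, and `has_quorum` assumes a simple majority, which is one common reading of a Quorum approach. All of these names and the 5 ms error bound are made up for illustration.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeInterval:
    earliest: float  # a time that has definitely passed
    latest: float    # a time that definitely has not passed yet


class SimulatedTrueTime:
    """Toy stand-in for TrueTime: a local clock plus an assumed fixed error bound."""

    def __init__(self, uncertainty_s: float = 0.005):
        self.uncertainty_s = uncertainty_s

    def now(self) -> TimeInterval:
        t = time.monotonic()
        return TimeInterval(t - self.uncertainty_s, t + self.uncertainty_s)

    def after(self, timestamp: float) -> bool:
        """True once `timestamp` has definitely passed."""
        return self.now().earliest > timestamp


def commit_with_wait(tt: SimulatedTrueTime) -> float:
    """Choose a commit timestamp, then wait out the uncertainty before returning."""
    commit_ts = tt.now().latest
    while not tt.after(commit_ts):
        time.sleep(tt.uncertainty_s / 4)
    return commit_ts


def has_quorum(acks: int, replicas: int) -> bool:
    """Assumed majority rule: more than half of the replicas acknowledged the write."""
    return acks >= replicas // 2 + 1


tt = SimulatedTrueTime()
first = commit_with_wait(tt)
second = commit_with_wait(tt)
print(second > first)                      # later commits always get later timestamps
print(has_quorum(3, 5), has_quorum(2, 5))  # 3 of 5 is enough, 2 of 5 is not
```

Because each commit waits until its own timestamp is safely in the past, any commit that starts afterwards is guaranteed a strictly later timestamp. The smaller the uncertainty range, the shorter the wait.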

Summing Up

This technology has been used internally for 10+ years, originating from the need for a better option than the standard MySQL sharding that was Google’s previous approach to relational scale-out. It is the underlying technology for the Google F1 database that is used for AdWords and Google Play, both critical to Google’s success and both with demanding requirements for scale and consistency. You can read more on Google F1 and the internal Spanner database project here. For the Google Spanner product blurb, see here. You’ll see that this is not bleeding-edge, risky tech. The term ‘battle-tested’ was used far too often for my pacifist side but probably hits the nail on the head (preferably with a foam hammer of course). Ten years running some of the most demanding data processing workloads on the planet probably means you’re in safe hands, I guess. The potential for HTAP and a truly global relational database that scales effortlessly is going to cause some real changes. Of course Microsoft have not been resting on their laurels in this area either and have this week announced their competitor offering, Cosmos DB, although that is essentially a NoSQL approach with less of a consistency focus. More on that in an upcoming post…
