Rather than relying only on the local domain values in its internal knowledge base, Data Quality Services (DQS) can also clean data by using external reference data. This feature is called Reference Data Services, and in this blog post I’m going to show an example of cleaning SSIS data by connecting to the Azure Data Market.

Azure Data Market

After you’ve signed up for Azure Data Market, you’ll need to subscribe to datasets that are compatible with DQS. Unfortunately they generally cost money, but I have found one (namely DMTI Spatial) that allows you to sign up for a free trial. I do think this is a good idea and I’d like to see free trials offered on Data Market by other providers. I don’t happen to have a huge data quality problem with Canadian addresses, but it does allow me to show how Reference Data Services works with SSIS.

Domain Management

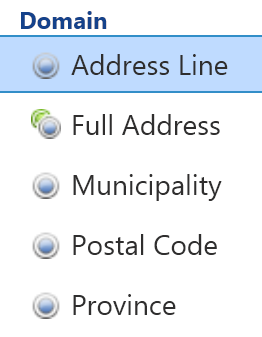

Once we’ve signed up for some data, the next step is to build the knowledge base in the Data Quality Client. In my case, I’m going to setup a simple set of address domains based on addresses:

For a detailed guide on how to set up domains, see the following article.

Reference Data Services

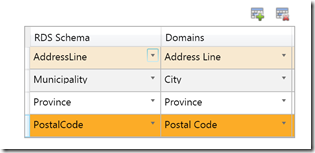

In order to create the link to the external data, settings need to be changed within Domain Management. Having selected the composite address domain, the first step is to click on the Reference Data tab, then click on the browse button, which will cause the following window to appear:

As there is only one dataset available, I’m going to pick it and then map each of the domains to the schema given by the reference dataset:

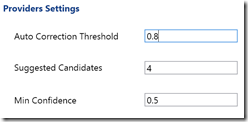

After clicking ok, there are some optional settings to adjust in order to influence auto correction, the number of candidates and the minimum confidence:

SSIS and DQS

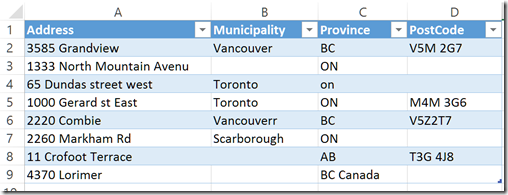

Now it’s time to look at how the combination of DQS and the external data can be used within SSIS. I’ve put together a simple CSV containing a few commercial Canadian addresses to clean, with a few spelling mistakes etc.:

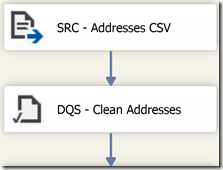

Within SSIS, the first step is to create a data flow that pulls data from the CSV. Then, within the data flow, we need to connect the CSV source to the DQS Cleansing Transform:

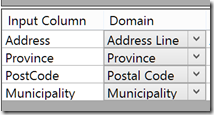

The key part within SSIS is to edit the DQS Cleansing transform, as we need to first select a knowledge base, then match the incoming columns to the DQS domains:

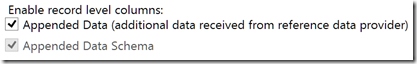

Interestingly, on the advanced tab of the transform there are checkboxes to return additional columns from the reference data provider, which is a provider-dependent feature and is not actually available within the DQ client:

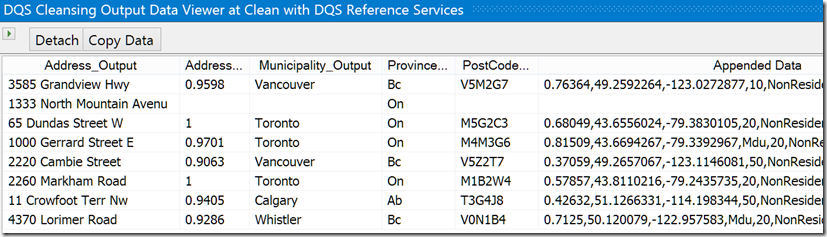

Now we’re ready to go. When running the SSIS package with a data viewer, the following results are output:

Most of our addresses have been looked up and corrected, e.g. “4370 Lorimer” to “4370 Lorimer Road” and “Gerard St” to “Gerrard St”. The confidence of the cleaning is included in the output, as well as the aforementioned ‘extra’ appended data. In this case, as we’re using the DMTI provider, the type of property and latitude/longitude are amongst the columns returned.
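The confidence value in the output ties back to the auto correction, candidate count and minimum confidence settings configured earlier. As a rough mental model only, the Python sketch below shows how such thresholds could interact. It is not how DQS or the DMTI provider is implemented; the `Candidate` type, the `classify` function, the status labels and the default threshold values are all hypothetical and chosen purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    value: str
    confidence: float  # 0.0 to 1.0, as reported by a hypothetical provider


def classify(original, candidates,
             auto_correct_threshold=0.9,  # hypothetical default
             min_confidence=0.6,          # hypothetical default
             max_candidates=3):           # hypothetical default
    """Return (status, output_value, kept_candidates) for one input value."""
    # Discard candidates below the minimum confidence, keep the best few.
    kept = sorted(
        (c for c in candidates if c.confidence >= min_confidence),
        key=lambda c: c.confidence,
        reverse=True,
    )[:max_candidates]

    if not kept:
        # Nothing trustworthy came back, so the input is left alone.
        return ("Unchanged", original, [])

    best = kept[0]
    if best.confidence >= auto_correct_threshold:
        # Confident enough to replace the input automatically.
        return ("Corrected", best.value, kept)

    # Otherwise only offer the candidates as suggestions.
    return ("Suggested", original, kept)


if __name__ == "__main__":
    print(classify("Gerard St", [Candidate("Gerrard St", 0.95)]))
```

In this model, raising the auto correction threshold means fewer values are changed automatically and more are left as suggestions for review, while raising the minimum confidence reduces the number of candidates that come back at all.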

As a final point, I noticed that the initial results from the external provider were changing my address values of “Road” and “Street” to “Rd” and “St”. As you can see from my Excel screenshot earlier, my input addresses were a mix of “Rd” and “Road”, but I wanted to standardise on “Road” and “Street”. This is exactly what Term Based Relations are for in DQS, but I didn’t expect them to work with external reference data, as the data returned by the external provider is in theory correct. Surprisingly, they do work with Reference Data Services, meaning it’s possible to gain a bit of extra control over the output.
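To illustrate the idea behind Term Based Relations outside of DQS, here is a minimal Python sketch that replaces whole terms in a value using a lookup table. DQS configures this through the Data Quality Client rather than code, so the `TERM_RELATIONS` mapping and the `apply_term_relations` function are hypothetical names used only to show the effect on the provider's output.

```python
import re

# Hypothetical term relations: "correct to" values keyed by the term to replace.
TERM_RELATIONS = {"Rd": "Road", "St": "Street"}


def apply_term_relations(value, relations=TERM_RELATIONS):
    """Replace whole-word terms (with an optional trailing dot) in value."""
    # \b ensures "St" does not match inside a longer word such as "Street".
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(term) for term in relations) + r")\b\.?"
    )
    return pattern.sub(lambda m: relations[m.group(1)], value)


if __name__ == "__main__":
    for address in ["4370 Lorimer Rd", "Gerrard St", "Gerrard Street"]:
        print(apply_term_relations(address))
```

Because the replacement is purely term based, a value such as “St Clair Ave” would also be expanded, which is worth bearing in mind when choosing which terms to relate.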
