Recently at a client, we needed to come up with a few different ways to perform file management operations within their Data Lake: for example, moving files once they had been processed and, in their case, renaming folders. The solutions had to differ from what we currently used in order to stay within their desired architecture, so we started by looking at calling the REST API from C# within an SSIS package. The other option I looked at was Python. I will explain both methods below, but first there is some setup we need to do.

Pre-Requisites

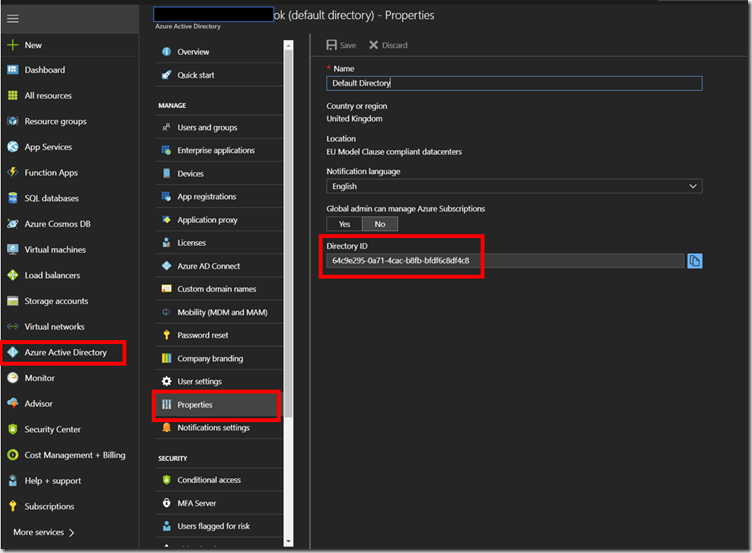

Aside from having an Azure Subscription and a Data Lake Store account, you will need an Azure Active Directory Application. For our case we needed a Web Application, as we will be doing Service-to-Service authentication. This is where the application provides its own credentials to perform the operations, whereas with End-User authentication a user must log into your application using Azure AD.

Service-to-service authentication setup

Data Lake Store uses Azure Active Directory (AAD) for authentication. This results in our application being provided with an OAuth 2.0 token, which gets attached to each request made to the Azure Data Lake Store. To read more about how it works, how to create the app, how to get the relevant credentials and how to give the app access to the Data Lake Store, follow through the Microsoft tutorial here.

Make a note of the below as we will be needing them when we develop the solutions:

- Tenant ID (also known as Directory ID)

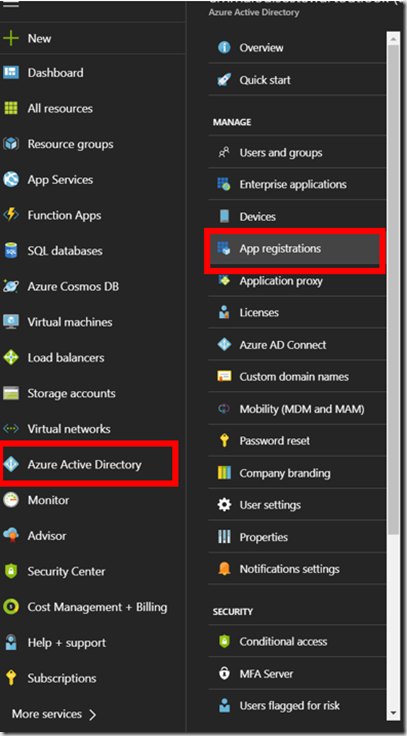

- Client Id (also known as the ApplicationID)

Within App registrations, if you look for your App under ‘All Apps’ and click on it you will be able to retrieve the Application Id.

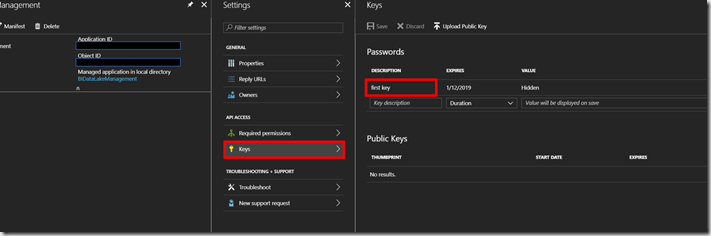

- Client Secret (also known as Authentication Key)

Within the App area used above, click on ‘Settings’ and then ‘Keys’. If you haven’t previously created a key, you can create one there. You must remember to save it when it appears, as you will not get another chance!

- Data Lake Name

Using REST API

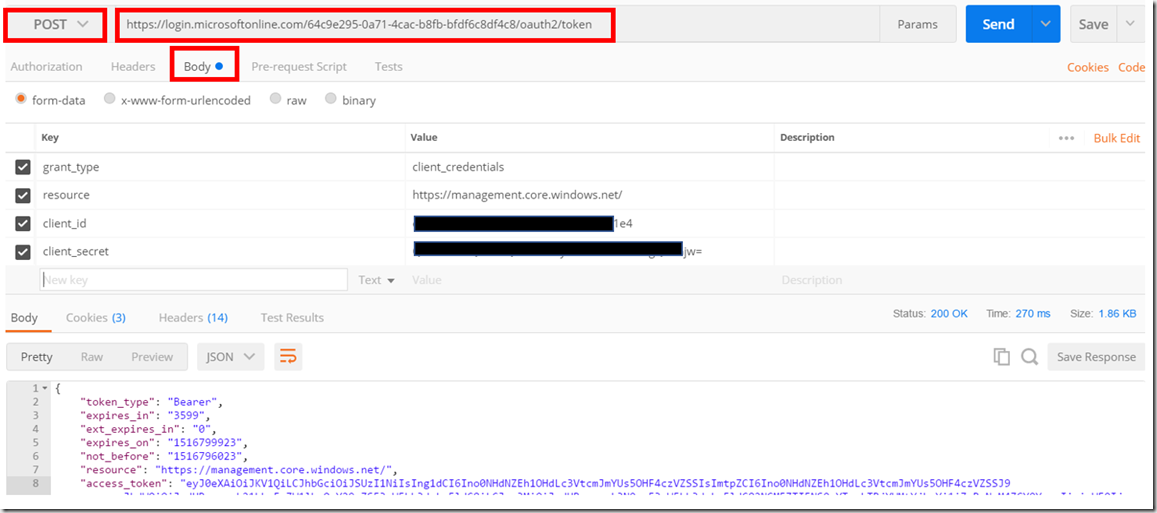

Now that we have everything set up and all the credentials we need, we can make a start on constructing the requests. In order to test them out I used Postman, which can be downloaded here.

Authentication

Firstly, before we can begin any folder management operations, we need to authenticate against the Data Lake. To do this we need to perform a POST request in order to obtain an access token. This token will be used and passed to the other REST API calls we will make (e.g. deleting files) as we will always need to authenticate against the Data Lake.

To retrieve the access token, we need to pass through the TENANT ID, CLIENT ID and CLIENT SECRET and the request looks as follows:

curl -X POST https://login.microsoftonline.com/<TENANT-ID>/oauth2/token \
  -F grant_type=client_credentials \
  -F resource=https://management.core.windows.net/ \
  -F client_id=<CLIENT-ID> \
  -F client_secret=<CLIENT-SECRET>

Within Postman, it looks like this:

1. Make sure the request type is set to POST

2. Make sure you have added your tenant id to the request

3. Fill out the body with your Client ID and Client Secret. (grant_type and resource are set as constant values as shown above).

4. Make a note of the Bearer access token as we will need it to perform any File Management operation on the Data Lake.
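For readers who would rather script this step than click through Postman, the same request can be sketched in Python using only the standard library. This is a minimal sketch, not the approach used at the client: the function name `build_token_request` is made up for illustration, and it assumes the token endpoint accepts a URL-encoded form body in place of the multipart form that `curl -F` sends.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Constant values taken from the curl request above.
GRANT_TYPE = "client_credentials"
RESOURCE = "https://management.core.windows.net/"


def build_token_request(tenant_id, client_id, client_secret):
    """Build (but do not send) the POST request that asks for an access token."""
    url = "https://login.microsoftonline.com/{}/oauth2/token".format(tenant_id)
    # Assumption: the body is sent URL-encoded rather than as multipart form data.
    body = urlencode({
        "grant_type": GRANT_TYPE,
        "resource": RESOURCE,
        "client_id": client_id,
        "client_secret": client_secret,
    }).encode("ascii")
    return Request(url, data=body, method="POST")


# Placeholder credentials; substitute the values noted in the pre-requisites.
request = build_token_request("my-tenant-id", "my-client-id", "my-client-secret")
print(request.get_method(), request.full_url)
```

Passing the returned object to `urllib.request.urlopen` would send it. The `access_token` field of the JSON response is the value noted in step 4.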

Deleting a File

Now we have our access token, we can perform a deletion of a file with the following:

curl -i -X DELETE -H "Authorization: Bearer <ACCESS-TOKEN>" 'https://<DATA-LAKE-NAME>.azuredatalakestore.net/webhdfs/v1/mytempdir/myinputfile1.txt?op=DELETE'

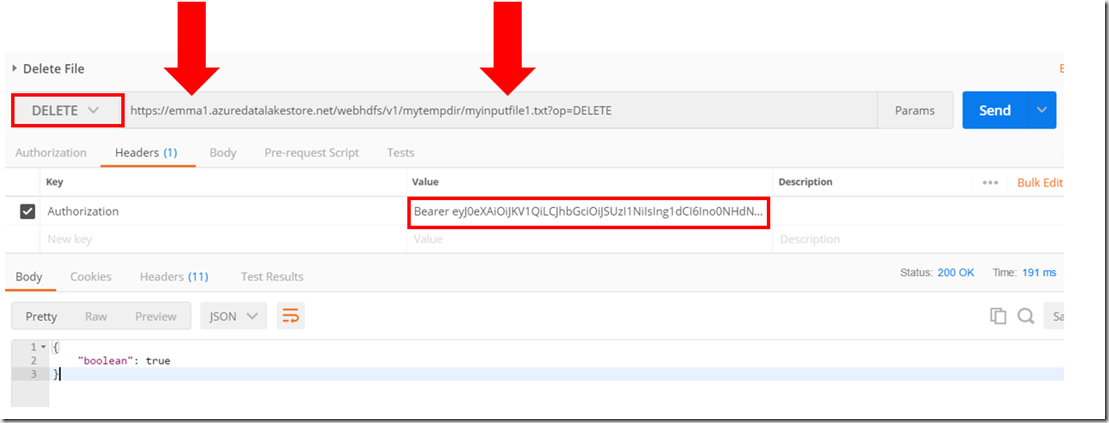

Within Postman, it looks like the following:

1. This is a DELETE request, so I have changed the dropdown to reflect that.

2. Remember to add your data lake store name into the request; in my example it is called emma1

3. You can point to a particular file, or you can point to a folder and add &recursive=true to the request, which deletes all the files within the folder as well as the folder itself. I haven’t managed to find a way to delete just the contents of the folder while leaving the folder in place.

4. The access token is sent as a header called ‘Authorization’. Make sure to include ‘Bearer ‘ before your access token as highlighted above.

Once you have sent the request, you will receive some JSON in the output to show if the action has been successful (true).
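The delete request can also be assembled in code. The sketch below only builds the request so that the URL and header can be inspected before anything is sent. `build_delete_request` is a hypothetical helper name, and `emma1` is the example store name used above.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request


def build_delete_request(store_name, path, access_token, recursive=False):
    """Build (but do not send) a WebHDFS DELETE request for a file or folder."""
    query = {"op": "DELETE"}
    if recursive:
        # Deletes the folder's contents and the folder itself.
        query["recursive"] = "true"
    url = "https://{}.azuredatalakestore.net/webhdfs/v1/{}?{}".format(
        store_name, quote(path.lstrip("/")), urlencode(query)
    )
    # The token must be prefixed with 'Bearer ' in the Authorization header.
    headers = {"Authorization": "Bearer " + access_token}
    return Request(url, headers=headers, method="DELETE")


request = build_delete_request("emma1", "/mytempdir/myinputfile1.txt", "my-access-token")
print(request.get_method(), request.full_url)
```

Keeping the construction separate from the sending makes it easy to check that the store name, path and `recursive` flag end up where they should.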

You can perform many more File Management operations using the REST API and the code can be found here:

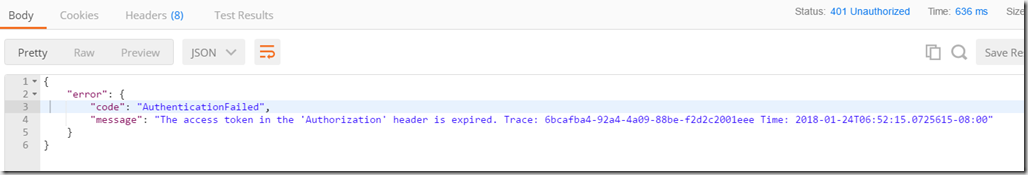

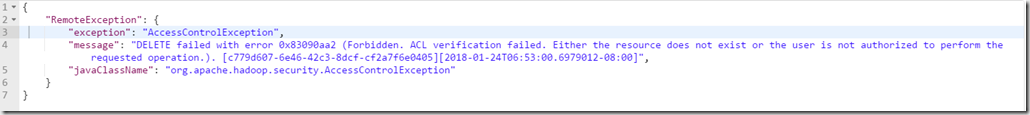
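Renaming or moving, the other operation mentioned at the start of this post, follows the same pattern. As a hedged sketch, the WebHDFS-style rename is a PUT request with `op=RENAME` and a `destination` parameter. The helper name `build_rename_request` and the example paths are illustrative only.

```python
from urllib.parse import quote, urlencode
from urllib.request import Request


def build_rename_request(store_name, source, destination, access_token):
    """Build (but do not send) a WebHDFS RENAME request (also used for moves)."""
    # urlencode percent-encodes the slashes in the destination path.
    query = urlencode({"op": "RENAME", "destination": destination})
    url = "https://{}.azuredatalakestore.net/webhdfs/v1/{}?{}".format(
        store_name, quote(source.lstrip("/")), query
    )
    headers = {"Authorization": "Bearer " + access_token}
    return Request(url, headers=headers, method="PUT")


request = build_rename_request(
    "emma1", "/mytempdir/myinputfile1.txt", "/processed/myinputfile1.txt", "my-access-token"
)
print(request.get_method(), request.full_url)
```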

Common Errors

1. The following error is caused by running the delete file request with an Access Token that has expired. To fix this issue, re-generate the Access Token and pass that through instead.

2. The error below is caused by the Application that we are using to access the Data Lake Store not having sufficient permissions to perform the operations. Make sure it has access to the relevant folder(s). Check Step 3 here to find out how to set the access.

Summary

Now that we have managed to get the requests working manually within Postman, we need to consider how to call them in a production environment. The solution we implemented was an SSIS package (in keeping with their current architecture) with script tasks calling C#, which in turn calls the API. Before each File System Operation is called, we run the authentication API call to obtain the latest Access Token and place the value in a variable, so that the token used later in the package is always current.
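The production package itself was built in SSIS and C#, but the idea of never using a stale token can be illustrated in Python. The sketch below is a variation rather than what we implemented: instead of fetching a token before every operation, it fetches a new one only when the current one is close to expiring. `TokenProvider` and `fetch_token` are hypothetical names, and it assumes the token response carries an `expires_on` field holding a Unix timestamp.

```python
import time


class TokenProvider:
    """Hand out a bearer token, fetching a new one once the old one is about to expire."""

    def __init__(self, fetch_token, clock=time.time, margin_seconds=60):
        # fetch_token: hypothetical callable returning the token response as a dict.
        self._fetch_token = fetch_token
        self._clock = clock
        self._margin = margin_seconds
        self._token = None
        self._expires_on = 0

    def get(self):
        """Return a token that is valid for at least `margin_seconds` more."""
        if self._token is None or self._clock() >= self._expires_on - self._margin:
            response = self._fetch_token()
            self._token = response["access_token"]
            # Assumption: "expires_on" is the expiry time in Unix epoch seconds.
            self._expires_on = int(response["expires_on"])
        return self._token
```

Each file operation would then call `get()` just before building its request, which avoids the expired-token error described above without requesting a new token every time.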

Using Python

Having had a play around with Python to do similar File Management operations, it seems rather limited in comparison and you can’t do as much. Nevertheless, I am sure more functionality will be added and it is useful to know how it works.

Firstly, if you don’t already have Python, you can download the latest version from here. As an IDE, I have been using Visual Studio 2017 which now comes with Python Support, see here for further information.

In order for us to be able to perform operations on the Data Lake, we need to install three Azure modules. To install the modules, open up the command prompt and run the following:

pip install azure-mgmt-resource

pip install azure-mgmt-datalake-store

pip install azure-datalake-store

Now we need to create the Python app (I used Visual Studio) to do the folder management tasks. To use the modules we have just installed, we need to import them into our app, and this has to be done in every app that performs Data Lake folder manipulations. The code below shows how to do this. Save the application, but don’t run it yet!

## Use this for Azure AD authentication

from msrestazure.azure_active_directory import AADTokenCredentials

## Required for Azure Data Lake Store account management

from azure.mgmt.datalake.store import DataLakeStoreAccountManagementClient

from azure.mgmt.datalake.store.models import DataLakeStoreAccount

## Required for Azure Data Lake Store filesystem management

from azure.datalake.store import core, lib, multithread

## Common Azure imports

import adal

from azure.mgmt.resource.resources import ResourceManagementClient

from azure.mgmt.resource.resources.models import ResourceGroup

## Use these as needed for your application

import logging, getpass, pprint, uuid, time

Firstly, we need to authenticate with Azure AD. As described above, there are two ways: End-User and Service-to-Service. We will be using Service-to-Service again in this example. To set this up, we run the following:

adlCreds = lib.auth(tenant_id = 'FILL-IN-HERE', client_secret = 'FILL-IN-HERE', client_id = 'FILL-IN-HERE', resource = 'https://datalake.azure.net/')

Fill in the TENANT ID, CLIENT SECRET and CLIENT ID that we captured earlier on.

Now that we can authenticate against the Data Lake, we can attempt to delete a file. We need to import some more modules, so add the script below to your application:

## Use this only for Azure AD service-to-service authentication

from azure.common.credentials import ServicePrincipalCredentials

## Required for Azure Data Lake Store filesystem management

from azure.datalake.store import core, lib, multithread

We now need to create a filesystem client:

## Declare variables

subscriptionId = 'FILL-IN-HERE'

adlsAccountName = 'FILL-IN-HERE'

## Create a filesystem client object

adlsFileSystemClient = core.AzureDLFileSystem(adlCreds, store_name=adlsAccountName)

We are now ready to perform some file management operations, such as deleting a directory and everything in it:

## Delete a directory

adlsFileSystemClient.rm('/mysampledirectory', recursive=True)
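In the REST section I mentioned not finding a way to delete only the contents of a folder. With the filesystem client, one possible workaround is to list the folder and remove each child in turn. The sketch below is untested against a real store: `clear_directory` is a hypothetical helper, and it assumes the client's `ls` method returns the paths of the folder's children and that `rm` accepts those paths as they are.

```python
def clear_directory(fs_client, directory):
    """Delete everything inside `directory` but leave the directory itself in place.

    `fs_client` is expected to behave like the filesystem client created above,
    that is, to offer ls(path) and rm(path, recursive=...).
    """
    removed = []
    for child in fs_client.ls(directory):
        # recursive=True so that sub-folders are removed along with their contents.
        fs_client.rm(child, recursive=True)
        removed.append(child)
    return removed
```

It would be called as `clear_directory(adlsFileSystemClient, '/mysampledirectory')`, and the returned list shows which paths were removed.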

Please see the script below for the full piece of code. You can find information on the other operations you can complete (e.g. creating directories) here.

## Use this for Azure AD authentication

from msrestazure.azure_active_directory import AADTokenCredentials

## Required for Azure Data Lake Store filesystem management

from azure.datalake.store import core, lib, multithread

## Common Azure imports

import adal

from azure.mgmt.resource.resources import ResourceManagementClient

from azure.mgmt.resource.resources.models import ResourceGroup

## Use these as needed for your application

import logging, getpass, pprint, uuid, time

## Service to service authentication with client secret for file system operations

adlCreds = lib.auth(tenant_id = 'XXX', client_secret = 'XXX', client_id = 'XXX', resource = 'https://datalake.azure.net/')

## Create filesystem client

## Declare variables

subscriptionId = 'XXX'

adlsAccountName = 'emma1'

## Create a filesystem client object

adlsFileSystemClient = core.AzureDLFileSystem(adlCreds, store_name=adlsAccountName)

## Create a directory

#adlsFileSystemClient.mkdir('/mysampledirectory')

## Delete a directory

adlsFileSystemClient.rm('/mysampledirectory', recursive=True)

Summary

In summary, there are a few different ways in which you can handle your file management operations within Data Lake and the principles behind the methods are very similar. So, if one way doesn’t fit into your architecture, there is always an alternative.
