I recently had the pleasure of attending SQLBits. There were a number of great talks this year, and I think overall it was a fantastic event. As part of our commitment to learning at Adatis, we were also challenged to present back to the team something of interest we learnt at the event. This then becomes our internal Adatibits event, where we can get a sample of sessions from across the week.

As such, I was pleased when I saw a talk by Ben Keen on the Friday bill of SQLBits that revolved around deep learning. Having just come through the Microsoft Data Science program, this fell in line with my interest and research in data science, and it focused on the bleeding edge of the subject area. It was also the talk I was probably looking forward to the most from the synopsis, and I thought it was very well delivered.

Anyway, on to the blog. In the following paragraphs, I’ll cover a cut-down version of the talk and also describe my experience using the MNIST dataset on Azure Batch AI. Credit to Ben for a few of the images I’ve used, as they came off his slide deck.

What is Deep Learning?

So you’ve heard of machine learning (ML) and what it can do. Deep learning is essentially a subset of ML that uses neural network architectures (loosely inspired by the human brain). It can work with both supervised (labelled) and unsupervised data, although most of its value today comes from learning from labelled data. You can almost think of it as an evolution of ML. Its performance tends to improve the more data you can throw at it, whereas traditional ML algorithms tend to plateau. Deep learning can also perform automatic feature extraction from raw data (feature learning), whereas traditional approaches rely on features provided as part of the dataset.

How does it help us? What are its use cases?

Deep learning excels at finding patterns in unstructured data. The following examples would be very difficult to write a program to do by hand, which is where the field of DL comes in. The use cases are usually split into four main areas: image, text, sound, and video.

- Image – medical imaging to diagnose heart disease / cancers

- Image – classification / segmentation of satellite images (NASA)

- Image – restoring colour to black and white photos

- Image – Pixel Super Resolution (generating higher res images from lower ones)

- Text – real time text translation

- Text – identifying font (Adobe DeepFont)

- Sound – real time foreign language translation

- Sound – restoring sound to videos

- Video – motion detection in self-driving cars (Tesla)

- Video – beating video games (DeepMind beating Atari Breakout)

- Video – redacting video footage in Police body-cameras (NIJ)

Deep Neural Network Example

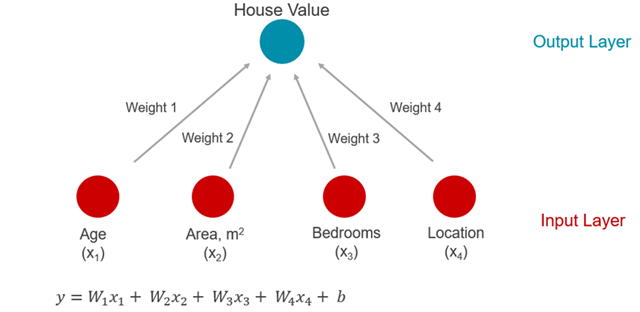

A simple example of how we can use deep learning is to understand the complexity around house prices. Taking an input layer of neurons for things such as Age, Sq. Footage, Bedrooms, and Location of a house, one could apply the traditional linear formula y = mx + c, giving each input a weighting, to calculate the house price. This is a very simplistic view, as many more factors can change the value, and these often involve the different inputs interacting with one another.
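To make the linear view concrete, here is a minimal Python sketch of a weighted sum over the four inputs. The weights, the base price, the feature names, and the example house are all made-up values for illustration; none of them come from the talk.

```python
# Hypothetical weights: price contribution per unit of each input feature.
WEIGHTS = {
    "age_years": -500.0,
    "sq_footage": 150.0,
    "bedrooms": 10_000.0,
    "location_score": 20_000.0,
}
BASE_PRICE = 50_000.0  # the "c" in y = mx + c (assumed value)


def linear_price(house: dict) -> float:
    """Weighted sum of the inputs plus an intercept: y = mx + c, one m per feature."""
    return BASE_PRICE + sum(WEIGHTS[name] * house[name] for name in WEIGHTS)


example_house = {"age_years": 20, "sq_footage": 1_000, "bedrooms": 3, "location_score": 4}
print(linear_price(example_house))
```

Each input contributes independently of the others here, so a model of this shape cannot capture interactions between features.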

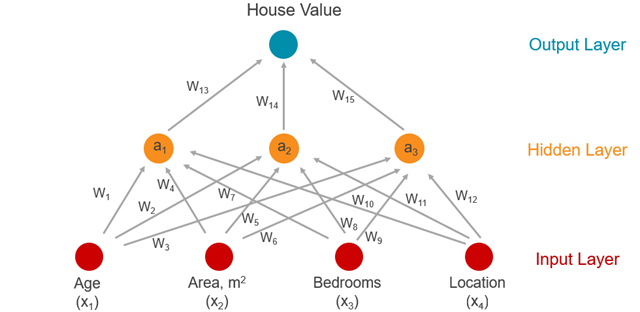

For example, people would think a house with a large number of bedrooms is a good thing and that this would raise the price of the house. But if all these bedrooms were really small, this wouldn’t be a very attractive offering for anyone other than a developer (and even then they might baulk at the effort involved), therefore lowering the price. This is especially true with people wanting more open plan houses nowadays. So while people might traditionally have been interested in bedrooms, the main driver may have shifted in recent times to Sq. Footage.

Therefore a number of weights can be attributed to an intermediary layer called the hidden layer.

The neural network architecture then uses this hidden layer to perform a better prediction. It does this by starting off with completely arbitrary weights (which will make a poor prediction). The process then multiplies the inputs by these weights and applies an activation function to get to the hidden layer (a1, a2, a3). These hidden neurons are then multiplied by another set of weights and passed through another activation function to generate the prediction. This value is then scored via some form of loss function (such as Root Mean Squared Error). The weights are adjusted accordingly and the process is repeated. The weights are adjusted through a process called gradient descent.
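The loop described above can be sketched in plain Python. This is a toy illustration under several assumptions of my own rather than anything shown in the talk: the data is invented and pre-scaled to the 0-1 range, the hidden layer uses tanh, the output neuron uses no activation (a common choice for predicting a number), there are no bias terms, and the weights are updated after every example (stochastic gradient descent) using the squared error, with RMSE reported as the score.

```python
import math
import random

random.seed(0)

# Toy data: 4 scaled inputs (age, sq. footage, bedrooms, location) -> scaled price.
# These numbers are invented purely for illustration.
DATA = [
    ([0.2, 0.9, 0.8, 0.7], 0.85),
    ([0.8, 0.3, 0.6, 0.2], 0.25),
    ([0.5, 0.6, 0.4, 0.9], 0.70),
    ([0.1, 0.2, 0.2, 0.4], 0.30),
]
N_IN, N_HIDDEN = 4, 3

# Start with arbitrary (random) weights.
w_hidden = [[random.uniform(-0.5, 0.5) for _ in range(N_IN)] for _ in range(N_HIDDEN)]
w_out = [random.uniform(-0.5, 0.5) for _ in range(N_HIDDEN)]


def forward(x):
    """Inputs -> hidden layer (a1, a2, a3) via tanh -> single prediction."""
    hidden = [math.tanh(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    prediction = sum(w * a for w, a in zip(w_out, hidden))
    return hidden, prediction


def rmse():
    """Root Mean Squared Error of the current weights over the toy data."""
    return math.sqrt(sum((forward(x)[1] - y) ** 2 for x, y in DATA) / len(DATA))


def train_epoch(learning_rate=0.1):
    """One pass over the data, nudging each weight against its error gradient."""
    for x, y in DATA:
        hidden, prediction = forward(x)
        error = prediction - y  # derivative of 0.5 * error**2 w.r.t. the prediction
        for j in range(N_HIDDEN):
            # Chain rule back through the output weight and the tanh activation.
            grad_hidden = error * w_out[j] * (1 - hidden[j] ** 2)
            w_out[j] -= learning_rate * error * hidden[j]
            for i in range(N_IN):
                w_hidden[j][i] -= learning_rate * grad_hidden * x[i]


initial_rmse = rmse()
for _ in range(500):
    train_epoch()
final_rmse = rmse()
print(f"RMSE before: {initial_rmse:.3f}, after: {final_rmse:.3f}")
```

Frameworks such as CNTK or TensorFlow compute these gradients automatically; writing them out by hand here is only meant to show what "adjusting the weights" involves.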

To give you an idea of scale, and weight optimisation that’s required – a 50×50 RGB image has 7,500 input neurons. Therefore we’re going to need to scale out as the training is very compute intensive. This is where Azure Batch AI comes in!
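The arithmetic behind that figure is easy to check. The short sketch below counts one input neuron per pixel per colour channel, and then shows how quickly the number of weights grows; the 100-neuron hidden layer is a hypothetical size chosen only to illustrate the point.

```python
def input_neurons(width: int, height: int, channels: int = 3) -> int:
    """One input neuron per pixel per colour channel."""
    return width * height * channels


def dense_weights(n_inputs: int, n_hidden: int) -> int:
    """Weights needed to fully connect the inputs to one hidden layer (biases excluded)."""
    return n_inputs * n_hidden


rgb_inputs = input_neurons(50, 50)     # 50 x 50 pixels x 3 channels
print(rgb_inputs)                      # 7500
print(dense_weights(rgb_inputs, 100))  # 750000 for a hypothetical 100-neuron hidden layer
```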

Azure Batch AI

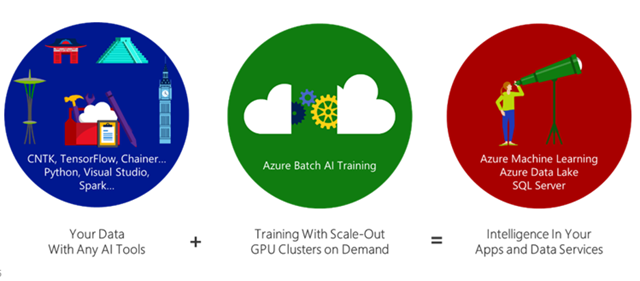

Azure Batch AI is a managed service for training deep learning models in parallel at scale.

It’s currently in public preview, which I believe it entered around September 2017. It’s built on top of Azure Batch and essentially sells the standard Azure story where it provides the infrastructure for data scientists so they don’t need to worry about it and can get on with more practical work.

You can take your ML tools and workbooks (CNTK, TensorFlow, Python, etc.) and provision a GPU cluster on demand to run them against. It’s important to note that the cluster is provisioned with GPUs rather than CPUs: for this type of activity they offer similar cores for less money and less power consumed.

Once trained, the service can provide access to the trained model via apps and data services.

As part of my interest in the subject, I then went and looked at using the service to train a model off the MNIST dataset. This is a collection of handwritten digits from 0 to 9, with 60,000 training examples and a further 10,000 test examples. It’s a great dataset for trying out learning techniques and pattern recognition methods while spending minimal effort on pre-processing and formatting. That is not usually the case with images, as most contain a lot of noise and take time to convert into a format ready for training.

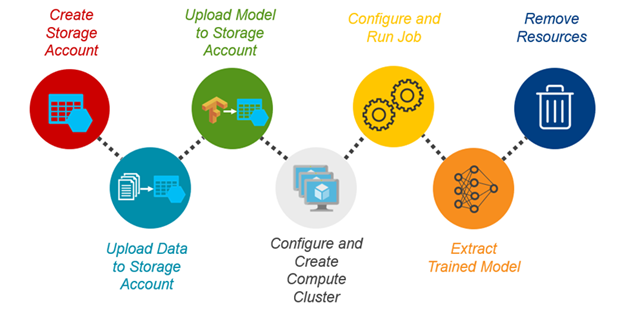

I then worked through the following process within Azure Batch AI.

Created a Storage Account using Azure CLI along with a file share and directory.

    # Login
    az login -u <username> -p <password>

    # Register resource providers
    az provider register -n Microsoft.BatchAI
    az provider register -n Microsoft.Batch

    # Create Resource Group
    az group create --name AzureBatchAIDemo --location uksouth

    # Create storage account to host data/scripts
    az storage account create --name azurebatchaidemostorage --sku Standard_LRS --resource-group AzureBatchAIDemo

    # Create File Share
    az storage share create --account-name azurebatchaidemostorage --name batchaiquickstart

    # Create Directory
    az storage directory create --share-name batchaiquickstart --name mnistcntksample --account-name azurebatchaidemostorage

Uploaded the training / test datasets, and Python script.

    # Upload train, test and script files
    az storage file upload --share-name batchaiquickstart --source Train-28x28_cntk_text.txt --path mnistcntksample --account-name azurebatchaidemostorage
    az storage file upload --share-name batchaiquickstart --source Test-28x28_cntk_text.txt --path mnistcntksample --account-name azurebatchaidemostorage
    az storage file upload --share-name batchaiquickstart --source ConvNet_MNIST.py --path mnistcntksample --account-name azurebatchaidemostorage

Provisioned a GPU cluster. The NC6 has 1 GPU, 6 vCPUs, and 56GB memory, and costs roughly 80p/hour. This scales all the way up to an ND24, which has 4 GPUs and 448GB memory, for roughly £7.40/hour.

    # Create GPU cluster (the NC6 uses an NVIDIA K80 GPU)
    az batchai cluster create --name azurebatchaidemocluster \
        --vm-size STANDARD_NC6 --image UbuntuLTS --min 1 --max 1 \
        --storage-account-name azurebatchaidemostorage \
        --afs-name batchaiquickstart --afs-mount-path azurefileshare \
        --user-name <username> --password <password> \
        --resource-group AzureBatchAIDemo --location westeurope

    # Cluster status overview
    az batchai cluster list -o table

Created a training job from a JSON template. This tells the cluster where to find the scripts and the data, how many nodes to use, what container to use, and where to store the trained model. The job can then be run!

    # Create a training job from a JSON template
    az batchai job create --name batchaidemo --cluster-name azurebatchaidemocluster --config batchaidemo.json --resource-group AzureBatchAIDemo --location westeurope

    # Job status
    az batchai job list -o table

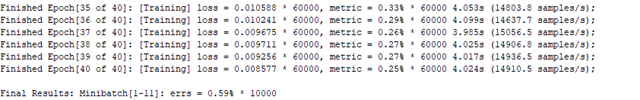

The output can be seen in real time along with the epochs and metrics. An epoch is one full pass over the training data. Running multiple epochs gives the model repeated opportunities to adjust its weights, and checking the metrics against the held-out test data shows how well the model generalises to real-world data.
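To illustrate what an epoch means in practice, the sketch below trains a deliberately tiny model (a single weight fitted to invented data following y = 2x) for five epochs and prints the error on held-out examples after each one. It is not the ConvNet_MNIST.py script; the data, learning rate, and split sizes are assumptions chosen to keep the example small.

```python
import random

random.seed(1)

# Invented data following y = 2x; hold back five points for evaluation.
points = [(i / 10, 2 * i / 10) for i in range(1, 21)]
random.shuffle(points)
train, held_out = points[:15], points[15:]


def mean_squared_error(weight, data):
    """Average squared difference between weight * x and the true y."""
    return sum((weight * x - y) ** 2 for x, y in data) / len(data)


weight = 0.0
history = []
for epoch in range(1, 6):
    random.shuffle(train)  # visit the examples in a new order each epoch
    for x, y in train:     # one epoch = every training example seen once
        weight -= 0.05 * 2 * (weight * x - y) * x  # gradient step on squared error
    history.append(mean_squared_error(weight, held_out))
    print(f"epoch {epoch}: held-out MSE = {history[-1]:.5f}")
```

The held-out error falls with each epoch here because the toy problem is so simple. On real data it is worth watching for the point where it stops improving, which suggests further epochs are no longer helping the model generalise.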

    # Output metadata
    az batchai job list-files --name batchaidemo --output-directory-id stdouterr --resource-group AzureBatchAIDemo

    # Observe realtime output
    az batchai job stream-file --job-name batchaidemo --output-directory-id stdouterr --name stderr.txt --resource-group AzureBatchAIDemo

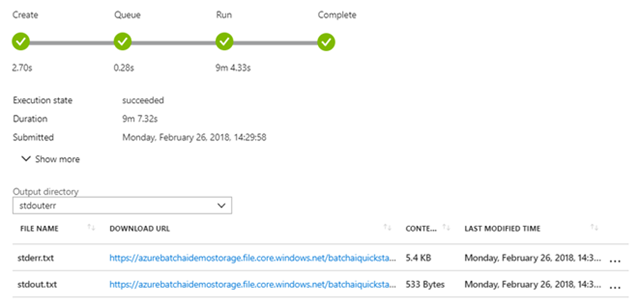

The pipeline can also be seen in the Azure portal along with links to the output metadata.

Once the model has been trained, it can be extracted, and the resources can be cleared down.

    # Clean Up
    az batchai job delete --name batchaidemo
    az batchai cluster delete --name azurebatchaidemocluster
    az group delete --name AzureBatchAIDemo

Conclusion

By moving the compute into Azure and having the ability to scale, we can train our models faster. This in turn leaves more time for hyperparameter tuning to generate better weightings, which will mean better models and better predictions.

As Steph Locke also alluded to in her data science talk – this means data scientists can do more work on things that they are good at, rather than waiting around for models to train to re-evaluate. Deep learning is certainly an interesting space to be in currently!
