I recently ran into a problem which required me to work with an SFTP site as part of our ETL process in Azure. With the traditional BI stack, FTP tasks were natively supported, but this is no longer the case when working with Azure, where it now requires a bit of plumbing. This blog will take a look at the ways in which you can interact with FTP/SFTP sites as part of your pipelines in ADF, and the issues I ran into. Specifically, the task I was trying to solve was to archive files (move them to a separate folder) that had already been loaded into our staging layer, so they would not be picked up on the next process run. The blog will also cover passing parameters into a webhook, as I needed the solution to be flexible enough to handle different SFTP sources.

Like many similar tasks in Azure, you can go about this in a number of ways (Logic Apps, Functions, PS Runbooks, etc.), but it's about finding the solution that fits your architecture and that you are comfortable developing and maintaining. I initially started looking at Logic Apps, since quite a bit of the work is done for you: the connectors are already set up, and the framework for some simple tasks is in place, which sounded perfect for the job. Unfortunately, Logic Apps is not designed for any type of heavy lifting. You can move files using the platform, but you are capped at a mere 50MB when trying to pick up and put down a file. Rumours were that Microsoft would extend this to 1GB at some point during 2018, but this has not been the case so far. There was also no task to simply change the remote path location as far as I could tell, although it looks like people are up-voting a request for it.

Next I looked at using Azure Functions, since I would do something similar through SSIS if this was a traditional stack problem. This involves writing a bit of C# to talk to the SFTP site and do the tasks for you. While this is very possible, it didn’t fit into our existing architecture. I also felt it would be easier to build and maintain something using PowerShell, a language which I’m enjoying coding in more and more.

Lastly I looked at using Azure Automation and doing the tasks through PowerShell. There are a number of modules you can use to achieve the goal; I used Posh-SSH. I also looked at WinSCP but found the Posh-SSH cmdlets more flexible.

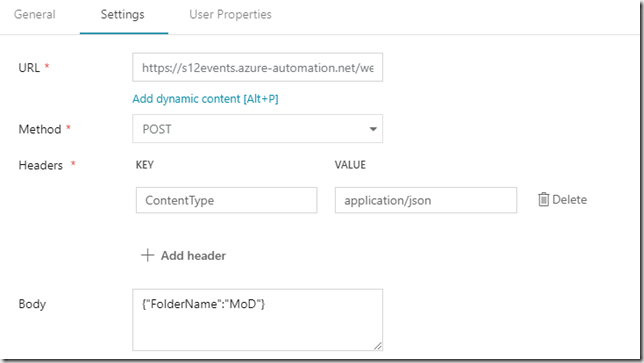

The first thing I did was work out how to pass parameters from a webhook into the script. This was so that the script could be used across multiple SFTP folders. In ADF it was as simple as defining the headers/body to pass with the POST call to the webhook.
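For testing outside ADF, the same POST can be sketched from PowerShell. This is only a rough illustration: the function names and the `SupplierA` folder value are hypothetical, and the webhook URL would be the one Azure Automation generates for you. `FolderName` is the property the runbook reads from the body.

```powershell
# Hypothetical helper: builds the JSON body the runbook expects.
# 'FolderName' is the property the runbook reads; the value passed in is illustrative.
function New-ArchiveRequestBody {
    param([Parameter(Mandatory)][string]$FolderName)
    return (@{ FolderName = $FolderName } | ConvertTo-Json -Compress)
}

# Hypothetical helper: posts the body to the webhook, much as the ADF Web activity would.
# $WebhookUrl is the URL generated when the webhook is created (not shown here).
function Invoke-ArchiveWebhook {
    param(
        [Parameter(Mandatory)][string]$WebhookUrl,
        [Parameter(Mandatory)][string]$FolderName
    )
    $requestBody = New-ArchiveRequestBody -FolderName $FolderName
    Invoke-RestMethod -Method Post -Uri $WebhookUrl -Body $requestBody -ContentType 'application/json'
}

# Build (but do not send) a body for an assumed folder name.
$body = New-ArchiveRequestBody -FolderName 'SupplierA'
Write-Output $body
```

Keeping the body construction separate from the call makes it easy to check the JSON before pointing it at a real webhook.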

Within the PS script I then defined the following region to receive the parameters. They are passed in as a single object, which is then broken down into its header and body elements. The body is further converted from JSON so that the variable I wanted can be extracted. I believe it is also mandatory to name the object received by the script $WebhookData, as this is not configurable externally.

    # Get parameter value
    Param ([object]$WebhookData)

    #region Verify Webhook
    if ($WebhookData) {

        # Collect properties of WebhookData
        $WebhookName    = $WebhookData.WebhookName
        $WebhookHeaders = $WebhookData.RequestHeader
        $WebhookBody    = $WebhookData.RequestBody

        # Convert the body from JSON.
        # Note: $Input is a reserved automatic variable in PowerShell, so a different name is used here.
        $requestData = (ConvertFrom-Json -InputObject $WebhookBody)
        Write-Output "WebhookBody: $($requestData)"
        Write-Output -InputObject ('Runbook started from webhook {0}.' -f $WebhookName)

        # Extract variables
        $folderName = $requestData.FolderName
    }
    else {
        Write-Error -Message 'Runbook was not started from Webhook' -ErrorAction Stop
    }
    #endregion
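The parsing above can be tried locally before publishing the runbook by faking the object that Azure Automation would supply. This is a minimal sketch: the webhook name, header and folder value are made up for illustration, and only the three properties the runbook reads are simulated.

```powershell
# Simulated webhook payload; in Azure Automation this object is supplied by the service.
$WebhookData = [pscustomobject]@{
    WebhookName   = 'ArchiveSftpFiles'                        # hypothetical webhook name
    RequestHeader = @{ 'Content-Type' = 'application/json' }  # illustrative header
    RequestBody   = '{"FolderName":"SupplierA"}'              # illustrative body
}

# Same extraction steps as the runbook: convert the JSON body, then read the property.
$requestData = ConvertFrom-Json -InputObject $WebhookData.RequestBody
$folderName  = $requestData.FolderName

Write-Output "Runbook would process folder: $folderName"
```

Because the body arrives as a JSON string, any property added to the ADF request body becomes available on the converted object in the same way.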

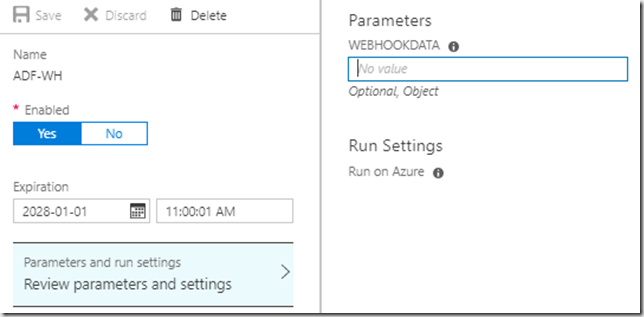

Once this is defined within the script, you can set up the webhook from the Automation end and paste the URL it generates back into ADF. It's also at this point that the webhook configuration allows you to define a parameter as part of the webhook. Without the block of code above this will not be an option, so the webhook cannot be created first as I originally assumed.

Once the parameter passing has been dealt with, the code block for connecting to the SFTP site and performing the task is straightforward.

    # Get variable values
    $userName     = Get-AutomationVariable -Name 'SftpUserName'
    $userPassword = Get-AutomationVariable -Name 'SftpPassword'
    $hostName     = Get-AutomationVariable -Name 'SftpHost'
    $port         = Get-AutomationVariable -Name 'SftpPort'

    # Create PS credential from username/password
    $userPassword   = ConvertTo-SecureString -String $userPassword -AsPlainText -Force
    $userCredential = New-Object -TypeName System.Management.Automation.PSCredential ($userName, $userPassword)

    # Create new SFTP session
    $session = New-SFTPSession -ComputerName $hostName -Credential $userCredential -Port $port -AcceptKey

    # Retrieve child objects on remote path and append .bk to every .zip or .txt file
    $remotePath = "/Data/" + $folderName + "/"
    Get-SFTPChildItem $session $remotePath | ForEach-Object {
        if ($_.FullName -like '*.zip' -or $_.FullName -like '*.txt') {
            $NewName = $_.Name + ".bk"
            Rename-SFTPFile $session -Path $_.FullName -NewName $NewName
            Write-Output "$($_.FullName) has been renamed to $($NewName)"
        }
    }

    # Close the SFTP session once the work is done
    Remove-SFTPSession -SFTPSession $session | Out-Null

The first task is to retrieve the variables from the Automation resource. These should not be hard-coded into the script, but stored as variables external to the runbook. The password is then converted to a SecureString and combined with the username to create a PSCredential, as in the format above.

Finally the task to archive the files is performed. This involves creating a new SFTP session and iterating over the child items within the remote path. Each file it finds is archived, as long as it has the correct extension.

While I originally wanted to move the files between folders, I realised this was not possible with the modules I was using. I’m sure with a bit more research something would be available out there to achieve this, specifically changing the path of a remote file.

Therefore I was limited to changing the name of a remote file by adding .bk to the end of the filename. ADF would then only pick up files ending in .zip the next time round, skipping the renamed ones.
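The renaming rule itself can be checked without an SFTP connection. The sketch below pulls the extension test into a hypothetical `Get-ArchivedName` function and runs it over some made-up file names; it is not part of the runbook, just a way to see which files would be renamed on a given run.

```powershell
# Hypothetical helper: returns the archived name for files the runbook would rename,
# or nothing for files it would leave alone.
function Get-ArchivedName {
    param([Parameter(Mandatory)][string]$Name)
    if ($Name -like '*.zip' -or $Name -like '*.txt') {
        return "$Name.bk"
    }
    return $null
}

# Made-up remote file names, including one already archived by a previous run.
$remoteFiles = 'sales.zip', 'notes.txt', 'sales_old.zip.bk', 'readme.md'

foreach ($file in $remoteFiles) {
    $newName = Get-ArchivedName -Name $file
    if ($newName) {
        Write-Output "$file would be renamed to $newName"
    }
    else {
        Write-Output "$file would be left as-is"
    }
}
```

Files that already end in .bk no longer match either pattern, so a second run leaves them untouched.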

Conclusion

I’m hoping this will be useful for anyone in the same situation. I’ve used the webhook parameters multiple times now. While I didn’t achieve my original goal of moving the files, a small workaround still satisfied my original requirement of excluding archived files from future process runs. There are also some other code snippets here that might be a good reference for future implementations, particularly the PS credential creation. Hope it helps!
