With SQL Server 2014 due for release soon, there’s a lot of talk about how little is changing for those of us more interested in Business Intelligence. We’ll be getting clustered, updatable columnstore, which is very convenient, but I’ve been looking into the potential benefits of other new features. The real gem for me is the potential of memory-optimised tables or in-memory OLTP (previously known as Project Hekaton).

I’ve had a few questions around how columnstore and memory-optimised tables differ – they’re both in-memory solutions for data storage, right?

The big difference comes in disk usage. For a columnstore index to exist, there must also be a physical table written to disk. Any updates to the table, even in SQL Server 2014, will require disk writes, and log writes too if you’re not bulk loading. Memory-optimised tables avoid most of this overhead: we can push the staging data straight into memory, and with SCHEMA_ONLY durability the data is never persisted to disk at all.

There are plenty of uses for this new type of table, but it’s the traditional ETL routine I’m interested in. We would normally pull data from our source systems, place it in a staging database while we clean and validate it, then write the cleaned data to the warehouse.

The data written to the staging database is transient; it’s only of use during the load process. Writing this data to disk each time is therefore redundant, and I believe this is where memory-optimised tables will really have an impact.

To prove this theory, I set up a quick demonstration. I started by creating two identical tables, one physical, one memory-optimised, both schema copies of [AdventureWorks2012].[Sales].[SalesOrderDetail].

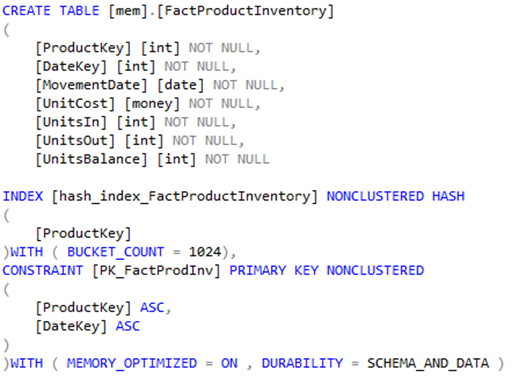

The memory-optimised table was created using the following script:
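The script itself has not survived in this copy of the post, so what follows is only a minimal sketch of what such a script might look like, not the one actually used. The database name `StagingDemo`, the container path, the table and constraint names, and the bucket count are all hypothetical. The column list follows the AdventureWorks2012 schema, except that the identity and computed columns are stored as plain values, since SQL Server 2014 does not allow computed columns on memory-optimised tables.

```sql
-- Assumption: a hypothetical staging database called StagingDemo.
-- A memory-optimised filegroup and container must exist before any
-- memory-optimised table can be created.
ALTER DATABASE StagingDemo
    ADD FILEGROUP StagingDemo_InMemory CONTAINS MEMORY_OPTIMIZED_DATA;

ALTER DATABASE StagingDemo
    ADD FILE (NAME = N'StagingDemo_InMemory',
              FILENAME = N'C:\Data\StagingDemo_InMemory')  -- hypothetical path
    TO FILEGROUP StagingDemo_InMemory;
GO

USE StagingDemo;
GO

-- Schema copy of Sales.SalesOrderDetail, held in memory.
CREATE TABLE dbo.Staging_SalesOrderDetail_InMemory  -- hypothetical name
(
    SalesOrderID          INT              NOT NULL,
    SalesOrderDetailID    INT              NOT NULL,  -- IDENTITY in the source table
    CarrierTrackingNumber NVARCHAR(25)     NULL,
    OrderQty              SMALLINT         NOT NULL,
    ProductID             INT              NOT NULL,
    SpecialOfferID        INT              NOT NULL,
    UnitPrice             MONEY            NOT NULL,
    UnitPriceDiscount     MONEY            NOT NULL,
    LineTotal             NUMERIC(38, 6)   NOT NULL,  -- computed in the source table
    rowguid               UNIQUEIDENTIFIER NOT NULL,
    ModifiedDate          DATETIME         NOT NULL,

    -- Every memory-optimised table needs at least one index. The bucket
    -- count is a guess and should be sized to the expected number of rows.
    CONSTRAINT PK_Staging_SalesOrderDetail_InMemory
        PRIMARY KEY NONCLUSTERED HASH (SalesOrderID, SalesOrderDetailID)
        WITH (BUCKET_COUNT = 262144)
)
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_AND_DATA);
GO
```

`SCHEMA_AND_DATA` is chosen here on the assumption that the test used the default, fully durable setting, which still writes to the transaction log.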

I then built the simplest of packages: a data flow to move data from AdventureWorks2012.Sales.SalesOrderDetail to a ‘staging’ table, then read the data from the staging table and write it back to a disk-based table, representing our final warehouse.

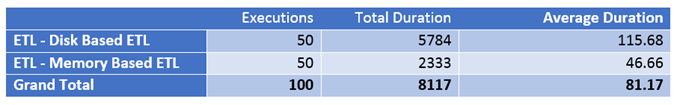

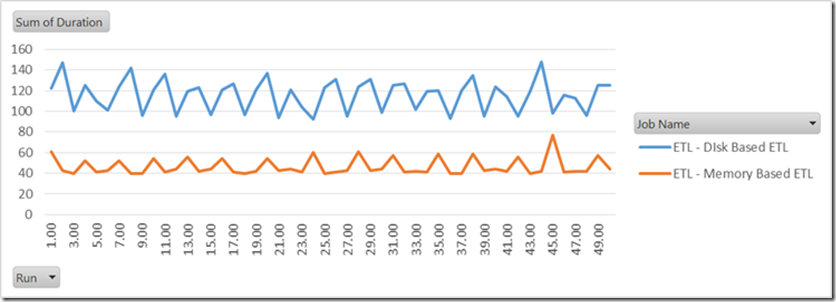

I configured two versions of the package, one where the staging table was disk-based and one where it was our memory-optimised table. I deployed and scheduled these packages as alternating jobs and kicked them off for a few hours to see how performance varied over time. The results are as follows:

The initial results are very promising: we see a huge reduction in execution time when using in-memory tables for our transient ETL data. We’re still performing minimal logging with this setup, and you can reduce this even further by switching to SCHEMA_ONLY durability, but just by bypassing the disk-based staging table we’ve cut our ETL window in half.
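As a rough illustration of the SCHEMA_ONLY option, the sketch below recreates a staging table with non-durable data. The table and constraint names are hypothetical and the column list is trimmed for brevity. SQL Server 2014 does not support ALTER TABLE on memory-optimised tables, so switching durability means dropping and recreating the table rather than altering it.

```sql
-- Hypothetical staging table; assumes the database already has a
-- MEMORY_OPTIMIZED_DATA filegroup.
IF OBJECT_ID(N'dbo.Staging_SalesOrderDetail_SchemaOnly', N'U') IS NOT NULL
    DROP TABLE dbo.Staging_SalesOrderDetail_SchemaOnly;
GO

CREATE TABLE dbo.Staging_SalesOrderDetail_SchemaOnly
(
    SalesOrderID       INT      NOT NULL,
    SalesOrderDetailID INT      NOT NULL,
    OrderQty           SMALLINT NOT NULL,
    ProductID          INT      NOT NULL,
    UnitPrice          MONEY    NOT NULL,
    ModifiedDate       DATETIME NOT NULL,

    -- Bucket count is a guess; size it to the expected number of rows.
    CONSTRAINT PK_Staging_SalesOrderDetail_SchemaOnly
        PRIMARY KEY NONCLUSTERED HASH (SalesOrderID, SalesOrderDetailID)
        WITH (BUCKET_COUNT = 262144)
)
-- SCHEMA_ONLY keeps the table definition across restarts but not the rows,
-- and row changes are not written to the transaction log.
WITH (MEMORY_OPTIMIZED = ON, DURABILITY = SCHEMA_ONLY);
GO
```

Because the rows are lost on a restart or failover, this only suits data that can be reloaded from source, which is exactly the case for a transient staging table.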

There are obvious hardware limits to how this can be used, and in-memory tables have their own limitations, but implemented carefully it could change the face of warehouse loading drastically.
