As you may all know, Terraform is well suited to deploying resources and infrastructure into your Azure environment. However, Terraform can also be used to automate and consistently deploy Azure Policies, which can be defined before any resources are created. In this blog, I will cover how you can import policies into your Terraform state and then assign them to an Azure Resource Group, securing your landing zone before you deploy any resources.

Importing the Policy into the State File

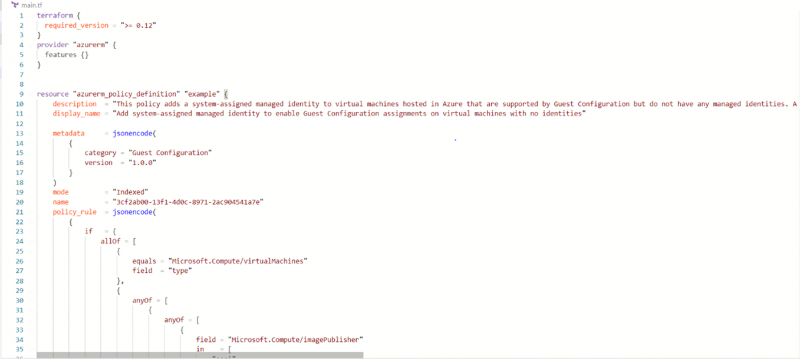

In order to assign a policy, you must first define it. Writing the definition by hand requires some fairly complex Terraform, which can be slightly painful. However, with terraform import and terraform show, the Terraform code can be generated for you, making it easier to get going and deploy the policies.

Firstly, create your policy definition resource block without any arguments, so it looks like the below:

    resource "azurerm_policy_definition" "example" {
    }
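For terraform init to succeed, the configuration also needs to declare the azurerm provider. A minimal sketch is shown here. The version constraint is an assumption (the 2.x series, which as far as I know is the series that includes the azurerm_policy_assignment resource used later in this post), so pin whichever version you have tested against.

```hcl
# Minimal provider setup. The version constraint below is an assumption,
# not something stated in this post.
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 2.0"
    }
  }
}

# The azurerm provider requires a features block, even when it is empty.
provider "azurerm" {
  features {}
}
```

Authentication (for example via the Azure CLI) is left out of this sketch.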

Once you have initialised the working directory with terraform init, you can import an Azure Policy into your state by running the following command:

    terraform import azurerm_policy_definition.example <definition id>

The definition ID can be obtained in the Azure Portal by navigating to Policy, selecting the policy you wish to import, and copying its definition ID.

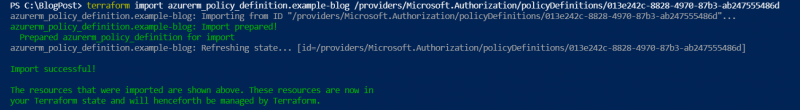
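As an aside, Terraform 1.5 and later also allow an import to be declared in configuration rather than run from the command line. The block below is a sketch of that alternative, not the method used in this post, and the id value is left as the same placeholder as above.

```hcl
# Declarative alternative to the terraform import command (Terraform 1.5+).
# Replace the placeholder with the definition ID copied from the portal.
import {
  to = azurerm_policy_definition.example
  id = "<definition id>"
}
```

With this approach the import happens during terraform plan and terraform apply. If no matching resource block exists yet, running terraform plan -generate-config-out=generated.tf asks Terraform to write one for you (generated.tf is just an illustrative file name).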

To verify that the import has been successful, check that your command window shows the message below, stating that the import was successful.

Generating the Terraform Code for Policy Definition

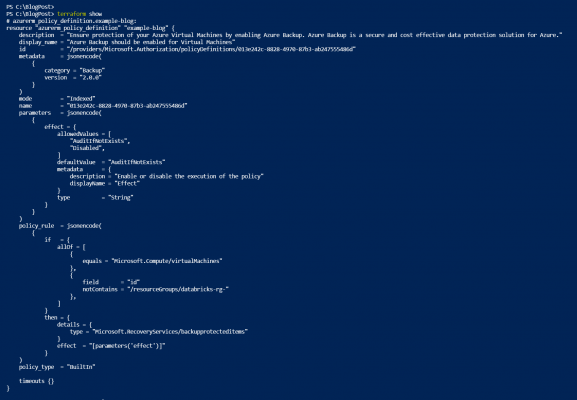

Now that the policy has been imported into the state, the Terraform code needs to be generated so that the definition can be assigned and the code re-used to deploy to another Azure tenant.

If you now run terraform show, Terraform will print the contents of the state file as code. This code can be copied into your main.tf file, filling in the empty resource block created earlier.
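To give a feel for the result, here is a sketch of what a cleaned-up definition might look like in main.tf. Every value below is hypothetical (an "allowed locations" style rule chosen for illustration), so your imported policy will differ. Note that terraform show also prints read-only attributes such as id, which need to be removed before the code will validate.

```hcl
# Hypothetical example of a policy definition after copying it from
# terraform show and removing read-only attributes such as id.
# The policy rule denies resources outside a single illustrative region.
resource "azurerm_policy_definition" "example" {
  name         = "allowed-locations-example"
  policy_type  = "Custom"
  mode         = "All"
  display_name = "Allowed locations (example)"

  policy_rule = <<POLICY_RULE
{
  "if": {
    "not": {
      "field": "location",
      "in": ["westeurope"]
    }
  },
  "then": {
    "effect": "deny"
  }
}
POLICY_RULE
}
```

Running terraform plan afterwards is a quick way to check that the code matches what is in state: ideally it reports no changes for this resource.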

Assigning Policy Definition to Resource Group

Now that the policy has been defined, we can assign it to the scope in question. In this case, we are going to assign the policy definition to a resource group that is created in the same run, using the following code:

    resource "azurerm_resource_group" "example" {
      name     = "test-resources-blog-post"
      location = "West Europe"
    }

    resource "azurerm_policy_assignment" "example" {
      name                 = "example-policy-assignment-blog-post"
      scope                = azurerm_resource_group.example.id
      policy_definition_id = azurerm_policy_definition.example.id
      description          = "Policy Assignment created via an Acceptance Test"
      display_name         = "My Example Policy Assignment-Blog-Post"
    }

This code simply states that we will create a resource group called "test-resources-blog-post" in West Europe.

We then assign the policy definition, referenced by the ID of the definition we imported further up, and apply this policy at the scope of the resource group that we are going to create.

Once this has been done, we can run the three Terraform commands in this order:
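One caveat for readers on newer provider versions: to the best of my knowledge, azurerm_policy_assignment was deprecated during the 2.x series and is not available from azurerm 3.0 onwards, where scope-specific resources take its place. Assuming that is the case for your provider version, the resource group assignment above could be sketched as follows.

```hcl
# Sketch of the same assignment using the scope-specific resource found in
# newer azurerm versions. The scope is given via resource_group_id.
resource "azurerm_resource_group_policy_assignment" "example" {
  name                 = "example-policy-assignment-blog-post"
  resource_group_id    = azurerm_resource_group.example.id
  policy_definition_id = azurerm_policy_definition.example.id
  description          = "Policy Assignment created via an Acceptance Test"
  display_name         = "My Example Policy Assignment-Blog-Post"
}
```

Check the documentation for the provider version you are pinned to before swapping one for the other.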

- terraform init

- terraform plan

- terraform apply

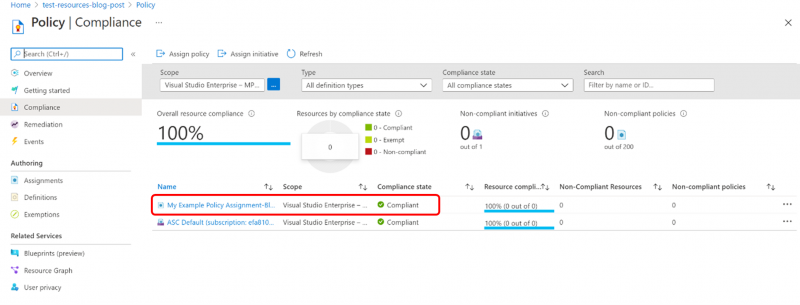

You should then be presented with a success message, and you can verify that the policy has been deployed by navigating to the newly created resource group and confirming that the policy has been applied.

Now that one policy has been deployed, it is easier to rapidly import others into Terraform, giving you the ability going forwards to generate and deploy policies into Azure consistently and rapidly.
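If you would rather confirm the result from the command line as well as the portal, one option is to add output blocks so that terraform apply prints the relevant IDs when it finishes. This is a small sketch, and the output names are my own choice rather than anything required.

```hcl
# Optional outputs printed at the end of terraform apply.
# The output names are illustrative.
output "resource_group_name" {
  value = azurerm_resource_group.example.name
}

output "policy_assignment_id" {
  value = azurerm_policy_assignment.example.id
}
```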

I hope you enjoyed this blog.