Following on from my last blog on security within Azure Databricks, which concentrated on the implementation model for data processing for the platform, this blog concentrates on the alternative: data processing for users.

Data Processing for Users

By this, I mean data-related activities that a user is performing interactively, for instance data analysis from Data Lake.

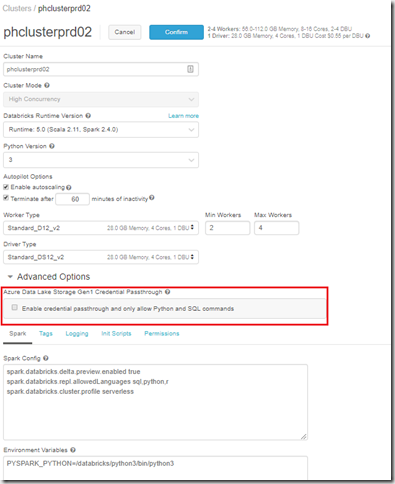

With Databricks Runtime 5.1, released in December 2018, comes the ability to use Azure AD credential pass-through. This is a huge step forward, since there is no longer a need to control user permissions through Databricks Groups / Bash and then assign those groups access to secrets in order to reach Data Lake at runtime. As mentioned previously, with the current lack of AAD support within Databricks, ACL activities had to be done on an individual basis, which was not ideal. With this feature you can now pass the authentication on to Data Lake, and since one of the advantages of Data Lake is its tight integration with Active Directory, this simplifies things considerably. It's worth noting that the feature is currently in public preview, but having tested it thoroughly I am happy with the implementation and its limitations. The feature also requires a premium workspace and only works with high concurrency clusters, both of which you'd expect to use in this scenario.

The other good thing is that it's incredibly easy to enable this functionality, as it is controlled through the cluster configuration. To enable it, navigate to the cluster configuration page, select runtime 5.1 or higher, and expand the advanced options. At this point you will see a tick box which needs to be checked (see below). This adds another line to your Spark config. It's actually good to see it implemented in this way, as it sticks to the Microsoft mantra of keeping things simple.

Once enabled, only connections into Data Lake via adl:// are valid. Any existing connections through the DBFS, including Databricks databases whose unmanaged tables route via DBFS mounts, will stop working. This is a current limitation and may be fixed at GA (although technically you could rebuild the tables using the adl:// path if this was an issue).
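As a minimal sketch of what a direct path looks like, the helper below builds a Data Lake Store URI from an account name and a folder path. The account name `mydatalake` and the folder are hypothetical, and the `adl://<account>.azuredatalakestore.net/` form is assumed to be the scheme your lake uses.

```python
def adl_uri(account: str, path: str) -> str:
    """Build a direct Data Lake Store URI (no DBFS mount involved)."""
    # Strip any leading slash so the URI never contains a double slash.
    return f"adl://{account}.azuredatalakestore.net/{path.lstrip('/')}"


# Hypothetical account and folder, for illustration only.
raw_sales = adl_uri("mydatalake", "/raw/sales/2019/01")
print(raw_sales)
```

In a notebook attached to a pass-through cluster, a path like this could then be handed to a reader such as `spark.read.parquet(raw_sales)`, with access decided by the signed-in user's Data Lake permissions.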

Great, so now my ACL can be controlled entirely within Data Lake without the need for Service Principals! But there's more.

I touched on this in my previous blog, but as part of the access implementation for Data Lake, it is preferable to define Azure Active Directory (AAD) groups to provide further flexibility moving forward. By this I mean creating AAD groups and assigning them to Data Lake, so as to create a level of abstraction away from Users/User AD Groups/Service Principals. Future modifications then only need to be made to the membership of the AAD groups that are already assigned, not to the Data Lake permissions themselves. From experience, if you do not go down this route, any additional user permissions that need applying in future have to be applied across all folders/files, which, depending on the size of the data lake, can take a particularly long time and be awkward to apply. Therefore this needs to be done upfront as part of the design!

I would suggest the following conventions for this group setup, an example being AAD_PLATFORM_ADL_ENV_RAW_READER.

- AAD – to separate out AD/AAD once sync is implemented.

- PLATFORM – the platform or project/department this group is used by.

- ADL – the resource on the platform that the group is used by.

- ENV – the environment on which the resource resides (prod/non-prod).

- RAW – the layer within the lake the permissions will be applied to.

- READER – the permission the group will have access to.
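The convention above can be sketched as a small helper that joins the segments into a group name. The function name and the validation rules are my own assumptions for illustration, not part of any Azure tooling.

```python
def group_name(platform, resource, env, layer, permission, prefix="AAD"):
    """Join the naming segments into one upper-case, underscore-separated name."""
    parts = [prefix, platform, resource, env, layer, permission]
    for part in parts:
        # Each segment must be non-empty and must not itself contain the separator.
        if not part or "_" in part:
            raise ValueError(f"invalid segment: {part!r}")
    return "_".join(part.upper() for part in parts)


print(group_name("platform", "adl", "prod", "raw", "reader"))
```

Generating names this way, not typing them by hand, keeps casing and segment order consistent across environments.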

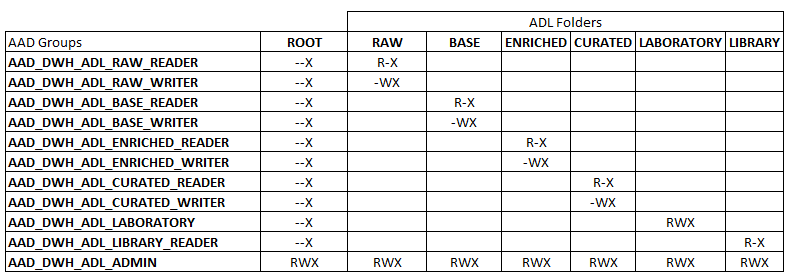

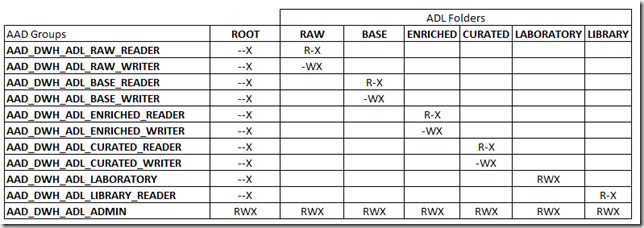

You would then need to create the following AAD groups to cover all areas across the Data Lake. This assumes using our standard Data Lake layer/folder pattern first introduced by Ust’s blog back in 2016.

- AAD_PLATFORM_ADL_PROD_RAW_READER

- AAD_PLATFORM_ADL_PROD_RAW_WRITER

- AAD_PLATFORM_ADL_PROD_BASE_READER

- AAD_PLATFORM_ADL_PROD_BASE_WRITER

- AAD_PLATFORM_ADL_PROD_ENRICHED_READER

- AAD_PLATFORM_ADL_PROD_ENRICHED_WRITER

- AAD_PLATFORM_ADL_PROD_CURATED_READER

- AAD_PLATFORM_ADL_PROD_CURATED_WRITER

- AAD_PLATFORM_ADL_PROD_LABORATORY

- AAD_PLATFORM_ADL_PROD_LIBRARY_READER

- AAD_PLATFORM_ADL_PROD_ADMIN
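The list above follows a regular pattern, so it can be generated per environment and not maintained by hand. This is a sketch only: the layer and role names are taken from the list, while the function and constant names are hypothetical.

```python
from itertools import product

LAYERS = ["RAW", "BASE", "ENRICHED", "CURATED"]      # layers with reader/writer pairs
ROLES = ["READER", "WRITER"]
EXTRAS = ["LABORATORY", "LIBRARY_READER", "ADMIN"]   # groups without a reader/writer pair


def lake_groups(platform="PLATFORM", env="PROD"):
    """Return every AAD group name needed for one platform and environment."""
    stem = f"AAD_{platform}_ADL_{env}"
    layered = [f"{stem}_{layer}_{role}" for layer, role in product(LAYERS, ROLES)]
    return layered + [f"{stem}_{extra}" for extra in EXTRAS]


for name in lake_groups():
    print(name)
```

Calling `lake_groups(env="NONPROD")` would give the matching set for a non-production lake under the same assumptions.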

The permissions applied to these groups can then be implemented using the following matrix. When adding these permissions, they need to be added to the current folder and all of its children, as both an access permission entry and a default permission entry. Without the default entry, files and folders created in Data Lake in the future will not inherit these permissions.
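To make the access/default pairing concrete, the sketch below builds both entries for one group. It assumes the POSIX-style `group:<object-id>:<permissions>` entry format with a `default:` prefix for the inherited copy. The object ID and the `r-x` permission string are placeholders, not values from the matrix, and applying the entries recursively to existing children is not shown.

```python
from typing import List


def acl_entries(group_object_id: str, permissions: str) -> List[str]:
    """Return the access entry and the matching default entry for one AAD group."""
    # Permissions are a three-character string such as 'r-x' or 'rwx'.
    if len(permissions) != 3 or any(c not in "rwx-" for c in permissions):
        raise ValueError(f"invalid permission string: {permissions!r}")
    access = f"group:{group_object_id}:{permissions}"
    # The default entry is what newly created children inherit.
    return [access, f"default:{access}"]


# Placeholder object ID, for illustration only.
for entry in acl_entries("00000000-0000-0000-0000-000000000000", "r-x"):
    print(entry)
```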

Once this is complete, you would add the Users/AD User Groups/Service Principals into these AAD groups to provide access and the pass-through permissions work as expected.

This method separates out both read/write and the Data Lake layers, meaning that unless specifically granted, there is a much more limited footprint in terms of access permissions into Data Lake. Combining this with the AD credential pass-through from Databricks provides a suitable solution for implementing security using the Databricks/Data Lake combo.
