Exploring the Potential of Managed Airflow in Azure Data Factory

Microsoft recently announced the integration of Apache Airflow into its Azure cloud offering. After much anticipation, Managed Airflow in Azure Data Factory (ADF) is now in public preview and can be tested in several Azure regions. This blog post explains the basics of Airflow, how the Azure integration works and how you can use it to your advantage.

What is Apache Airflow?

Apache Airflow is a community-driven open-source platform to programmatically author, schedule and monitor data workflows. Originally developed at Airbnb and since adopted by many companies worldwide, including Yahoo, Intel and PayPal, it has become an important and popular project in the Apache ecosystem.

The platform is Python-based and allows for the visual management of DAGs. ‘DAG’ stands for Directed Acyclic Graph, a concept that users of platforms like Apache Spark and Hadoop might already be familiar with. A DAG resembles a flow chart: the nodes represent tasks to be executed and the edges connecting them represent the dependencies between those tasks. The ‘acyclic’ part of the name means that no task can depend on itself, either directly or through a chain of other tasks, which rules out infinite cycles.
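To make the ‘acyclic’ requirement concrete, here is a minimal sketch that uses only Python's standard library rather than Airflow itself. The task names (extract, transform, load, report) are hypothetical and chosen purely for illustration. It shows how a set of dependencies yields a valid run order, and how adding a dependency that closes a loop is rejected.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical tasks: each key maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}


def execution_order(graph):
    """Return one valid run order; raises CycleError if the graph contains a cycle."""
    return list(TopologicalSorter(graph).static_order())


order = execution_order(dependencies)
print(order)

# Making 'extract' depend on 'report' closes a loop, so this is no longer a DAG.
cyclic = {**dependencies, "extract": {"report"}}
try:
    execution_order(cyclic)
    has_cycle = False
except CycleError:
    has_cycle = True
print(has_cycle)
```

Airflow applies the same idea when it loads a workflow definition: every task must be reachable in some order that respects its dependencies.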

Example of a DAG flow

Within Airflow, DAGs are declared in Python and define tasks for execution. The ability to configure the tasks in Python using various operators and libraries makes Airflow dynamic and extensible. This flexibility is one of the main reasons for Airflow’s popularity. Another advantage of Airflow is its scalability, which allows tasks to be scheduled and orchestrated across multiple worker nodes on demand. The interface makes it easy to manage and monitor workflows, inspect logs and troubleshoot problems.

Why use Managed Airflow in Azure?

The main advantage of Managed Airflow is that it combines the best of both worlds: the flexibility and extensibility of Airflow, backed by an active open-source community, with the convenience and security of an Azure-managed environment. ADF jobs can now be executed within Airflow DAGs, which is a welcome extension of ADF’s orchestration capabilities. Managed Airflow in ADF has the following key features:

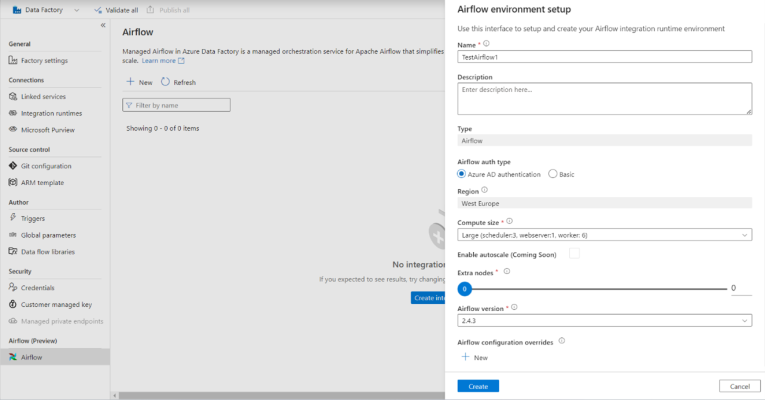

- Ease of set-up – Managed Airflow allows for a quick automated setup of a fully managed environment in Data Factory. A self-hosted Airflow installation normally involves setting up several components and is often deployed on Kubernetes, so this is a significant step up in terms of convenience.

- Built-in security and authentication – Automatic encryption and Azure Active Directory role-based authentication make it easier to address security concerns.

- Autoscaling – worker nodes are scaled automatically between a configured minimum and maximum number of allowed nodes.

- Automatic upgrades – the ADF Managed Airflow version will be upgraded periodically, following the upgrade and patch schedule of the open-source Apache Airflow releases.

- Integration with Azure – Managed Airflow in ADF makes it easy to use other components of Azure such as ADF pipelines, Cosmos DB, Azure Key Vault, Azure Monitor and ADLS Gen 2, to name a few.
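As a rough illustration of the autoscaling idea in the list above, the sketch below clamps a desired worker count between a minimum and a maximum. It is not Azure's actual scaling algorithm: the function name `target_workers`, the `tasks_per_worker` parameter and the queue-based sizing rule are all assumptions made for this example.

```python
import math


def target_workers(queued_tasks, tasks_per_worker, min_workers, max_workers):
    """Hypothetical sizing rule: enough workers for the queue, kept within the bounds."""
    if tasks_per_worker <= 0:
        raise ValueError("tasks_per_worker must be positive")
    if min_workers > max_workers:
        raise ValueError("min_workers cannot exceed max_workers")
    # Assumed rule: one worker per `tasks_per_worker` queued tasks, rounded up.
    desired = math.ceil(queued_tasks / tasks_per_worker)
    # Never drop below the minimum or grow past the maximum.
    return max(min_workers, min(desired, max_workers))


print(target_workers(queued_tasks=0, tasks_per_worker=16, min_workers=1, max_workers=5))
print(target_workers(queued_tasks=40, tasks_per_worker=16, min_workers=1, max_workers=5))
print(target_workers(queued_tasks=1000, tasks_per_worker=16, min_workers=1, max_workers=5))
```

Whatever rule the service actually applies, the minimum and maximum act as the guard rails: an idle environment stays at the minimum, and a burst of work cannot scale past the maximum.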

Creating a new Managed Airflow environment.

Why is Managed Airflow important?

This is a welcome announcement and we’re excited to see how Managed Airflow develops within ADF. This feature will give Azure users more customisation and extensibility than ADF’s orchestration normally provides, and the convenience of the managed environment could resolve some of the problems that existing Apache Airflow users are facing. Those who might benefit the most are users seeking a ‘lift and shift’ of their existing DAGs into Azure. We’ll continue exploring and posting about this tool as it is developed further by Azure and the open-source community.
