Microsoft have recently added support for calling Azure Functions natively within Azure Data Factory (ADF). Before this, you had only one option if you wanted to leverage serverless compute: a web activity. While this certainly did the job, it wasn't ideal, because the function or host key had to be included in the HTTP request to authenticate with the function, which exposed it within the pipeline code. As such, I expect quite a few people's pipelines will be in a similar state to my own. I have recently been through the process of upgrading mine to the new native ADF functionality, and I thought it would be worth sharing the process I went through so you don't suffer the same pains!

Linked Service

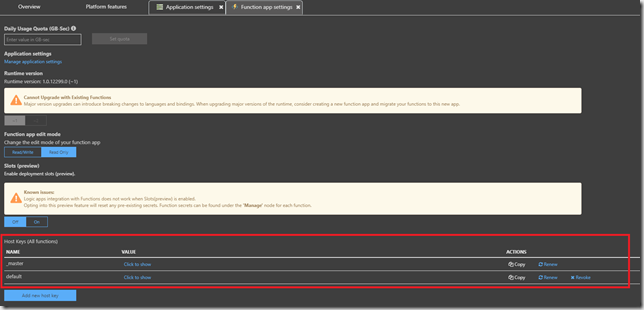

The first step in this process is to add a new linked service to your data factory. When you try to do this, you'll probably do what always seems to happen when first looking for the Azure Function connector: search the list in front of you. That list is filtered to data stores by default, so the connector won't appear. You'll need to switch the tab at the top to Compute, at which point you'll see it. Next, add the URL of your function app's endpoint, which will be in the format https://functionappname.azurewebsites.net. You'll then need to supply a key to the function app for authentication purposes.

I strongly advise you to set up an Azure Key Vault, store the key in there, and then access it in ADF as a secret. This is simple to do, promotes best practice from a security perspective, and is also useful for CI/CD purposes. The key can be either a function key (specific to a single function) or a host key (access to all functions in the app). Most of the tutorials on the web, as well as the official Microsoft content, currently reference the function key, so at first I was slightly worried you could only call functions with a function key. This is not the case, and the host key can be used too. If you're not too familiar with functions, the host keys can be found within the function app settings of your function app.
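Behind the UI, the linked service is stored as JSON. The sketch below generates roughly what that definition looks like when the key comes from Key Vault, based on my understanding of the ADF schema (an `AzureFunction` linked service with `functionAppUrl` and `functionKey` properties). The linked service, Key Vault and secret names are hypothetical placeholders, so compare the output against the JSON view of your own linked service.

```python
import json


def build_function_linked_service(name, function_app_url, key_vault_ls, secret_name):
    """Return an ADF linked service definition for an Azure Function app.

    The function/host key is referenced as a Key Vault secret rather than
    stored inline, so it never appears in the pipeline JSON.
    """
    return {
        "name": name,
        "properties": {
            "type": "AzureFunction",
            "typeProperties": {
                "functionAppUrl": function_app_url,
                # Resolved from Key Vault at runtime via a Key Vault linked service.
                "functionKey": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": key_vault_ls,
                        "type": "LinkedServiceReference",
                    },
                    "secretName": secret_name,
                },
            },
        },
    }


if __name__ == "__main__":
    # All names below are hypothetical placeholders.
    linked_service = build_function_linked_service(
        "AzureFunctionLinkedService",
        "https://functionappname.azurewebsites.net",
        "AzureKeyVaultLinkedService",
        "FunctionAppHostKey",
    )
    print(json.dumps(linked_service, indent=2))
```

Swapping between a function key and a host key only changes which secret you store in Key Vault; the definition itself stays the same.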

Pipelines

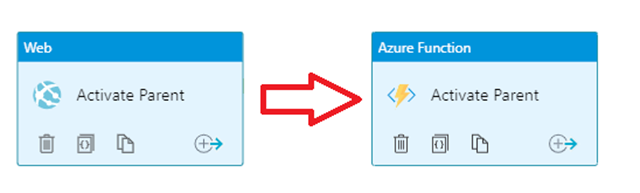

The next step in the process is replacing those web activities with native Azure Function activities. While this is a fairly simple process, you'll no longer be calling GET on an HTTP webhook directly via a URL string with the parameters passed in it. Instead, you'll need to specify the function you want to call and pass in the parameters via a JSON body as part of a POST call to the endpoint. For any functions that don't require parameters, use GET rather than POST with an empty body, as ADF has issues validating a body without any content.
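The rule above (POST with a JSON body when there are parameters, GET with no body when there aren't) can be captured in a small helper. This is a minimal sketch assuming the activity JSON shape I believe ADF uses (`AzureFunctionActivity` with `functionName`, `method` and `body` under `typeProperties`); the activity, linked service and function names are hypothetical.

```python
import json


def build_function_activity(activity_name, linked_service, function_name, params=None):
    """Build an ADF Azure Function activity definition.

    Parameters are sent as a JSON body with POST. With no parameters the
    activity uses GET and omits the body entirely, since ADF struggles to
    validate an empty body.
    """
    type_properties = {"functionName": function_name}
    if params:
        type_properties["method"] = "POST"
        type_properties["body"] = params
    else:
        type_properties["method"] = "GET"
    return {
        "name": activity_name,
        "type": "AzureFunctionActivity",
        "linkedServiceName": {
            "referenceName": linked_service,
            "type": "LinkedServiceReference",
        },
        "typeProperties": type_properties,
    }


if __name__ == "__main__":
    # Hypothetical functions: one takes parameters, one does not.
    with_params = build_function_activity(
        "Load Customer", "AzureFunctionLinkedService", "LoadTable", {"tableName": "Customer"}
    )
    without_params = build_function_activity(
        "Refresh Cache", "AzureFunctionLinkedService", "RefreshCache"
    )
    print(json.dumps(with_params, indent=2))
    print(json.dumps(without_params, indent=2))
```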

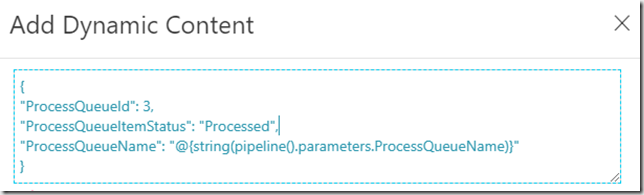

To pass the parameters in the body, you'll need to wrap them in JSON in the format shown in the screenshot. It includes examples of strings, integers and expressions, but hopefully you get the point.
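As a rough illustration, the body is just a flat JSON object. The parameter names below are hypothetical, and the expressions assume ADF's `@{...}` string interpolation; the snippet prints the JSON you would paste into the activity's Body field.

```python
import json

# Hypothetical parameters showing the three kinds of value you are likely to pass.
body = {
    "tableName": "Customer",                          # literal string
    "batchSize": 500,                                 # literal integer (unquoted, so it stays numeric)
    "runId": "@{pipeline().RunId}",                   # expression evaluated by ADF at runtime
    "loadDate": "@{pipeline().parameters.LoadDate}",  # expression reading a pipeline parameter
}

body_json = json.dumps(body, indent=2)
print(body_json)
```

Note that interpolated expressions always arrive at the function as strings, so any numeric value produced by an expression may need converting on the function side.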

Function Code

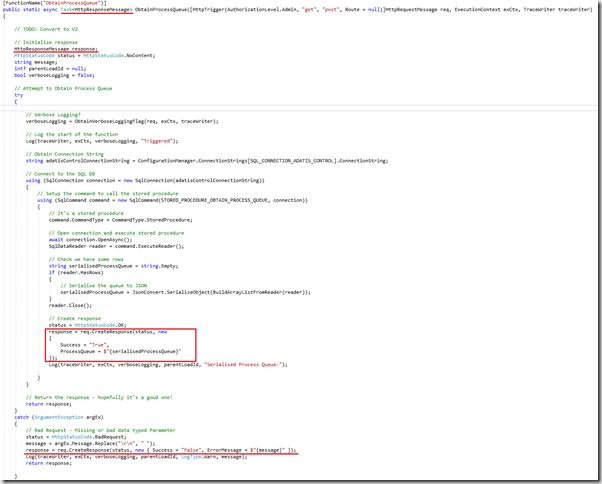

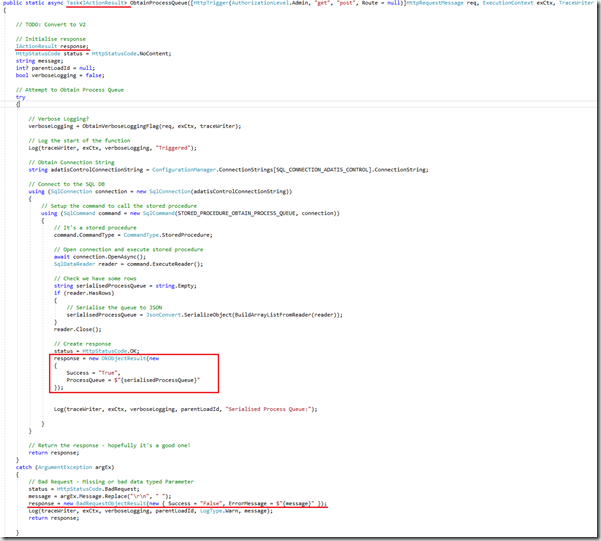

As part of this process, you'll also need to modify the C# code within your function apps. Before this upgrade, you may have been returning responses from your functions through the HttpResponseMessage object (see the example screenshot, in which I've highlighted in red the code snippets relevant to this post). The web activity in Data Factory is happy to accept a response of this type!

Now that we are using the native function activity, Data Factory will no longer accept an HTTP message as a response and instead expects a JSON object (JObject). As a result, our HttpResponseMessage needs to be replaced by an IActionResult (see the example screenshot of the changes). The change is relatively trivial: you only need to decide which type of result object to return (for example, an OkObjectResult wrapping the JObject) instead of just creating a response.
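Before wiring a function into a pipeline, it helps to know exactly what "a JSON object" means here: plain text, bare values and JSON arrays are all rejected, since a JArray is not a JObject. The Python sketch below is a local check under that assumption; it is not part of ADF or the Functions SDK, and `is_valid_adf_response` is a hypothetical helper name.

```python
import json


def is_valid_adf_response(body_text):
    """Return True if a response body parses as a JSON object (the JObject ADF expects).

    Plain text, bare JSON values (strings, numbers) and JSON arrays all fail.
    """
    try:
        parsed = json.loads(body_text)
    except json.JSONDecodeError:
        return False
    return isinstance(parsed, dict)


if __name__ == "__main__":
    print(is_valid_adf_response('{"status": "Succeeded", "rowsCopied": 42}'))  # True: object
    print(is_valid_adf_response('[{"status": "Succeeded"}]'))                  # False: array
    print(is_valid_adf_response("Succeeded"))                                  # False: plain text
```

If a function naturally produces a list, wrapping it in an object (for example `{"results": [...]}`) keeps the response acceptable.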

To use this interface, you'll need to import the Microsoft.AspNetCore.Mvc libraries through NuGet. At this point, I ran into an issue, since the dependencies required by Microsoft.AspNetCore.Mvc conflicted with my existing libraries, to the extent that separate NuGet packages required my Newtonsoft.Json library to be both equal to version 9.0.1 and greater than version 11.0.1. To solve this, I imported separate parts of the main library instead, in this instance Microsoft.AspNetCore.Mvc.Abstractions and Microsoft.AspNetCore.Mvc.Core. These two packages did not have the same constraints as the full framework package.

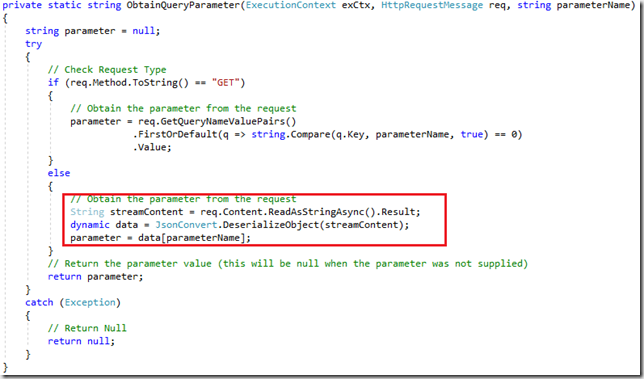

While the above code changes allowed me to send and receive a JObject, in most scenarios I also needed to POST parameters into the function, so I had to modify the code to read them too (see the highlighted code block in the screenshot). The request content is now treated as a stream, which needs to be read and deserialised from JSON into an object. The parameters can then be read by indexing into that object by name.
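The same sequence can be sketched in Python for illustration (this is not the C# from my function app): read the request stream, deserialise the JSON, then look up each parameter by name. The parameter names are hypothetical, and raising on a missing parameter is my own addition, so a bad body fails loudly instead of producing a malformed response for ADF.

```python
import io
import json


def read_parameters(body_stream, required):
    """Read a POSTed JSON body from a binary stream and return the named parameters."""
    # Read the whole stream into a string, then deserialise it.
    raw = body_stream.read().decode("utf-8")
    data = json.loads(raw) if raw.strip() else {}
    if not isinstance(data, dict):
        raise ValueError("Request body must be a JSON object")
    # Index into the deserialised object by parameter name.
    missing = [name for name in required if name not in data]
    if missing:
        raise ValueError(f"Missing parameters: {', '.join(missing)}")
    return {name: data[name] for name in required}


if __name__ == "__main__":
    # Simulates a body for two hypothetical parameters.
    stream = io.BytesIO(b'{"tableName": "Customer", "batchSize": 500}')
    print(read_parameters(stream, ["tableName", "batchSize"]))
```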

Errors

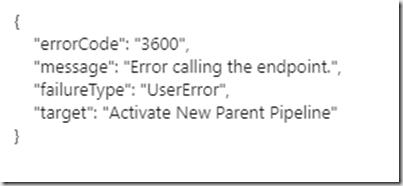

If you've found this blog through a Google search, you've more likely than not hit the following generic error: { "errorCode": "3600", "message": "Error calling the endpoint.", "failureType": "UserError", "target": "Activate New Parent Pipeline" }. This is not a particularly helpful error, as it only tells you that ADF did not receive a correctly formatted response. More often than not, this will be due to an issue in your function code rather than something in ADF. I spent a while thinking it was an authentication error when it was not! You may get a more verbose error if you check the function app logs through something such as Application Insights. Again, I would strongly recommend you set this up as part of your Azure Functions service, as it's not ideal to rely on ADF to debug this. Alternatively, I would suggest running the function locally and calling it from Postman, which gives you more control over the variables at runtime and lets you inspect them through the debugger's Locals window.
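If you'd rather script the check than use Postman, a minimal Python equivalent is sketched below. It assumes an HTTP-triggered function on the default `api` route prefix and passes the key in the `x-functions-key` header (Azure Functions also accepts it as a `code` query string parameter). The function name and key are placeholders. Sending the same body ADF sends lets you see the function's real error, or confirm the response is a JSON object, without going through the pipeline.

```python
import json
import urllib.request


def build_function_request(base_url, function_name, key, params):
    """Build (but do not send) a POST request to an HTTP-triggered Azure Function."""
    url = f"{base_url.rstrip('/')}/api/{function_name}"
    return urllib.request.Request(
        url,
        data=json.dumps(params).encode("utf-8"),
        headers={"Content-Type": "application/json", "x-functions-key": key},
        method="POST",
    )


def call_function(req, timeout=30):
    """Send the request and return (status, body_text).

    Raises urllib.error.HTTPError for non-2xx responses; its .read() contains
    the function's error output, which is usually more useful than ADF's.
    """
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status, resp.read().decode("utf-8")
```

For example, `call_function(build_function_request("http://localhost:7071", "LoadTable", "<key>", {"tableName": "Customer"}))` would target a function running locally under Azure Functions Core Tools, whose default port is 7071; swap in `https://functionappname.azurewebsites.net` to test the deployed app with its real key.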

Conclusion

Hopefully you will find this guide useful if you're about to go through a similar process to myself. It might also be useful for anyone setting up functions in ADF for the first time, as it covers most of what's required for the two services to talk to one another.
