Let’s assume we have a company that distributes alcoholic drinks across the country. To measure its performance, the company defines a set of KPIs that evaluate, among other things, how many establishments (e.g. pubs, bars, …) display its products on their shelves. To collect this data, sales reps visit each establishment and take note of which products are on display. One way to track the data is to open a mobile application, fill in a form manually and upload the results. But what if we could automate the process of identifying the products on a shelf by simply uploading a picture? To do that, we would need to apply a machine learning algorithm to classify the image and identify each product.

To test whether the above scenario is achievable, I’ll demonstrate how to create a project using a tool called Custom Vision, a service that allows us to easily build a predictive model with just a few clicks and without the need for deep machine learning knowledge.

What is the Custom Vision Service?

Azure Custom Vision Service is a Microsoft Cognitive Services tool for tagging images using a custom computer vision model. Although very similar to Microsoft’s Computer Vision API, it has the advantage of letting us fine-tune a predictive model to a specific dataset. There are still a couple of minor disadvantages, however. For example, the service can only identify whether an object is in an image, not where it sits within the image.

Build the model

To build a predictive model with this service we can either use the web interface or the REST API with support for C# and Python.

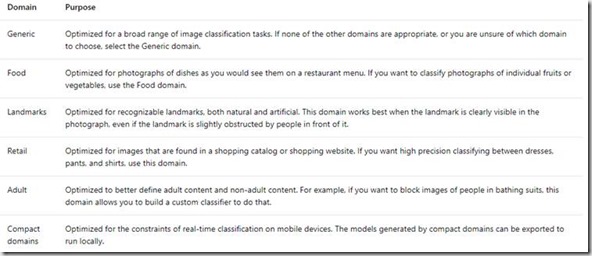

The first step was to create a project by providing a name, a domain and a resource group. When selecting a domain, we can either choose a general domain optimized for a range of images or select a specific domain optimized for a certain scenario. In my case, I selected the Food domain, given that I wanted to identify different kinds of bottles.

Microsoft’s documentation describes each domain in detail.

The next step was to upload and tag images. Here are a couple of considerations:

– To start the prototype, we need at least two different tags and a set of images for each, usually a minimum of 30 per class

– It is best practice to use a variety of good quality images (different angles, lighting, backgrounds, sizes, …) to ensure better differentiation and more accurate results from the classifier. In my case, Google was the source of the images, which was, surprisingly, a bit limited in some cases

– It is best practice to include images that represent what the classifier will find in the real world, rather than images with neutral backgrounds

– It is advised to avoid images with multiple entities. The classifier learns the characteristics that the photos have in common, so if we upload images showing both a bottle and a glass, it might end up comparing a bottle plus a glass with a single bottle
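The first consideration above is easy to check before uploading anything. The snippet below is a minimal sketch that runs locally and does not call the Custom Vision service. The `check_dataset` helper, the file names and the 30-image limit are assumptions made for illustration, with the limit taken from the rule of thumb in the list rather than from a hard service requirement.

```python
from collections import Counter

# Rule of thumb quoted in the list above, not a limit enforced by the service.
MIN_IMAGES_PER_TAG = 30


def check_dataset(tagged_images, min_per_tag=MIN_IMAGES_PER_TAG):
    """Return a list of problems found in (filename, tag) pairs.

    Hypothetical helper: it only counts images per tag locally.
    """
    counts = Counter(tag for _, tag in tagged_images)
    problems = []
    if len(counts) < 2:
        problems.append("need at least two different tags")
    for tag, n in sorted(counts.items()):
        if n < min_per_tag:
            problems.append(f"tag '{tag}' has {n} images, fewer than {min_per_tag}")
    return problems


# Made-up example: enough Baileys images, too few Others images.
images = [(f"baileys_{i}.jpg", "Baileys") for i in range(30)]
images += [(f"other_{i}.jpg", "Others") for i in range(12)]

for problem in check_dataset(images):
    print(problem)
```

A check like this says nothing about image variety or background, which still need a manual review.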

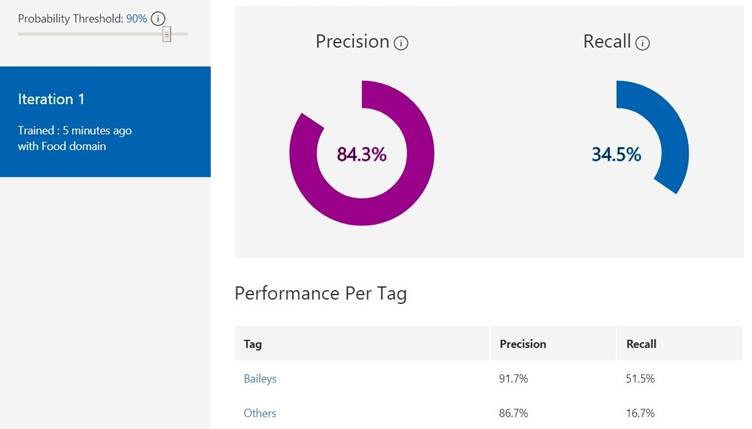

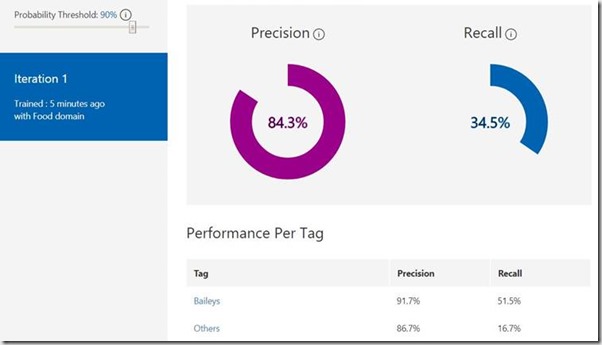

I started by uploading 60 images for 2 classes, Baileys and Others. Once the images were uploaded, I trained my model and obtained the following results.

The Precision and Recall indicators demonstrate how well the classifier is performing. Above, we can see that the analysis is done for the entire model and for each tag. It is important to note that 100% precision is usually not achievable. However, having a model with 75%+ in Precision and Recall is an indication of an effective model.

Precision – Of the images the classifier tagged, the proportion that were tagged correctly. A precision of 84.3% means that roughly 84 out of every 100 predictions the classifier made were correct. Note that this is a share of the predictions made, not of the 60 images uploaded

Recall – Of all the images that should have been tagged, the proportion the classifier actually identified. A recall of 34.5% means that, with 60 images, only about 20 were correctly identified

Probability Threshold – The slider, set by default at 90%, defines the minimum probability a prediction needs in order to count when Precision and Recall are calculated. Let’s consider the following example. The probability that image A has a Baileys bottle is 94%. If the probability threshold is 90%, then image A will be taken into consideration as a “correct prediction”.
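To make the relationship between the three indicators concrete, here is a small sketch using the standard definitions of precision and recall for a single tag. The scores are made up, and the `precision_recall` function is a hypothetical helper. It illustrates the general technique and is not a description of how the service computes its figures internally.

```python
def precision_recall(scored, tag, threshold):
    """Compute precision and recall for one tag at a probability threshold.

    scored: list of (true_tag, {tag: probability}) pairs (made-up format).
    """
    tp = fp = fn = 0
    for true_tag, probs in scored:
        predicted = probs.get(tag, 0.0) >= threshold  # prediction counts only above the threshold
        actual = true_tag == tag
        if predicted and actual:
            tp += 1  # tagged, and correctly so
        elif predicted:
            fp += 1  # tagged, but should not have been
        elif actual:
            fn += 1  # should have been tagged, but was missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up scores for the Baileys tag on five images.
scored = [
    ("Baileys", {"Baileys": 0.94}),
    ("Baileys", {"Baileys": 0.80}),
    ("Baileys", {"Baileys": 0.60}),
    ("Others", {"Baileys": 0.92}),
    ("Others", {"Baileys": 0.10}),
]

for threshold in (0.90, 0.75):
    p, r = precision_recall(scored, "Baileys", threshold)
    print(f"threshold={threshold:.2f} precision={p:.2f} recall={r:.2f}")
```

With this toy data, lowering the threshold from 90% to 75% lets one more Baileys image count as a prediction, which raises both figures. On other data a lower threshold can just as easily admit more wrong predictions and reduce precision.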

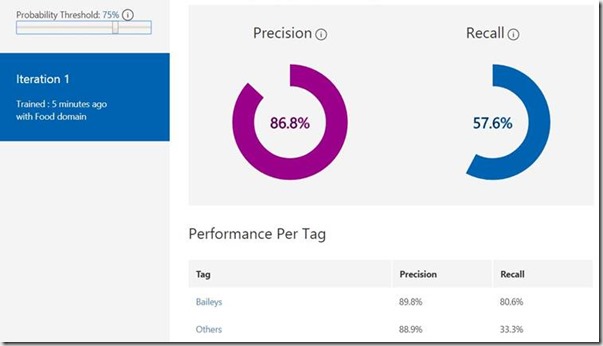

In the example below, we can see that I obtained better results when I changed the probability threshold to 75%. Based on this information I had two options: either re-tag the images that were tagged incorrectly or replace them with better ones.

Fortunately, Custom Vision Service has a very handy feature that, for each iteration, highlights which images confused the model according to the probability threshold value. In the image below, there are two images with a red square. If we hover the mouse over an image we can see the prediction for each tag. In this case, the prediction is below 90% for both tags, meaning the image was not considered in the Precision and Recall calculation.
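The same idea can be sketched in a few lines: an image is flagged when none of its tag probabilities reaches the threshold. The `confused_images` function, the image names and the probabilities are all made up for illustration. This is only an approximation of what the red squares represent, not the service’s own logic.

```python
def confused_images(predictions, threshold=0.90):
    """Return the images for which no tag reaches the probability threshold.

    predictions: {image_name: {tag: probability}} (made-up format).
    """
    return sorted(
        name
        for name, probs in predictions.items()
        if max(probs.values(), default=0.0) < threshold
    )


# Made-up predictions for three training images.
predictions = {
    "img_a.jpg": {"Baileys": 0.94, "Others": 0.03},
    "img_b.jpg": {"Baileys": 0.62, "Others": 0.41},
    "img_c.jpg": {"Baileys": 0.08, "Others": 0.88},
}

print(confused_images(predictions))        # flagged at the default 90% threshold
print(confused_images(predictions, 0.75))  # flagged at a 75% threshold
```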

After a couple of iterations we finally obtained a model we could use to run a quick test. When there are multiple iterations, we pick the one with the best results by selecting the option Make Default.

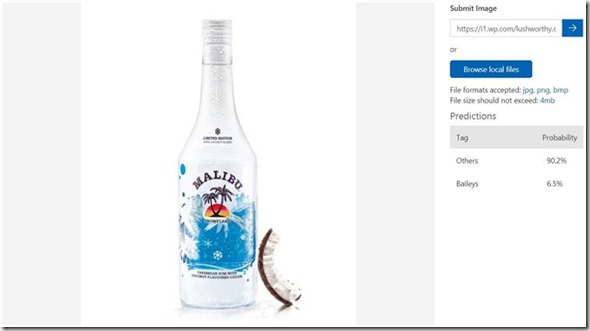

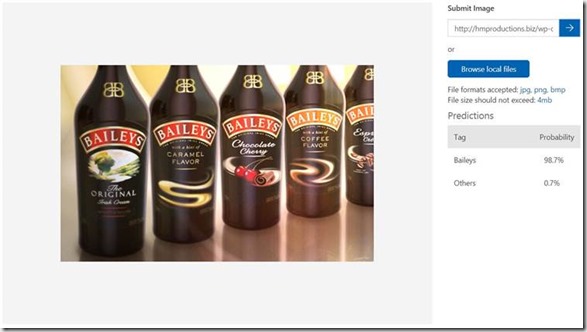

To test the model, I selected two images from the internet showing individual brands. As we can see, the model correctly classified each image.

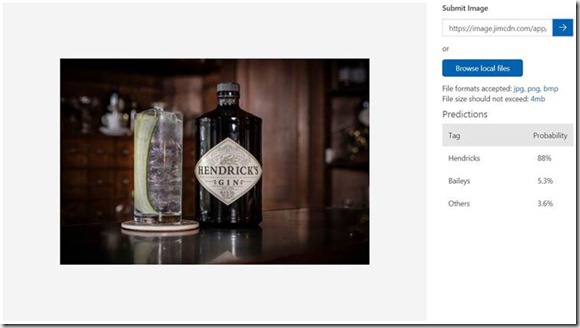

Since I was happy with the results, I decided to increase the complexity of the model by creating a new tag and uploading a new set of images. After training the model, I noticed the results were not as good as before, since the new images were confusing the model. It took a couple of iterations until I got an acceptable model. Below is the result of a quick test.

I now had a model that could correctly classify 3 different entities, so I decided to increase the challenge and added an image with multiple entities. The result I obtained helped me understand the flaw in my model.

The model decided the image should be classified as Others. Although the image contains both a Baileys and a Hendricks bottle, the probability for those two classes was too low. Here is why:

– When uploading images to the project I only used 1 tag per image. Based on that, the model will always try to classify an image with a single tag. As soon as I added more tags per image, my predictions improved

– All the examples used showed a single entity, i.e. only one type of bottle per image, except for the Others category. Example: I uploaded 30 images of Baileys bottles, 30 images of Hendricks bottles and, for the category Others, 3 different types of bottles

– My model was trained to identify bottles in images that didn’t have multiple entities. As mentioned above, the model should always be trained with images that represent what the classifier will predict

– The images in each class didn’t have enough variety. As with any machine learning model, if we increase the number of examples, the model will generally perform better

Final Considerations

With just a couple of clicks and no deep machine learning knowledge, I was able to create a predictive model that could accurately classify a set of images. With further iterations I could potentially have a model that meets the requirements of the scenario proposed at the beginning of this blog. However, would Custom Vision Service be the right tool? In my opinion the Computer Vision API would be more adequate, but this is definitely a service with massive potential.

As always, if you have any queries or considerations do let me know.
