In recent weeks, I’ve spent a fair chunk of time working with different aspects of Databricks, and a good proportion of that time went on the security and authentication aspects of the service. Our scenario is one that I expect most people will come across over the next few years: the integration of Databricks with Azure Data Lake for data processing. In this blog and the next (yup, I need 2) I intend to document the way we went about implementing the security model, breaking it up into data processing for a platform and data processing for users. Each has a slightly different implementation.

Data Processing for Platform

By this, I mean data processing activities that happen as part of a service, orchestrated through something such as Azure Data Factory, and that run on a schedule or in real time.

I expect the very first thing most people will do once they have a Databricks workspace is to mount their Data Lake. It’s by far the easiest option for getting your hands on your data, and one that’s still worth using to start off with as long as you understand the consequences. If you need to do this, I will point you in the direction of Hugh’s blog post covering the topic, which I’ve used a number of times now to remember all the different secrets/GUIDs you need. By mounting the Data Lake in your Databricks workspace, you are able to interact with the data as if it were in DBFS (the Databricks File System). This proves incredibly powerful for tools such as dbutils, which allow you to perform a vast number of file system operations. Unfortunately, the one downside to this method is that anyone with access to the workspace is able to directly interact with all layers in your Data Lake, which is not ideal. Since Data Lakes are not treated in the same way as something like an Azure SQL DB, you also won’t have those handy recovery options if something happens to your data because of this unrestricted access. This obviously poses a risk for an enterprise solution. It’s worth noting that the mount is done once, so your clusters can go down and be spun up again by other users and they would still be able to access the mount point until it is unmounted.
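For reference, a mount of this kind can be sketched roughly as below. This is a minimal sketch rather than the exact steps from Hugh’s post: it assumes an ADLS Gen1 account (the `adl://` scheme), and the helper names, the account name `mydatalake` and the mount point `/mnt/datalake` are all hypothetical.

```python
def build_adls_mount_configs(client_id, client_secret, directory_id):
    """Return the OAuth settings a service principal needs for an ADLS Gen1 mount."""
    return {
        "dfs.adls.oauth2.access.token.provider.type": "ClientCredential",
        "dfs.adls.oauth2.client.id": client_id,
        "dfs.adls.oauth2.credential": client_secret,
        "dfs.adls.oauth2.refresh.url":
            "https://login.microsoftonline.com/" + directory_id + "/oauth2/token",
    }


def mount_data_lake(dbutils, account_name, mount_point, configs):
    """Mount the lake once; the mount point then stays visible to the whole workspace."""
    dbutils.fs.mount(
        source="adl://" + account_name + ".azuredatalakestore.net/",
        mount_point=mount_point,
        extra_configs=configs,
    )


# In a notebook, where dbutils already exists (names below are assumptions):
# configs = build_adls_mount_configs(client_id, client_secret, directory_id)
# mount_data_lake(dbutils, "mydatalake", "/mnt/datalake", configs)
# dbutils.fs.unmount("/mnt/datalake")  # removes the mount for everyone
```

Because the mount outlives the cluster that created it, the only way to withdraw that access is the explicit `dbutils.fs.unmount` call.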

With that in mind, it is far better to implement the access to Data Lake directly at runtime using the Spark API. The config for this is very similar to the mounting, except the Spark config is set at runtime within the session, so any notebook or job that does not include it will be unable to access the Data Lake. This avoids the issue mentioned above where anyone can access the data just because they can access the workspace. The following commands can be used to set this up at runtime.

    # Get Secrets from Databricks
    ClientId = dbutils.secrets.get(scope = "ScopeX", key = "ClientId")
    ClientSecret = dbutils.secrets.get(scope = "ScopeX", key = "ClientSecret")
    DirectoryId = dbutils.secrets.get(scope = "ScopeX", key = "DirectoryId")

    # Apply variables to spark config
    spark.conf.set("dfs.adls.oauth2.access.token.provider.type", "ClientCredential")
    spark.conf.set("dfs.adls.oauth2.client.id", ClientId)
    spark.conf.set("dfs.adls.oauth2.credential", ClientSecret)
    spark.conf.set("dfs.adls.oauth2.refresh.url", "https://login.microsoftonline.com/" + DirectoryId + "/oauth2/token")

You’ll notice that as part of this I’m retrieving the secrets/GUIDs I need for the connection from somewhere else, namely the Databricks-backed secrets store. This avoids exposing those secrets in plain text in your notebook, which again would not be ideal. Access to the secrets is then based on an ACL (access control list), so I can only connect to Data Lake if I’ve been granted access to the secrets. While it is also possible to connect Databricks up to Azure Key Vault and use this as the secrets store instead, when I tried to configure this I was denied based on permissions, and after some research I was unable to overcome the issue. Key Vault would be the preferable option, but unfortunately there is limited support currently, and the fact the error message contained spelling mistakes suggests to me the functionality is not yet mature.

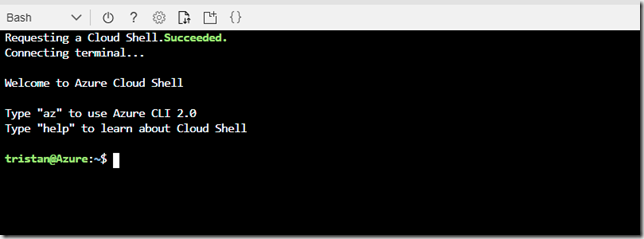

To configure the databricks-backed secrets, the easiest method is to use an Azure Bash console and go in via the Databricks CLI. To access the console – within the Azure portal you’ll notice an icon similar to below as part of the top ribbon.

Clicking this will then prompt you to start either a PowerShell or Bash console – which will look similar to below.

The connection into the Databricks CLI can be set up as per the following commands.

    virtualenv -p /usr/bin/python3.5 databrickscli
    source databrickscli/bin/activate
    pip install databricks-cli
    databricks configure --token

At this point, you’ll need to provide both your Databricks host (something similar to https://northeurope.azuredatabricks.net) and a token. The token will need to be generated through your Databricks workspace, under User Settings / Access Tokens. This essentially lets you into the API without the need for a password. The important thing to mention at this point is that the token is a personal access token. While this doesn’t impact anything in particular with Azure Bash, it’s worth noting that the token is created under your Databricks account, and therefore using this token for something such as a linked service into ADF will process the connection using your account, authenticating as you. Hopefully over time this will mature, and the use of Managed Identities or Service Principals directly connected into the platform will be possible. As you might have guessed, you will need to make sure the account that generates the token is also included in the ACL to read the secret, otherwise at processing time ADF will not be able to read through Databricks into the Lake.
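To make the "authenticating as you" point concrete, here is a small sketch of how a personal access token is presented to the Databricks REST API: it simply travels as a bearer token on each request. The helper name and the placeholder token are hypothetical, and the request is only built here, not sent.

```python
import urllib.request


def build_list_scopes_request(host, token):
    """Build (but do not send) a request that lists secret scopes.

    Whoever holds the token is treated as the user who generated it.
    """
    url = host.rstrip("/") + "/api/2.0/secrets/scopes/list"
    return urllib.request.Request(url, headers={"Authorization": "Bearer " + token})


request = build_list_scopes_request(
    "https://northeurope.azuredatabricks.net",
    "dapi-placeholder-token",  # hypothetical value; never hard-code a real token
)

# Sending it would look like this, assuming a valid host and token:
# with urllib.request.urlopen(request) as response:
#     print(response.read().decode("utf-8"))
```

Nothing in the request identifies ADF or any other caller separately; the token alone decides which account the call runs as.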

The configuration of the secrets is then required and can be done using the following commands. Simply replace [client id], [secret value], [directory id] with the necessary values from your service principal.

    databricks secrets create-scope --scope ScopeX
    databricks secrets put --scope ScopeX --key ClientId --string-value [client id]
    databricks secrets put --scope ScopeX --key ClientSecret --string-value [secret value]
    databricks secrets put --scope ScopeX --key DirectoryId --string-value [directory id]
    databricks secrets put-acl --scope ScopeX --principal admins --permission READ

I then granted access to read these secrets to the admins group. This just keeps things simple, but you can obviously grant it to individual users or other Databricks groups as well. One of my hopes for 2019 is that the platform is integrated better into AAD moving forwards, as currently everyone needs to be named individually. You would then need to manage permissions through the groups, which can only be done via the CLI.
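If you do end up naming several users or groups, the grants can be prepared in a loop. The sketch below builds the JSON bodies that the Secrets REST API endpoint `/api/2.0/secrets/acls/put` expects, one per principal. The helper name and the example user are hypothetical, and actually posting the bodies (with your token) is left out.

```python
import json

# Permission levels accepted for a secret scope ACL.
VALID_PERMISSIONS = {"READ", "WRITE", "MANAGE"}


def build_put_acl_payload(scope, principal, permission="READ"):
    """Return the JSON body granting one principal a permission on a scope."""
    if permission not in VALID_PERMISSIONS:
        raise ValueError("Unknown permission: " + permission)
    return json.dumps(
        {"scope": scope, "principal": principal, "permission": permission}
    )


# One body per user or group that should be able to read the secrets.
principals = ["admins", "someone@example.com"]  # hypothetical principals
payloads = [build_put_acl_payload("ScopeX", p) for p in principals]
```

Validating the permission up front keeps a typo from turning into a failed call halfway through a list of grants.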

Once this is complete, you can interact with your Data Lake and authenticate at runtime. It’s also worth mentioning that this interaction changes the path prefix from dbfs:// to adl://, which may have a knock-on effect if you use certain tools such as dbutils to do things within your code. This is also important to know since with Databricks runtime 5.1 and AD credential pass-through, you will be unable to access anything other than Data Lake file systems. I’ll explain this further in my next blog.
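As a rough illustration of the path change, the helper below builds the full `adl://` URI that replaces a mounted path. It assumes an ADLS Gen1 account; the function name, the account name `mydatalake` and the file path are hypothetical.

```python
def adl_path(account_name, relative_path):
    """Build the adl:// URI for a file or folder in an ADLS Gen1 account."""
    return (
        "adl://" + account_name + ".azuredatalakestore.net/"
        + relative_path.lstrip("/")
    )


sales_path = adl_path("mydatalake", "/raw/sales.csv")

# In a notebook, after the Spark config has been set at runtime:
# df = spark.read.csv(sales_path, header=True)
# dbutils.fs.ls(adl_path("mydatalake", "raw"))
```

Keeping the URI construction in one place makes it easier to find every spot in your code that previously relied on a dbfs:// mount path.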

Conclusion

Hopefully this will prove useful to anyone venturing onto the platform and provide a basis on which to implement a security model. If you wanted to go a step further, you may also want to implement service principal access to Data Lake on a number of levels, both across folders AND read/write. This would add slightly more complexity to the solution but provide an even more secure method, avoiding the scenario where accounts can access the entire Data Lake. In my next blog, I will look at it from a user perspective, which takes on a slightly different implementation.
