HashBytes() in SQL Server 2016

The HashBytes() function is primarily intended for hashing values for use within cryptographic situations, but can prove very valuable for change detection of records. I won’t go into the details of the HashBytes() function, other than to say that basically you pass in a string or varbinary expression and specify an algorithm for hashing the expression, and a varbinary value is returned. Prior to SQL 2016 the input was restricted to 8000 bytes, which should suffice for the majority of Data Warehousing record sizes. In SQL2016 this limit is set to (n)varchar/varbinary(max). The size of the varbinary output is dependent on the strength of the algorithm, and can range from 16 to 64 bytes. The output should always be the same for the input and algorithm, thereby giving a mechanism for checking a large input for changes. There is a chance that two separate inputs can give the same output however, something that is referred to as a hash “collision” or “clash”. The more powerful the hashing algorithm, the lower the chance of a clash between two differing inputs.

MD5 is a 128 bit algorithm, thereby providing 2128 values, or 3.4×1038. So for perfectly randomly distributed data input we would expect the probability of a collision to be 3.4×1038/n, where n is the number of inputs we are hashing. Note that the size of the input does not change this.

Move up to SHA1 and you have 160 bits, giving 2160 or ~ 1.5×1048 possible hash values.

The basic principles when using hashing to check for differences in the record are as follows:

- Concatenate the fields of interest together in string form, with a delimiter

- Hash this resultant string value in order to arrive at a single value that represents the uniqueness of the string

- Compare this hash field against future hashed values to indicate whether the fields of interest have changed over time

Checking is done per record, looking up against the business key(s) so the actual probability is per record, not over the table. This means that the probability of hash clashes is dependent on the number of different inputs that occur per Business Key, not the number of rows of data (assuming of course we have uniqueness across our business keys, as is to be expected). It is the variation in the resultant concatenated field data that we are checking here. So for 3.4×1038 available hash values, we would expect to suffer a collision with a 0.01% probability when we have 3.4×1038/0.0001 = 3.4×1034 different values hashed for the same Business Key. Assuming each change occurred once per second, that’s ~ 1.1×1027 years before we risk a 0.01% chance of two changes resulting in the same hash value and therefore missing the change. So we’re in pretty safe hands with MD5 when comparing against a particular Business Key. The storage size, at 16 bytes (128 bit encryption) won’t bust the storage bank. This is primarily used on dimension records, which tend to be wider than facts, so shouldn’t result in any considerable proportional increase in row sizes for any but the narrowest of tables.

Deprecated Algorithms and Microsoft Connect Issue

You may have noticed in the above BOL article that there is a note regarding deprecating all but SHA_256 and SHA_512 algorithms, as the others are considered cryptographically weak. 128 bit encryption is not something that is encouraged for sensitive data and understandably Microsoft do not want people being exposed. However, we are not using this for encryption, we are applying the uniqueness of the output for a complex input as a solution to succinctly tracking changes in a performant manner. Microsoft have since withdrawn the statement regarding the deprecation of some of the weaker algorithms, so as to allow this use case for HashBytes(). This is mentioned in the connect article below, so we are safe to apply this approach for our change tracking needs without fear of deprecation in future releases.

What’s the catch?

So this all sounds very promising as a way of tracking changes to our Data Warehouse data, for purposes such as extracting deltas, inserts and updates to Type I and II dimensions and so forth. It doesn’t have any show-stopping overhead for the hashing operations for the sizes of data typically encountered and storage isn’t going to be an issue. It is native to T-SQL so we can rerun our hash value generation in the engine where our data resides rather than having to push through SSIS or some other tool to generate this for us. Algorithms are universal and as such will give us the same values wherever used for the same bytes of input. Let’s go back to the basic idea for a minute and consider how we implement this.

Preparing the Field Values for Hashing

The main task we have is in getting the field data of interest into a consistent input string for processing by HashBytes(). This is where things can become rather tricky. Here is a typical example of a small number of fields being concatenated for input into the HashBytes() function, for use in change tracking of a very modest Type I/II hybrid dimension.

select

*

--Type I fields

,cast(hashbytes('MD5',isnull(upper(AttributeA),N'')

+ '||' + isnull(cast(AttributeB as nvarchar),N'')

+ '||' + isnull(upper(AttributeC),N'')

+ '||' + isnull(cast(AttributeD as nvarchar),N''))

as binary(16)) as HashTypeOne

--Type II fields

,cast(hashbytes('MD5',isnull(AttributeE,N'')

+ '||' + isnull(cast(AttributeF as nvarchar),N'')

+ '||' + isnull(AttributeG,N'')

+ '||' + isnull(cast(AttributeH as nvarchar),N''))

as binary(16)) as HashTypeTwo

/*

additional change tracking, lineage/audit fields here...

*/

from

(select

mso2.BusKey

,mso2.AttributeA --a type I attribute

,mso2.AttributeB --a type I attribute

,mso2.AttributeC --a type I attribute

,mso2.AttributeD --a type I attribute

,mso1.AttributeA as AttributeE --a type II attribute

,mso1.AttributeB as AttributeF --a type II attribute

,mso1.AttributeC as AttributeG --a type II attribute

,mso1.AttributeD as AttributeH --a type II attribute

from

SourceSystem.SourceObject2 mso2

inner join SourceSystem.SourceObject1 mso1

on mso2.ForeignKey = mso1.BusKey

) src

Here fields AttributeA, AttributeC, AttributeE and AttributeG are nvarchars, B, D, F and H are ints. For the Type I fields (A-D) we want to exclude case sensitivity, hence the Upper() function calls, whereas for the Type II fields (e-H) we have preserved case. As you can imagine, with more fields of various data types this is field casting and concatenation statement for the HashBytes() input is going to present some challenges to get correct. There are two main issues to overcome here to ensure a consistent result for a set of fields.

String representation of the field data

It is not straight forward to arrive at a consistent representation of a field value in a string format. We need to consider dates for example, ensuring that they are treated universally across the solution. We may have to deal with SQLCLR types such as HierarchyId, Geography etc. that are not simply cast( as nvarchar) conversions. We may need to consider or ignore case sensitivity, based on the requirements of our change tracking. Having such as complex expression crafted correctly every time this is needed is not guaranteed and bugs may be difficult to track down.

Field ordering

If we are concatenating a bunch of strings that result from respective fields, we need to ensure we do it in the same order each time for those fields.

Failure to ensure either of the above will mean inconsistent values resulting from the same field set, which will throw our differencing tracker out of kilter, wheels will fall off and changes could be missed.

Helpers to the Rescue

In order to overcome these development difficulties when cerating the HashBytes() input statement, I created some helper functions and a stored procedure.

util.CastAsNVarChar Scalar Valued Function

This scalar function returns an expression that is the cast of a field as an nvarchar value. This can then be used when concatenating the fields together to ensure that the correct string representation of the field value is used. The data type of the field and the required case sensitivity are supplied together with the field name, and a simple case statement based on the data type supplies the correct cast expression. Note, this function is not for use in casting the actual field values for the data, that would be a performance killer. All this is doing is generating the correct expression for the cast operation that will be used for the input statement for HashBytes(). Native SQL Server data type casting functions will be used for the actual string conversions.

create function [util].[CastAsNVarchar]

(

@statement nvarchar(max)

,@typeName sysname

,@caseSensitive bit

)

returns nvarchar(max)

as

/*

"""Generates the cast statement for converting an input statement of a specified data type to

the nvarchar equivalent.

Args:

@statement: The statement for which the cast to nvarchar is required.

@typeName: The data type name from which the cast to nvarchar will be done

@caseSensitive: When 0 then takes upper() of the field value to avoid case variance."""

Returns:

The nvarchar casting statement.

*/

begin

declare @castStatement nvarchar(max) = N''

set @castStatement =

case

when @typeName in (N'Date',N'DateTime',N'DateTimeOffset',N'DateTime2',N'SmallDateTime',N'Time')

then N'isnull(convert(nvarchar,' + @statement + N', 121), N'''')'

when @typeName in (N'BigInt',N'Int',N'SmallInt',N'TinyInt',N'bit')

then N'isnull(cast(' + @statement + N' as nvarchar), N'''')'

when @typeName in (N'Float',N'Decimal',N'Numeric',N'Real',N'Money',N'SmallMoney')

then N'isnull(convert(nvarchar,' + @statement + N', 2), N'''')'

when @typeName in (N'Binary','VarBinary')

then N'isnull(convert(nvarchar,' + @statement + N', 2), N'''')'

--must specify length to avoid arithmetic overflow error

when @typeName = N'UniqueIdentifier'

then N'isnull(cast(' + @statement + N' as nvarchar(36)), N'''')'

when @typeName in ('geography','geometry','hierarchyid')

then N'isnull(' + @statement + N'.ToString(), N'''')'

when @typeName in (N'varchar',N'nvarchar')

--ignore case for varchar when not case sensitive

then case

when @caseSensitive = 0 then N'isnull(upper(' + @statement + N'), N'''')'

else N'isnull(' + @statement + N', N'''')'

end

else N'isnull(' + @statement + N', N'''')'

end

return @castStatement

end

util.LengthOfCastAsNVarchar Scalar Valued Function

This determines the maximum length in bytes of a datatype when cast to the nvarchar equivalent, e.g. 20 for int (remember these are nvarchar, hence 2 bytes per character). This is used to warn if the resultant concatenation of nvarchar-cast equivalent field values exceeds any specified limits, which will be of particular benefit when dealing with large data types or using the HashBytes() function on SQL Server prior to 2016, where the limit of 8000 characters needs to be considered.

create function util.LengthOfCastAsNVarchar

(

@typeName sysname

,@maxLength smallint

)

returns smallint

as

/*

"""Calculates the resultant maximum length in bytes of a data type when cast to an nvarchar

value.

Args:

@typeName: The name of the data type from which the cast to nvarchar is done.

@maxLength: The maximum length of a variable length data type

Returns:

The maximum length of the resultant cast to nvarchar."""

*/

begin

declare @castLength smallint

set @castLength =

case @typeName when N'Date' then 20

when N'DateTime' then 46

when N'DateTimeOffset' then 60

when N'DateTime2' then 54

when N'SmallDateTime' then 46

when N'Time' then 32

when N'BigInt' then 38

--decimal point, hence +1

when N'Decimal' then 2 * @maxLength + 1

when N'Numeric' then 2 * @maxLength + 1

when N'Int' then 20

when N'SmallInt' then 10

when N'TinyInt' then 6

when N'Bit' then 2

--decimal point, hence +1

when N'Float' then 2 * @maxLength + 1

--decimal point, hence +1

when N'Real' then 2 * @maxLength + 1

--decimal point, hence +1

when N'Money' then 2 * @maxLength + 1

--decimal point, hence +1

when N'SmallMoney' then 2 * @maxLength + 1

when N'Binary' then 4 * @maxlength

when N'VarBinary' then 4 * @maxlength

when N'UniqueIdentifier' then 72

when N'HierarchyId' then 8000

when 'Geography' then -1

when 'Geometry' then -1

when 'Char' then 2 * @maxLength

when 'Varchar' then 2 * @maxLength

when 'NChar' then 2 * @maxLength

when 'NVarchar' then 2 * @maxLength

else @maxLength

end

return @castLength

end

util.FormatStatementFieldsAsConcatNVarchar Stored Procedure

This stored procedure takes a SQL statement as a string and outputs a SQL statement that expresses all the fields in the input statement as a concatenation of their nvarchar-cast values, to create a string for use in the input to HashBytes(). It first determines the data type for each of the fields in the input statement and then calls util.CastAsNVarChar for each field. Fields are concatenated in alphabetical order for consistency, using the “||” characters as a delimiter between fields, which is a string that will rarely feature in the source data. The maximum length in bytes of the field when cast to nvarchar is also determined and a running total is performed in order to determine whether the length of the resultant nvarchar versions of the concatenated fields will exceed a specified maximum length. If this maximum length exceeds a specified value (such as when checking against an 8000 byte limit for pre SQL2016 HashBytes()) the field that exceeded this limit is also returned, to allow the user to break the statement down into smaller statements that can fit into the bytes limit (note however that as the procedure sorts fields alphabetically before concatenating, that the field returned will also be in this order, so you will need to rewrite and divide up the statements in alphabetically sorted field order so that the length exceeding field is correctly batched up into the next input statement). The resultant concatenation statement for the the nvarchar-cast fields is output together with information on the maximum length of the resultant nvarchar versions of the concatenated fields.

Determination of Field Data Types

In SQL 2016 there is a new table valued function that will allow the determination of the field types in the first result set of a statement. This function, sys.dm_exec_describe_first_result_set, takes a statement and returns a table including such details as column data type, maxlength, precision, nullability etc. However, for our utility procedure this does not quite fit the bill, as we would need to use three-part names in the statement passed in, which would ordinarily require a rewrite of the statement we want to generate the HashBytes() value for (unless for some reason it was written already using three-part names). Ideally we want to be able to direct a statement at any database and, if possible, server, and return the field data type information for the statement, without any hacking around of the original statement.

In order to determine the field data types for the input statement, we use an insert into .. exec approach to create a global temporary table from which the field data types are extracted. A global temporary table is required as we need to use OpenQuery() to achieve this, which will run in a separate session, and as such a session scoped temporary table (#MyTable) will not be visible to the calling procedure. As this is a development time call, we can assume that global table name clashes will not be an issue on our dev instance. Ae can also assume that as we are executing this on a development instance concerns over using OpenQuery() and enabling ‘Ad Hoc Distributed Queries’ are not an issue. The following enables the aforementioned instance level configuration.

-- Enable Ad Hoc Distributed Queries exec sp_configure 'Ad Hoc Distributed Queries' ,1 reconfigure with override go

The code for the concatenation procedure is as below:

create procedure util.FormatStatementFieldsAsConcatNVarchar

@statement nvarchar(max)

,@caseSensitive bit = 0

,@nvarcharBytesLimit smallint = 8000

,@serverName sysname = null

,@databaseName sysname = null

as

/*

"""Formats the fields of a statement as a concatenation of nvarchar-casted equivalents.

Fields are concatenated in alphabetical order to ensure consistency for the same input.

Tracks the length of the resultant nvarchar converted equivalent statement, warning when the length

has exceeded @@nvarcharBytesLimit. Inserts a delimeter between each cast field.

Args:

@statement: The statement whose fields will be cast to nvarchar equivalent values.

@caseSensitive: When 0 then takes upper() of the field value to avoid case variance.

@@nvarcharBytesLimit: when < 0 then treats the limit as nvarchar(max), else the size of the limit in bytes, for the resultant output nvarchar statement, beyond which a warning is returned. @serverName: The server against which the statement is to be executed. @databaseName: The database against which the statement is to be executed. Returns: The concatenated nvarchar-cast equivalents of each of the fields in @statement, separated by a delimeter.""" */ begin declare @concatFieldsAsNVarchar nvarchar(max) = '' declare @delimConcat nchar(9) = ' +''||''+' --the length in bytes of the actual delimiting nvarchar (hence * 2) characters within @delimConcat, i.e. '||' declare @delimLen int = 2 * 2 --global temp table required when using openrowset. if object_id('tempdb..##StatementMetadata') is not null drop table ##statementMetadata -- set defaults as these cannot be defaulted within the param defs set @serverName = isnull(@serverName,@@servername) set @databaseName = isnull(@databaseName,db_name()) set @statement = N'select top 0 * into ##StatementMetadata from openrowset(''SQLNCLI'', ''server=' + @serverName + ';database=' + @databaseName + ';trusted_connection=yes'', N''set fmtonly off; set nocount on; ' + @statement + ''')' exec sp_executesql @statement declare FieldMetadata cursor local static read_only forward_only for select c.Name as ColumnName ,ty.Name as TypeName ,c.Max_Length from tempdb.sys.tables t inner join tempdb.sys.columns c on t.object_id = c.object_id inner join tempdb.sys.types ty on c.user_type_id = ty.user_type_id where t.object_id = object_id('tempdb..##StatementMetadata') --must order by columns names in order to concatenate consistently order by ColumnName asc declare @colName sysname = '' declare @typeName sysname = '' declare @maxlength smallint = 0 declare @nvarcharMaxreached bit declare @concatNVarcharLength smallint = 0 declare @colNameLimitBreaker sysname open FieldMetadata fetch next from FieldMetadata into @colName, @typeName, @maxlength while @@fetch_status = 0 begin --append a delimeter string to the field casting statement set @concatFieldsAsNVarchar += (util.CastAsNVarchar(@colName,@typeName,@caseSensitive) + @delimConcat) --the running total of the length of the resultant casting expression, in bytes set @concatNVarcharLength += util.LengthOfCastAsNVarchar(@typeName, @maxLength) + @delimLen --negative values for @nvarcharLimit signify nvarchar(max) limit if @nvarcharBytesLimit >= 0

begin

if (@maxLength = -1 or @concatNVarcharLength > @nvarcharBytesLimit) and @colNameLimitBreaker is null

set @colNameLimitBreaker = @colName

end

else

--simply track when we have exceeded 1 (max) field

if @maxLength = -1

begin

if @nvarcharMaxreached = 1

if @colNameLimitBreaker is null

set @colNameLimitBreaker = @colName

else

set @nvarcharMaxreached = 1

end

fetch next from FieldMetadata

into @colName, @typeName, @maxlength

end

--remove final @delimConcat

set @concatFieldsAsNVarchar = left(@concatFieldsAsNVarchar,case

when len(@delimConcat) > len(@concatFieldsAsNVarchar)

then 0

else len(@concatFieldsAsNVarchar) - len(@delimConcat)

end)

close FieldMetadata

deallocate FieldMetadata

declare @concatLengthMsg nvarchar(200)

if @colNameLimitBreaker is not null

set @concatLengthMsg = N'***WARNING*** concatenated field limit of ' + cast(@nvarcharBytesLimit as nvarchar) + ' exceeded by column ''' + @colNameLimitBreaker + ''''

else

set @concatLengthMsg = N'Concatenated field length(bytes) = ' + cast(@concatNVarcharLength as nvarchar)

select

@concatFieldsAsNVarchar

union all

select @concatLengthMsg

if object_id('tempdb..##StatementMetadata') is not null

drop table ##StatementMetadata

end

Example Usage

Okay, so now we’ve slogged through all the necessaries, what does this give us? Here are some examples, based on everyone’s favourite bike retailer, yes, it’s…. AdventureWorks2014 (again).

Let’s say for this example that we need to track changes to all fields in the Sales.vSalesPerson view against the Business Key, BusinessEntityID, perhaps because we are considering a Type II dimension based on this data. Excluding the BusinessEntityID, we have the following call to our statement generation procedure:

exec util.FormatStatementFieldsAsConcatNVarchar

@statement =

N'select

,Title

,FirstName

,MiddleName

,LastName

,Suffix

,JobTitle

,PhoneNumber

,PhoneNumberType

,EmailAddress

,EmailPromotion

,AddressLine1

,AddressLine2

,City

,StateProvinceName

,PostalCode

,CountryRegionName

,TerritoryName

,TerritoryGroup

,SalesQuota

,SalesYTD

,SalesLastYear

from

Sales.vSalesPerson'

,@caseSensitive = 0

,@nvarcharBytesLimit = -1

,@serverName = null

,@databaseName = 'AdventureWorks2014'

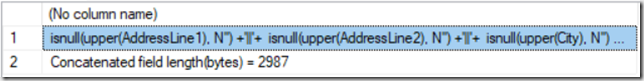

That’s twenty one fields, with 3 different data types, admittedly not the most complex, but a good illustration perhaps. Note that we are passing @nvarcharBytesLimit = -1, thereby treating the length checks on the resultant nvarchar concatenated output as vs nvarchar(max). Here’s the procedure output:

The first row is the input statement we are interested in for use with the HashBytes() function. The second row simply informs us as to the maximum bytes that the concatenated nvarchars and delimiters may consume for each row. If we take the output on row 1 above and turn the field listing into a select statement from the source object, we have:

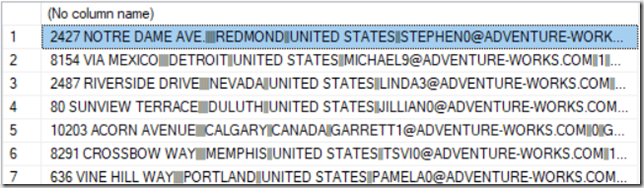

select isnull(upper(AddressLine1),N'') + '||' + isnull(upper(AddressLine2),N'') + '||' + isnull(upper(City),N'') + '||' + isnull(upper(CountryRegionName),N'') + '||' + isnull(upper(EmailAddress),N'') + '||' + isnull(cast(EmailPromotion as nvarchar),N'') + '||' + isnull(upper(FirstName),N'') + '||' + isnull(upper(JobTitle),N'') + '||' + isnull(upper(LastName),N'') + '||' + isnull(upper(MiddleName),N'') + '||' + isnull(upper(PhoneNumber),N'') + '||' + isnull(upper(PhoneNumberType),N'') + '||' + isnull(upper(PostalCode),N'') + '||' + isnull(convert(nvarchar,SalesLastYear,2),N'') + '||' + isnull(convert(nvarchar,SalesQuota,2),N'') + '||' + isnull(convert(nvarchar,SalesYTD,2),N'') + '||' + isnull(upper(StateProvinceName),N'') + '||' + isnull(upper(Suffix),N'') + '||' + isnull(upper(TerritoryGroup),N'') + '||' + isnull(upper(TerritoryName),N'') + '||' + isnull(upper(Title),N'') from Sales.vSalesPerson

The resultant concatenated field values that will be the actual input to the HashBytes() can be seen below for a selection of the records from AdventureWorks014.Sales.vSalesPerson.

As you can see, although not particularly pretty, we do now have the complete set of field values for each record from the initial select statement, in a single field for generating our MD5 hash value over. This allows a single resultant value to be used for determining any change in any field of interest. So, pasting the output on line 1 from util.FormatStatementFieldsAsConcatNVarchar above into the input for HashBytes(), together with the required actual field values for populating our dimension, we arrive at:

select

BusinessEntityID

,cast(hashbytes('md5'

,isnull(upper(AddressLine1),N'')

+ '||' + isnull(upper(AddressLine2),N'')

+ '||' + isnull(upper(City),N'')

+ '||' + isnull(upper(CountryRegionName),N'')

+ '||' + isnull(upper(EmailAddress),N'')

+ '||' + isnull(cast(EmailPromotion as nvarchar),N'')

+ '||' + isnull(upper(FirstName),N'')

+ '||' + isnull(upper(JobTitle),N'')

+ '||' + isnull(upper(LastName),N'')

+ '||' + isnull(upper(MiddleName),N'')

+ '||' + isnull(upper(PhoneNumber),N'')

+ '||' + isnull(upper(PhoneNumberType),N'')

+ '||' + isnull(upper(PostalCode),N'')

+ '||' + isnull(convert(nvarchar,SalesLastYear,2),N'')

+ '||' + isnull(convert(nvarchar,SalesQuota,2),N'')

+ '||' + isnull(convert(nvarchar,SalesYTD,2),N'')

+ '||' + isnull(upper(StateProvinceName),N'')

+ '||' + isnull(upper(Suffix),N'')

+ '||' + isnull(upper(TerritoryGroup),N'')

+ '||' + isnull(upper(TerritoryName),N'')

+ '||' + isnull(upper(Title),N'')) as binary(8)) as Type2Hash

,Title

,FirstName

,MiddleName

,LastName

,Suffix

,JobTitle

,PhoneNumber

,PhoneNumberType

,EmailAddress

,EmailPromotion

,AddressLine1

,AddressLine2

,City

,StateProvinceName

,PostalCode

,CountryRegionName

,TerritoryName

,TerritoryGroup

,SalesQuota

,SalesYTD

,SalesLastYear

from

Sales.vSalesPerson sp

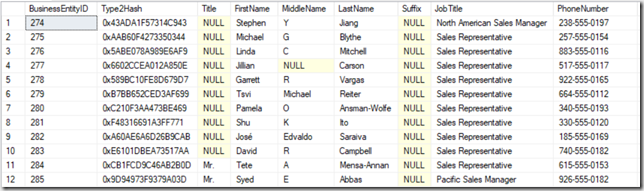

Here’s the output from the above statement (additional columns omitted for brevity):

So there you have it. An MD5 hash of the fields of interest for change tracking, which can then be used in comparisons for checking whether any of our twenty one fields have undergone a change. Simply store this alongside the Business Key and you can determine when your data needs inserts/updates etc.

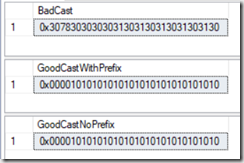

Querying for Specific Binary(16) Hash Values

When querying individual rows for specific binary values, such as those that result from a HashBytes() output, you will need to convert the value you are looking for into a binary(16) value. Trying to use a simple cast(‘0x……’ as binary(16)) will give you the wrong resultant binary value. You will need to use convert(binary(16), ‘0x……’, <style>), where the last parameter passed to convert, being the style value, is used to determine whether there is a leading ‘0x’, (that is used for hexadecimal format) or not in the input string. Use 1 when there is the ‘0x’ prefix, 2 when there is no ‘0x’ prefix. You can see the results of the three attempts to cast the string representation of the binary value below

--incorrect casting operation

select cast('0x00001010101010101010101010101010' as binary(16)) as BadCast

--correct casting operation using '0x' hex format prefix

select convert(binary(16),'0x00001010101010101010101010101010',1) as GoodCastWithPrefix

--correct casting operation using no hex format prefix

select convert(binary(16),'00001010101010101010101010101010',2) as GoodCastNoPrefix

Pre SQL 2016 Implementations for Large Input

Prior to SQL Server 2016, HashBytes() input is limited to 8000 bytes. This makes hashing the full input a matter of breaking the input down into two or more field sets, each of which does not exceed 8000 bytes and then create multiple hashed value fields. You then use these multiple fields for change detection comparison rather than a single field. Of course if you find that you are needing a number of these hashed value fields you may want to rethink your need to change detect over such a wide set of data. In data warehousing the fields of interest tend not to include large string values of many hundreds of characters and if they do you may want to rethink the need for storing these in a dimensional model. These should probably reside elsewhere such as in document database stores, or in a separate table using alternate change detection mechanisms such as source system audit fields, edited date, CDC, Change Tracking etc..

Conclusion

The HashBytes() function provides a powerful way of generating a single unique value from a complex input but getting it consistent can be a challenge. Hopefully the above code will help you avoid making a hash from your hashing, opening up possible development applications for this approach to efficient multi-field change detection.

Introduction to Data Wrangler in Microsoft Fabric

What is Data Wrangler? A key selling point of Microsoft Fabric is the Data Science

Jul

Autogen Power BI Model in Tabular Editor

In the realm of business intelligence, Power BI has emerged as a powerful tool for

Jul

Microsoft Healthcare Accelerator for Fabric

Microsoft released the Healthcare Data Solutions in Microsoft Fabric in Q1 2024. It was introduced

Jul

Unlock the Power of Colour: Make Your Power BI Reports Pop

Colour is a powerful visual tool that can enhance the appeal and readability of your

Jul

Python vs. PySpark: Navigating Data Analytics in Databricks – Part 2

Part 2: Exploring Advanced Functionalities in Databricks Welcome back to our Databricks journey! In this

May

GPT-4 with Vision vs Custom Vision in Anomaly Detection

Businesses today are generating data at an unprecedented rate. Automated processing of data is essential

May

Exploring DALL·E Capabilities

What is DALL·E? DALL·E is text-to-image generation system developed by OpenAI using deep learning methodologies.

May

Using Copilot Studio to Develop a HR Policy Bot

The next addition to Microsoft’s generative AI and large language model tools is Microsoft Copilot

Apr