Azure AI Content Safety is a solution designed to identify harmful content, whether generated by users or AI, within applications and services. It offers text and image APIs for detecting objectionable material. It also includes an interactive Content Safety Studio, where users can explore sample code and detect harmful content across different formats. Content filtering software like this can help applications comply with regulations and maintain a safe environment for users.

This blog post covers the capabilities of Azure AI Content Safety Studio. It explores how Content Safety features help users manage potentially harmful content, and why that matters. It also discusses how businesses can use Content Safety tools to build safe and compliant online environments.

Content Safety Studio

Azure AI Content Safety Studio is an online tool for handling potentially sensitive, risky, or undesirable content with advanced content moderation ML models. It offers templates and customised workflows, so users can tailor and build their own content moderation system. Users can upload their own content or use the provided sample content for testing.

Beyond its pre-built AI models, the Studio incorporates Microsoft's built-in term blocklists, which flag offensive language and keep pace with emerging trends. Users can also upload their own blocklists to improve detection of harmful content specific to their needs.

The Studio supports setting up a moderation workflow, so moderation effectiveness can be continuously monitored and improved. It serves content moderation needs across sectors such as gaming, media, education, e-commerce, and beyond. Enterprises can connect their services to the Studio to moderate user-generated and AI-generated content in real time. The Studio and its backend provide these features, so customers do not have to develop their own models.

Users can quickly validate their data and monitor key performance indicators (KPIs). These include technical metrics (latency, accuracy, recall) and business metrics (block rate, block volume, category proportions, language proportions, etc.). Because operation and configuration are straightforward, customers can quickly test different setups and find the best fit. This avoids custom model experimentation and manual moderation.
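The sketch below makes the business KPIs above concrete. It computes block volume, block rate, and category proportions from a list of moderation decisions. The `Decision` record and the helper names are hypothetical. The Studio computes these metrics for you, and its exact definitions may differ. For example, here every category that contributed to a block counts towards the proportions.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    """Hypothetical record of one moderation call."""
    blocked: bool
    categories: tuple[str, ...] = ()  # categories that caused the block, if any


def block_volume(decisions: list[Decision]) -> int:
    """Number of submissions that were blocked."""
    return sum(d.blocked for d in decisions)


def block_rate(decisions: list[Decision]) -> float:
    """Fraction of submissions that were blocked."""
    return block_volume(decisions) / len(decisions) if decisions else 0.0


def category_proportions(decisions: list[Decision]) -> dict[str, float]:
    """Share of each category among all category hits on blocked submissions."""
    counts = Counter(c for d in decisions if d.blocked for c in d.categories)
    total = sum(counts.values())
    return {cat: n / total for cat, n in counts.items()} if total else {}


decisions = [
    Decision(blocked=False),
    Decision(blocked=True, categories=("Hate",)),
    Decision(blocked=True, categories=("Violence", "Hate")),
    Decision(blocked=False),
]
print(block_volume(decisions))          # 2
print(block_rate(decisions))            # 0.5
print(category_proportions(decisions))  # Hate about 0.67, Violence about 0.33
```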

Content Safety Studio features

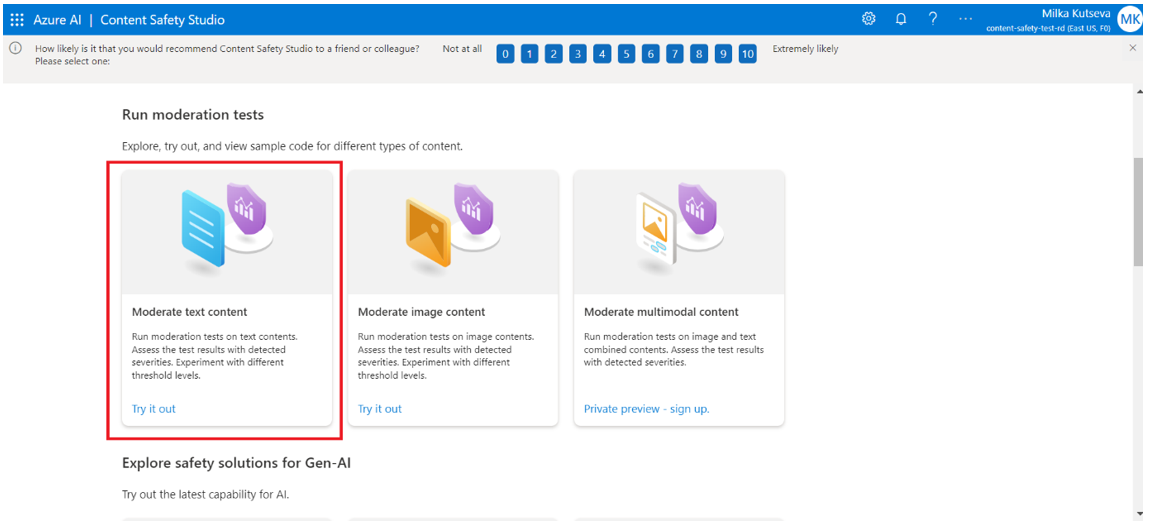

Within the Content Safety Studio, users have access to the following features provided by the Azure AI Content Safety service:

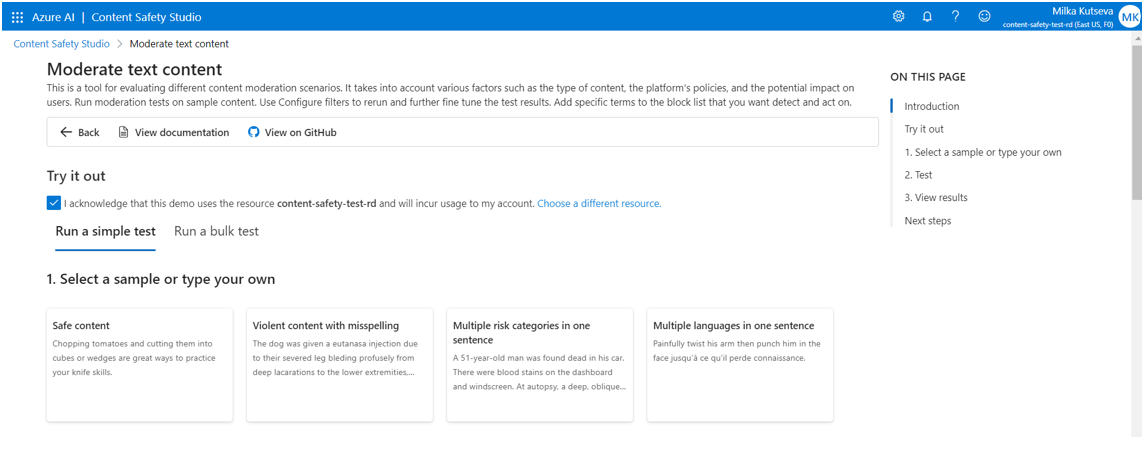

- Moderate Text Content: Using the text moderation tool in the Content Safety Studio, users can easily analyse text content, whether it’s a single sentence or a large dataset. The tool offers a simple interface within the portal for reviewing test results. Users can adjust sensitivity levels to customise content filters and manage blocklists, ensuring precise moderation tailored to their needs. Additionally, users can export the code for seamless integration into their applications, optimising workflow efficiency.

First step: You can try it out by running the sample tests from here:

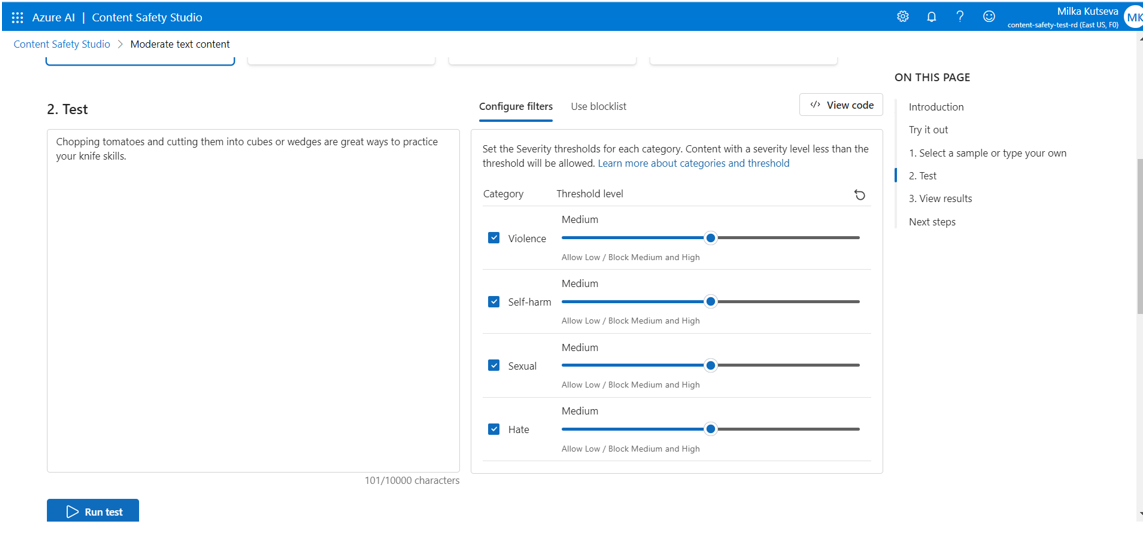

Second step: Set the severity threshold for each category and run the test. Content with a severity level below the threshold is allowed.

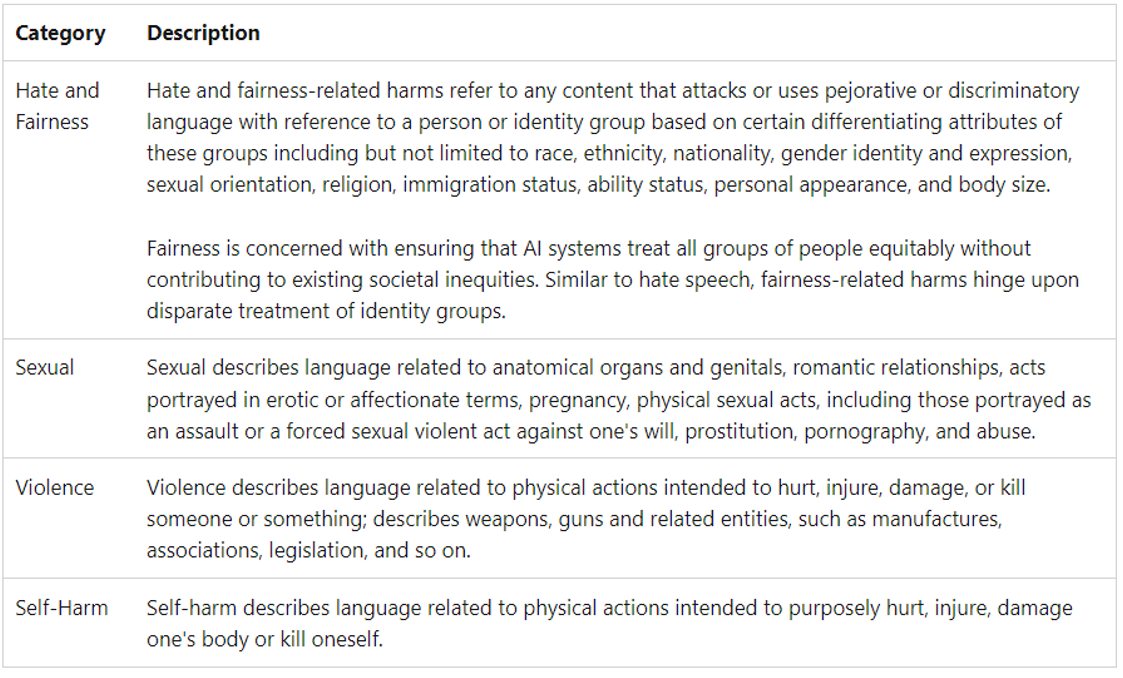

Content Safety identifies four categories of objectionable content: hate, sexual, violence, and self-harm.

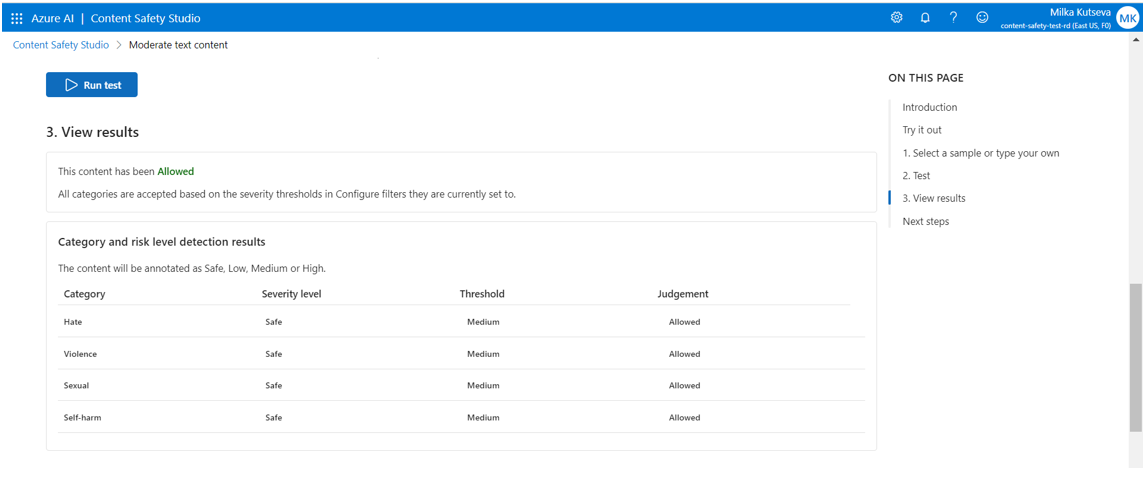

Severity levels: For each identified harm category, the service assigns a severity level rating, which reflects the potential severity of consequences associated with displaying the flagged content.

Threshold: Content that falls below the established threshold severity level will be permitted. Severity levels assigned to content are categorised as Low, Medium, or High. “Low” indicates the presence of mildly harmful content, while “High” denotes significantly dangerous content.
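The allow/block rule described above can be sketched as follows. This is a minimal sketch, and it assumes that the Studio's Low, Medium, and High thresholds correspond to severities 2, 4, and 6 on the trimmed scale. That mapping is our assumption. The function name `is_allowed` is illustrative. The category keys follow the names used by the service's API.

```python
# Assumed mapping from the Studio's threshold labels to trimmed-scale severities.
THRESHOLD_LEVELS = {"Low": 2, "Medium": 4, "High": 6}


def is_allowed(severities: dict[str, int], thresholds: dict[str, str]) -> bool:
    """Allow content only if every category's severity is below that category's threshold."""
    return all(
        severity < THRESHOLD_LEVELS[thresholds[category]]
        for category, severity in severities.items()
    )


severities = {"Hate": 2, "SelfHarm": 0, "Sexual": 0, "Violence": 4}
print(is_allowed(severities, {"Hate": "Medium", "SelfHarm": "Low", "Sexual": "Low", "Violence": "High"}))    # True
print(is_allowed(severities, {"Hate": "Medium", "SelfHarm": "Low", "Sexual": "Low", "Violence": "Medium"}))  # False
```

In the second call, Violence is flagged at severity 4. That meets its Medium threshold, so the content is blocked.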

Text: The current text model supports the full severity scale from 0 to 7, and the classifier can return any level on that scale. On request, it returns severities on a trimmed scale of 0, 2, 4, and 6, merging each pair of adjacent levels into one:

- [0,1] -> 0

- [2,3] -> 2

- [4,5] -> 4

- [6,7] -> 6
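The trimming rule listed above amounts to rounding a 0 to 7 severity down to the nearest even number. The small sketch below shows this. The helper name `trim_severity` is ours and is not part of the service.

```python
def trim_severity(severity: int) -> int:
    """Collapse a 0-7 severity onto the four-level scale 0, 2, 4, 6."""
    if not 0 <= severity <= 7:
        raise ValueError(f"severity must be between 0 and 7, got {severity}")
    # Round down to the nearest even number: 0-1 -> 0, 2-3 -> 2, 4-5 -> 4, 6-7 -> 6.
    return severity - severity % 2


print([trim_severity(s) for s in range(8)])  # [0, 0, 2, 2, 4, 4, 6, 6]
```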

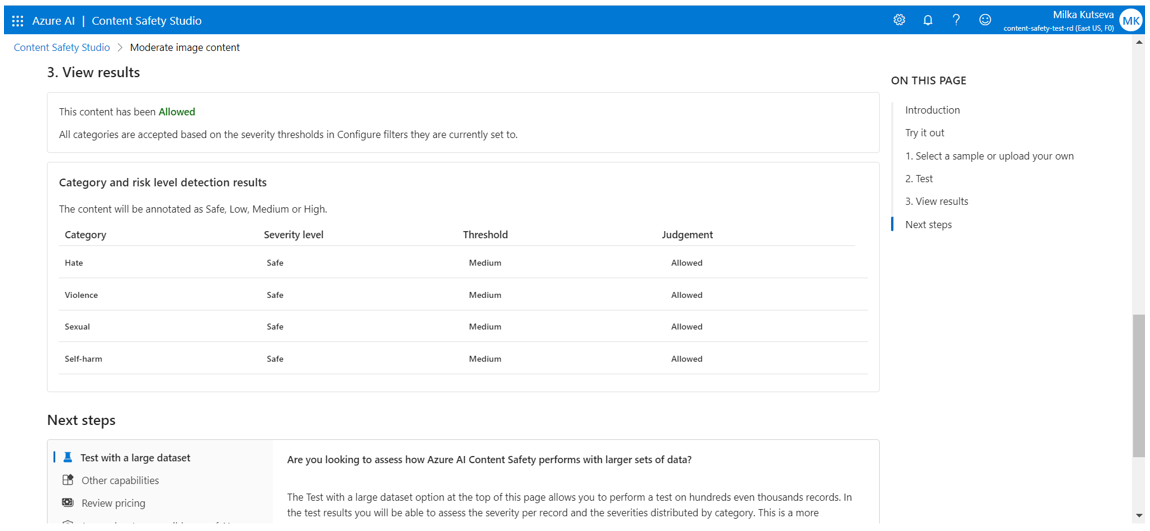

Third step: Viewing the results.

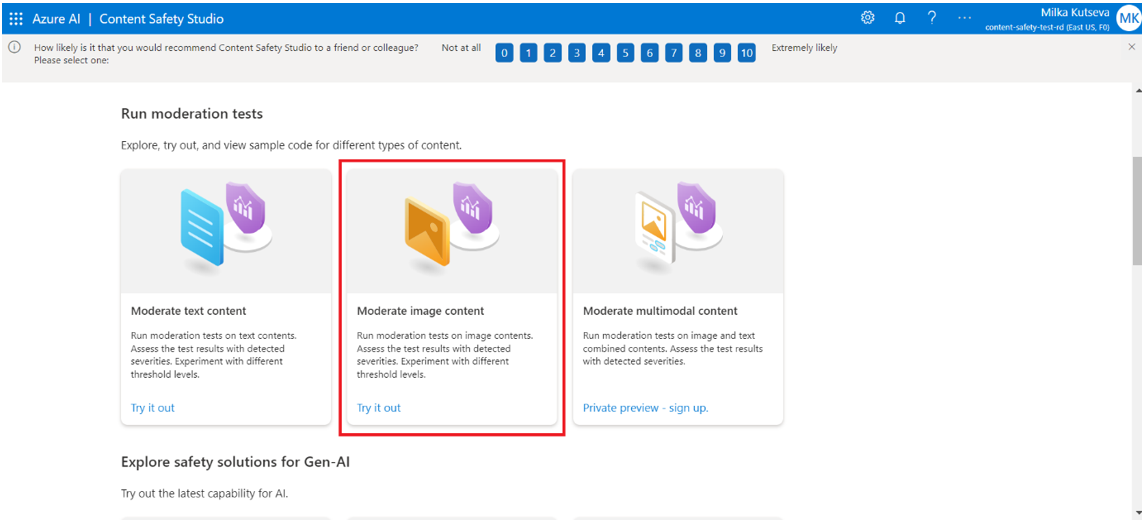

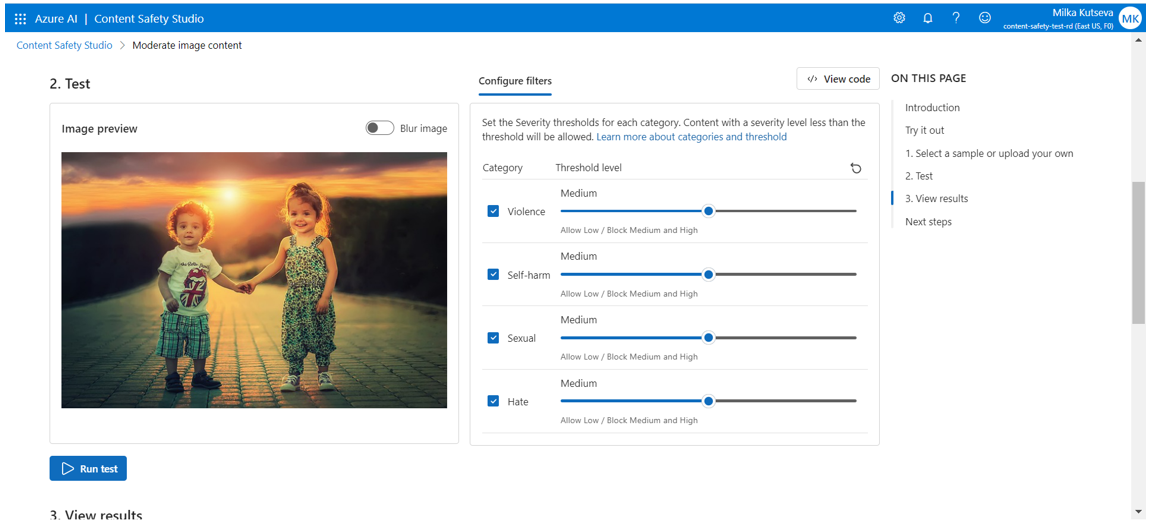

- Moderate Image Content: Using the image moderation tool, users can easily assess images to ensure they meet their content standards. The user-friendly interface allows direct evaluation of test results within the portal, and users can experiment with different sensitivity levels to adjust content filters. Once settings are customised, users can seamlessly export the code for implementation into their applications.

The steps are the same as those for processing text.

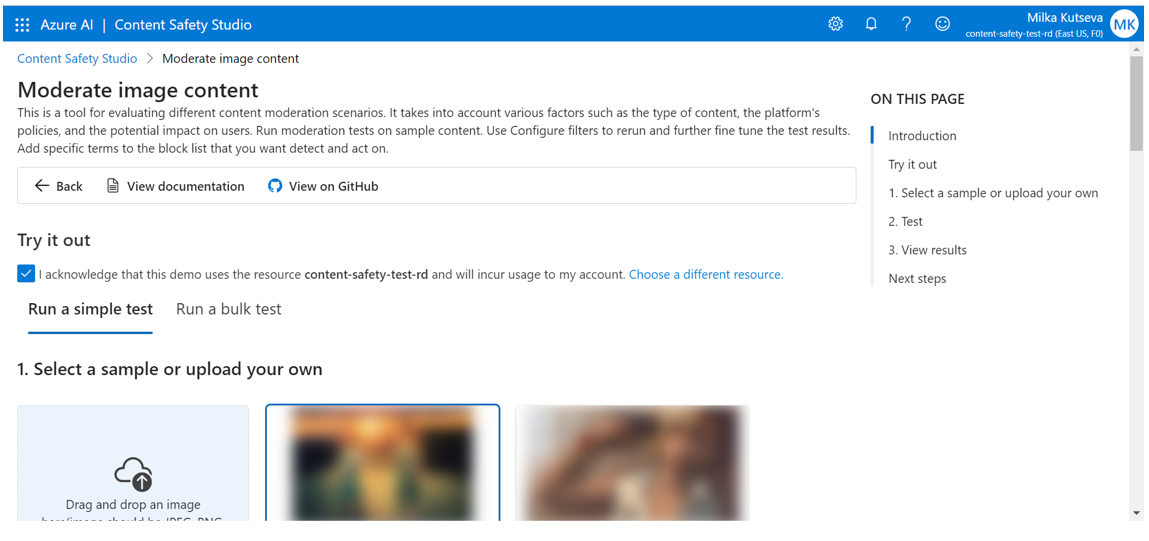

First step: You can try it out by choosing the sample images from here or by uploading your own:

Second step: When you select the image to test, you can choose whether it is shown or blurred (the "Blur image" button). As with text, set the severity threshold for each category and run the test. Content with a severity level below the threshold is allowed.

Severity levels:

Image: The current image model uses a trimmed version of the full 0 to 7 severity scale. It returns only severity levels 0, 2, 4, and 6, merging each pair of adjacent levels into a single rating:

- [0,1] -> 0

- [2,3] -> 2

- [4,5] -> 4

- [6,7] -> 6

Third step: Viewing the results.

Input requirements

Text submissions have a default maximum length of 10,000 characters. For longer blocks of text, users can split the input text into multiple related submissions, for example, by punctuation or spacing.
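The splitting advice above can be sketched in a few lines. This sketch prefers to cut after a sentence-ending punctuation mark, falls back to the last space, and makes a hard cut only when no boundary exists. The function name `split_text` and the boundary rules are our own choices, not part of the service. Each returned chunk would then be sent as a separate submission.

```python
MAX_CHARS = 10_000  # default per-request text limit quoted above


def split_text(text: str, max_chars: int = MAX_CHARS) -> list[str]:
    """Split text into chunks of at most max_chars, preferring sentence ends, then spaces."""
    chunks = []
    while len(text) > max_chars:
        window = text[:max_chars]
        # Last sentence-ending punctuation followed by a space, if any.
        cut = max(window.rfind(". "), window.rfind("! "), window.rfind("? "))
        if cut != -1:
            cut += 1  # keep the punctuation mark with the preceding chunk
        else:
            cut = window.rfind(" ")  # fall back to the last space
        if cut <= 0:
            cut = max_chars  # no usable boundary: hard split
        chunk = text[:cut].strip()
        if chunk:
            chunks.append(chunk)
        text = text[cut:]
    if text.strip():
        chunks.append(text.strip())
    return chunks


print(split_text("One. Two three. Four", max_chars=10))  # ['One.', 'Two', 'three.', 'Four']
```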

Image submissions must adhere to a maximum size of 4 MB and dimensions ranging from 50 x 50 pixels to 2,048 x 2,048 pixels. Accepted image formats include JPEG, PNG, GIF, BMP, TIFF, or WEBP.
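Before uploading, an application can check an image against the requirements above on the client side. The sketch below takes the byte size, pixel dimensions, and format as inputs. Reading them from an actual file would need an imaging library such as Pillow, which is left out here. It assumes that "4 MB" means 4 MiB and accepts "jpg" and "tif" as file-extension aliases. Both are our assumptions, and the name `image_problems` is illustrative.

```python
MAX_BYTES = 4 * 1024 * 1024  # assumes "4 MB" means 4 MiB; adjust if the service documents otherwise
MIN_SIDE, MAX_SIDE = 50, 2048  # pixel limits quoted above
# Accepted formats from the list above, plus common file-extension aliases.
ALLOWED_FORMATS = {"jpeg", "jpg", "png", "gif", "bmp", "tiff", "tif", "webp"}


def image_problems(size_bytes: int, width: int, height: int, fmt: str) -> list[str]:
    """Return reasons an image would fail the input requirements (empty list means OK)."""
    problems = []
    if size_bytes > MAX_BYTES:
        problems.append(f"file is {size_bytes} bytes, limit is {MAX_BYTES}")
    if not (MIN_SIDE <= width <= MAX_SIDE and MIN_SIDE <= height <= MAX_SIDE):
        problems.append(f"{width}x{height} px is outside {MIN_SIDE}x{MIN_SIDE} to {MAX_SIDE}x{MAX_SIDE}")
    if fmt.lower().lstrip(".") not in ALLOWED_FORMATS:
        problems.append(f"format {fmt!r} is not accepted")
    return problems


print(image_problems(1_000_000, 1024, 768, "PNG"))  # []
print(image_problems(5_000_000, 40, 3000, ".svg"))  # three problems: size, dimensions, format
```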

How to use it via different platforms?

In addition to Content Safety Studio, texts and images can be tested through the REST API or the client SDKs, for example for basic text moderation tasks. These give programmatic access to the same Azure AI Content Safety models for identifying objectionable content. Users can choose from several platforms: REST, C#, Python, Java, and JavaScript.
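To illustrate the REST option, the sketch below uses Python's standard library to build a text analysis request without sending it. We believe the following reflect the generally available REST API, but please check them against the current API reference before relying on them:

- the path `/contentsafety/text:analyze`
- the `api-version=2023-10-01` query parameter
- the `Ocp-Apim-Subscription-Key` header
- the body fields `text`, `categories`, and `outputType`

The resource endpoint and key are placeholders, and the function name is hypothetical.

```python
import json
import urllib.request


def build_text_analyze_request(endpoint: str, key: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking the service to analyse `text`."""
    url = f"{endpoint.rstrip('/')}/contentsafety/text:analyze?api-version=2023-10-01"
    body = {
        "text": text,
        "categories": ["Hate", "SelfHarm", "Sexual", "Violence"],
        "outputType": "FourSeverityLevels",  # trimmed 0, 2, 4, 6 scale
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Ocp-Apim-Subscription-Key": key,  # resource key from the Azure portal
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Placeholder endpoint and key: replace with your own resource's values.
req = build_text_analyze_request("https://my-resource.cognitiveservices.azure.com", "<your-key>", "Hello world")
print(req.get_method(), req.full_url)
```

If you send it with `urllib.request.urlopen(req)`, the response should be a JSON document with a severity for each requested category. The official Python SDK (`azure-ai-contentsafety`) wraps the same call if you prefer not to build requests by hand.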

Summary

Azure AI Content Safety Studio offers a comprehensive solution for managing potentially harmful content, with tools for both text and image moderation. Its user-friendly interface and customisable workflows let businesses implement content moderation strategies efficiently across various industries. Whether users rely on the pre-built AI models in the Studio or integrate through the REST API and client SDKs, Azure AI Content Safety helps them maintain safe and compliant online platforms.
