Design and build your ideal data architecture

Applying best practices, and getting the most from your people, technology and budget.

What is data architecture?

Should you be cloud-based, on-premises, or a hybrid? Is a big data architecture the best choice for your workloads? How should you handle analysis? When it comes to data architecture, there are no universal answers. Our expert data architecture consultants will explore your needs, and devise and build whatever’s right for your organisation.

- Develop a data architecture that meets your current and future needs

- Designed and constructed according to best practices

- Compliant with any relevant data regulations

Get in touch to learn more

Microsoft Fabric

This integrated, all-in-one SaaS solution centralises data analytics and data engineering tools in one location to provide a simplified analytics experience. It comprises streamlined software that allows organisations to process data from start to finish without leaving the platform.

Learn more about Fabric

At Adatis, we are skilled and knowledgeable about this new solution and ready to support your organisation in embedding Fabric into its data architecture framework. Whether you are at the beginning of your data modernisation journey or already have a well-developed architecture in place, Adatis can help your organisation become Microsoft Fabric ready.

To help you understand how you can benefit from Microsoft Fabric, we are offering a 1-hour briefing call, as well as a 5-day Proof of Concept, to discuss the capabilities and relevant use cases that the new solution can deliver.

Get started with Fabric

Architecture for big data

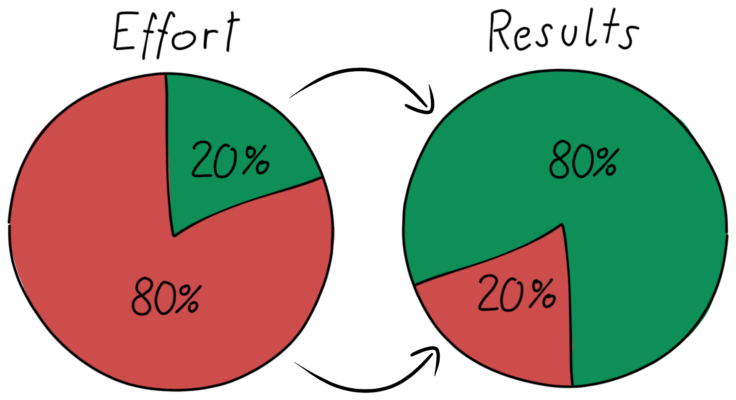

Over the years, the data landscape has changed. What you can do with data today has advanced considerably, and expectations of what is possible have risen with it. At the same time, the cost of storage has fallen dramatically, while the number of ways in which data is collected keeps multiplying.

Not all data is the same. Some data arrives at a rapid pace, constantly demanding to be collected and observed. Other data arrives at slower rates, but in very large chunks. You might be facing an advanced analytics problem, or one that requires machine learning. These are the challenges that our team of big data consultants seek to solve.

A big data architecture is designed to handle the ingestion, processing, and analysis of data that is too large or complex for traditional database systems. The threshold at which organisations enter into the big data realm differs, depending on the capabilities of the users and their tools. For some, it can mean hundreds of gigabytes of data, while for others it means hundreds of terabytes. As tools for working with big data sets advance, so does the meaning of big data. More often, this term relates to the value you can extract from your data sets through advanced analytics, rather than strictly the size of that data.

The evolving data architecture challenge

The cloud is rapidly changing the way applications are designed and how data is processed and stored. Choosing the best data architecture for a particular organisation’s goals is not straightforward: it requires a full understanding of data and business requirements, as well as a thorough knowledge of emerging technologies and best practice.

Traditional vs big data solutions

Data in traditional database systems is typically relational data with a pre-defined schema and a set of constraints to maintain referential integrity.

Often, data from multiple sources in the organisation is consolidated into a modern data platform, using an ETL process to move and transform the source data. ETL stands for Extract, Transform and Load. In this process, an ETL tool extracts the data from different RDBMS source systems, transforms it (for example, by applying calculations or concatenations), and then loads it into the target platform.
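As a rough illustration of the Extract, Transform and Load steps described above, here is a minimal Python sketch. It is not a description of any particular ETL tool: the in-memory SQLite databases stand in for real source and target systems, and the table names (`source_orders`, `order_totals`), column names and sample rows are all hypothetical.

```python
import sqlite3


def extract(conn):
    """Extract: read raw rows from a (hypothetical) relational source table."""
    return conn.execute(
        "SELECT first_name, last_name, quantity, unit_price FROM source_orders"
    ).fetchall()


def transform(rows):
    """Transform: concatenate the name fields and calculate an order total."""
    return [
        (f"{first} {last}", quantity * unit_price)
        for first, last, quantity, unit_price in rows
    ]


def load(conn, rows):
    """Load: write the transformed rows into a (hypothetical) target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS order_totals (customer TEXT, total REAL)"
    )
    conn.executemany("INSERT INTO order_totals VALUES (?, ?)", rows)
    conn.commit()


def run_etl():
    # In-memory databases stand in for real source and target systems.
    source = sqlite3.connect(":memory:")
    source.execute(
        "CREATE TABLE source_orders "
        "(first_name TEXT, last_name TEXT, quantity INTEGER, unit_price REAL)"
    )
    source.executemany(
        "INSERT INTO source_orders VALUES (?, ?, ?, ?)",
        [("Ada", "Lovelace", 2, 10.0), ("Alan", "Turing", 3, 5.0)],
    )

    target = sqlite3.connect(":memory:")
    load(target, transform(extract(source)))
    return target.execute(
        "SELECT customer, total FROM order_totals ORDER BY customer"
    ).fetchall()


if __name__ == "__main__":
    print(run_etl())
```

Keeping the three steps as separate functions mirrors the acronym and makes it easy to swap in a different source, transformation or target without touching the other steps.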

By contrast, a big data architecture is designed to handle the ingestion, processing, and analysis of data that is too large or complex for traditional database systems. The data may be processed in batch or in real time. These two categories are not mutually exclusive, and there is overlap between them. The skill is in selecting the relevant Azure services and the appropriate architecture for each scenario. There is also work to be done on the technology choices for data solutions in Azure, which can include open source options.
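To make the batch versus real-time distinction more concrete, the sketch below computes the same per-sensor totals in both styles. It is a generic, simplified illustration in plain Python, not tied to any Azure service; the function, class and sensor names are hypothetical.

```python
from collections import defaultdict


def batch_totals(events):
    """Batch: process a complete, already-collected data set in one pass."""
    totals = defaultdict(float)
    for sensor, value in events:
        totals[sensor] += value
    return dict(totals)


class StreamingTotals:
    """Real time: update the running result as each event arrives."""

    def __init__(self):
        self.totals = defaultdict(float)

    def on_event(self, sensor, value):
        self.totals[sensor] += value
        # The up-to-date total is available immediately after each event.
        return self.totals[sensor]


# Hypothetical sample events: (sensor name, reading).
events = [("a", 1.0), ("b", 2.0), ("a", 3.0)]

if __name__ == "__main__":
    print(batch_totals(events))

    stream = StreamingTotals()
    for sensor, value in events:
        print(sensor, stream.on_event(sensor, value))
```

Both approaches arrive at the same totals once every event has been seen. The difference is when results become available: after the whole data set has been processed, or continuously as data arrives.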

Data architecture consultancy services

Working with each client, and using key selection criteria and a capability matrix, the data architecture consultants at Adatis can help choose the right technology for your particular circumstances. The goal is to help you select the right data architecture for your scenario, and then select the Azure services and technologies that best fit those requirements.

Data Estate Modernisation in Microsoft Azure

As data infrastructures age, they become more expensive to manage, while delivering less business value. Businesses should consider modernising their data estate before its technical capabilities fall out of step with their strategic ambitions.

Get more business value from your data estate, with Microsoft and Adatis

Download our Data Estate Modernisation White Paper

Explore your data using Azure Data Explorer

In the big data era, most modern data architectures (if not all) deal with large, diverse data sources as part of their reporting needs, amounting to far more than just gigabytes of data. One concern that this volume of data has always brought is the time and effort required to load and/or transform that data before analysis can start.

This paper covers some important points about Azure Data Explorer, including its key features, use cases, architecture, collaboration methods and related services that utilise the same backend engine.

Read our Azure Data Explorer White Paper
